Back up Cassandra on Kubernetes

Follow the procedures in this topic to create pre-backup and post-backup rules in Portworx Backup so that you can take application-consistent backups of Cassandra on Kubernetes in production.

By default, Cassandra is resilient to node failures. However, you need Cassandra backups to recover from the following scenarios:

- Unauthorized deletions

- Major failures that require you to rebuild your cluster

- Corrupt data

- Point-in-time rollbacks

- Disk failure

Cassandra provides an internal snapshot mechanism for taking backups with a tool called nodetool. You can configure this tool to provide incremental or full snapshot-based backups of the data on the node. When it takes a snapshot, nodetool flushes data from memtables to disk and creates hard links to the SSTable files on the node.
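The hard-link behavior explains both why these snapshots are quick to take and why they stay tied to the node's local disk. The following Python sketch imitates the idea in a temporary directory. It does not call nodetool, and the file name and directory layout are hypothetical stand-ins rather than Cassandra's actual on-disk layout.

```python
import os
import tempfile


def snapshot_by_hardlink(data_dir, snapshot_dir):
    """Hard-link every regular file in data_dir into snapshot_dir.

    Returns the sorted list of file names that were linked.
    """
    os.makedirs(snapshot_dir, exist_ok=True)
    linked = []
    for name in sorted(os.listdir(data_dir)):
        src = os.path.join(data_dir, name)
        if os.path.isfile(src):
            # A hard link is a second directory entry for the same data,
            # so no bytes are copied.
            os.link(src, os.path.join(snapshot_dir, name))
            linked.append(name)
    return linked


with tempfile.TemporaryDirectory() as root:
    data_dir = os.path.join(root, "data")
    os.makedirs(data_dir)

    # Hypothetical stand-in for an SSTable file written by a flush.
    sstable = os.path.join(data_dir, "example-Data.db")
    with open(sstable, "wb") as f:
        f.write(b"flushed rows")

    snap_dir = os.path.join(root, "snapshots", "demo")
    linked = snapshot_by_hardlink(data_dir, snap_dir)
    snap_file = os.path.join(snap_dir, "example-Data.db")

    # Both names point at the same inode on the same file system.
    same_inode = os.stat(sstable).st_ino == os.stat(snap_file).st_ino

    # Removing the original name leaves the snapshot readable.
    os.remove(sstable)
    with open(snap_file, "rb") as f:
        survived = f.read()
```

Because a hard link must live on the same file system as the file it points to, a snapshot taken this way cannot protect against losing the disk itself, which is one reason to copy the data to a separate backup target.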

However, this method has two disadvantages: you must run nodetool on every Cassandra node, and the snapshots stay on local disk, which increases the overall storage footprint. Portworx by Pure Storage suggests backing up the Cassandra PVCs at the block level and storing the backups in a space-efficient object storage target. Portworx lets you combine techniques that Cassandra recommends, such as flushing data to disk, with pre-backup and post-backup rules for the application, giving you efficient, Kubernetes-native backups of Cassandra data.

Portworx Backup allows you to set up pre-backup and post-backup rules that run before a backup, after a backup, or both. For Cassandra, you can create a custom flush, compaction, or verify rule to ensure a healthy and consistent dataset before and after a backup occurs. Rules can run on one pod or on all pods associated with Cassandra; running on all pods matters because nodetool commands must run on every node.
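To make the "run on every pod" requirement concrete, the following Python sketch builds the kubectl exec command lines you would otherwise run by hand, one per pod. It only illustrates the manual equivalent and does not show how Portworx Backup executes rules internally. The pod names, the default namespace, and the function name are assumptions made for this example.

```python
import shlex


def build_exec_commands(pods, action, namespace="default", container=None):
    """Return one `kubectl exec` argument list per pod for the given action.

    Nothing is executed here; the caller decides whether to run the commands.
    """
    commands = []
    for pod in pods:
        argv = ["kubectl", "exec", "-n", namespace, pod]
        if container:
            # Name the container explicitly, for example when a sidecar
            # shares the pod.
            argv += ["-c", container]
        # Everything after "--" is the command run inside the container.
        argv += ["--"] + shlex.split(action)
        commands.append(argv)
    return commands


# Hypothetical pod names for a three-node Cassandra StatefulSet.
pods = ["cassandra-0", "cassandra-1", "cassandra-2"]
commands = build_exec_commands(pods, "nodetool flush -- newkeyspace")

for argv in commands:
    print(shlex.join(argv))
```

A backup rule with a pod selector replaces this loop: the selector picks the pods, and the action is the command run in each of them.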

For more information on how to run Cassandra on Kubernetes, refer to the Cassandra on Kubernetes on Portworx topic.

Prerequisites

- Cassandra pods must use the app=cassandra label.

- This example uses the Cassandra keyspace newkeyspace. To use these rules with another keyspace, replace newkeyspace with your own keyspace name.

Create rules for Cassandra

The following procedures explain how to create rules for Cassandra that run before and after the backup operation.

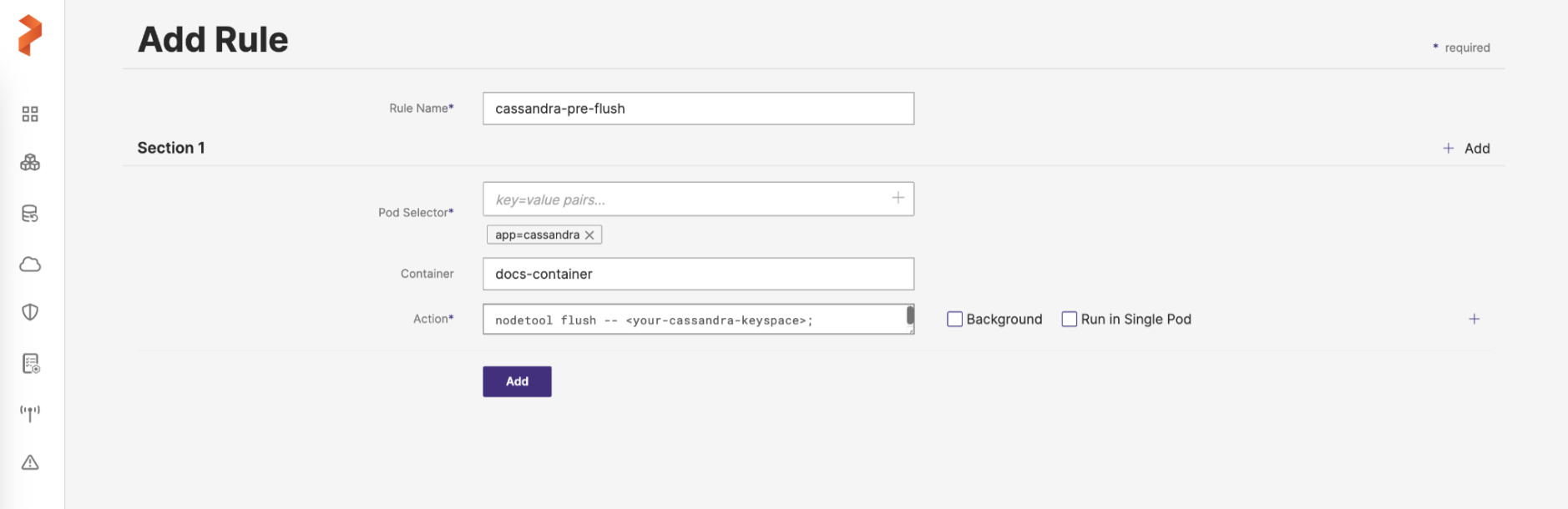

Create a pre-exec backup rule for Cassandra

Create a rule that runs nodetool flush for the newkeyspace keyspace before the backup. This step is essential because Portworx takes a snapshot of the backing volume before it places that data in the backup target.

- Log in and go to the Portworx Backup home page.

- In the left navigation pane, click Rules > Create Rules > Add Rule.

- On the Add Rule page, provide the following details:

  - Rule name: enter a name for your backup rule.

  - Pod Selector: enter the following app label:

    app=cassandra

  - Container: if you use mTLS with the Linkerd service mesh, this field is mandatory; enter the container name. Otherwise, leave this field blank.

  - Action: enter the following action:

    nodetool flush -- <your-cassandra-keyspace>;

- Click Add.

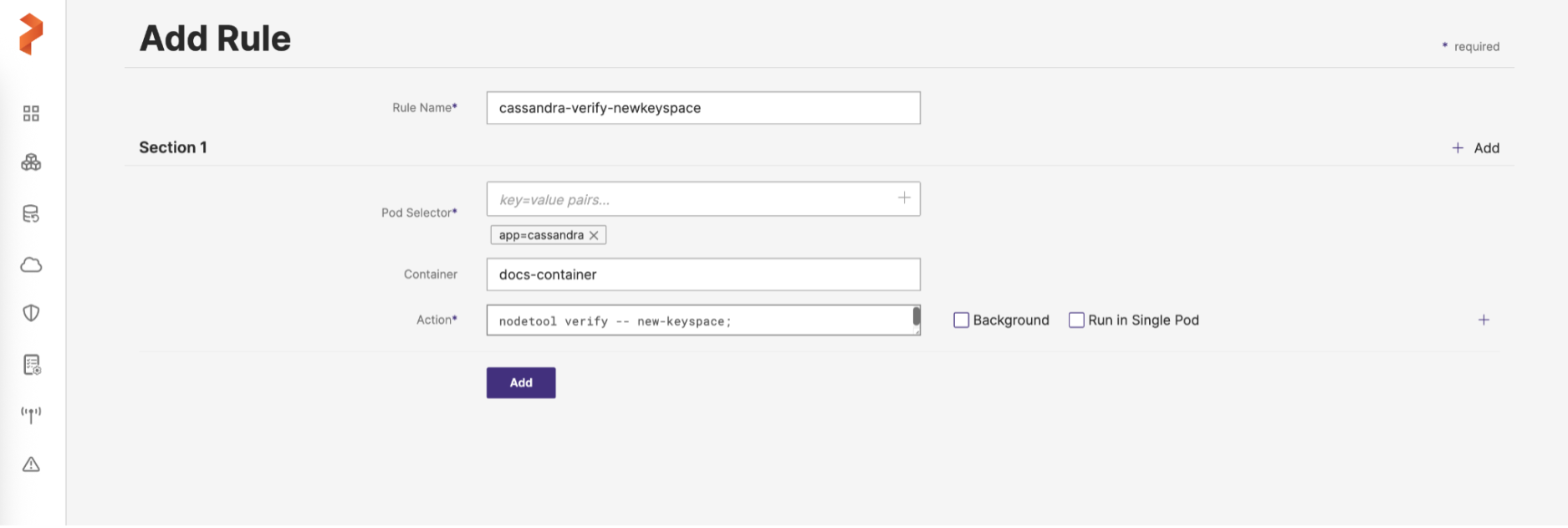

Create a post-exec backup rule for Cassandra

A post-exec backup rule for Cassandra is not as important as the pre-exec backup rule described above. However, for completeness in production, and to verify that a keyspace is not corrupt after the backup occurs, create a rule that runs nodetool verify. The verify command validates (checks the data checksums of) one or more tables.

- From the home page, navigate to Settings > Rules > Add New.

- In the Add Rule window, provide the following details:

  - Rule name: enter a name for your backup rule.

  - Pod Selector: enter the following app label:

    app=cassandra

  - Container: if you use mTLS with the Linkerd service mesh, this field is mandatory; enter the container name. Otherwise, leave this field blank.

  - Action: enter the following action:

    nodetool verify -- <your-cassandra-keyspace>;

- Click Add.
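Both rule actions contain the <your-cassandra-keyspace> placeholder, which you must replace before you save the rule. The following Python sketch fills in the placeholder for the pre-exec and post-exec actions shown above and rejects keyspace names that contain unexpected characters. The name check is a conservative assumption made for this example, not a rule enforced by Portworx Backup, and the helper name is hypothetical.

```python
import re

# Action templates copied from the rule forms above.
ACTION_TEMPLATES = {
    "pre-exec": "nodetool flush -- <your-cassandra-keyspace>;",
    "post-exec": "nodetool verify -- <your-cassandra-keyspace>;",
}
PLACEHOLDER = "<your-cassandra-keyspace>"

# Assumption: accept only letters, digits, and underscores, starting with
# a letter, up to 48 characters. Adjust this if your names differ.
KEYSPACE_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,47}")


def render_action(stage, keyspace):
    """Return the rule action for a stage with the keyspace filled in."""
    if not KEYSPACE_PATTERN.fullmatch(keyspace):
        raise ValueError(f"unexpected keyspace name: {keyspace!r}")
    return ACTION_TEMPLATES[stage].replace(PLACEHOLDER, keyspace)


pre_action = render_action("pre-exec", "newkeyspace")
post_action = render_action("post-exec", "newkeyspace")
print(pre_action)
print(post_action)
```

Checking the name before substituting it guards against pasting a value that would change the meaning of the command, for example a name followed by a second shell command.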

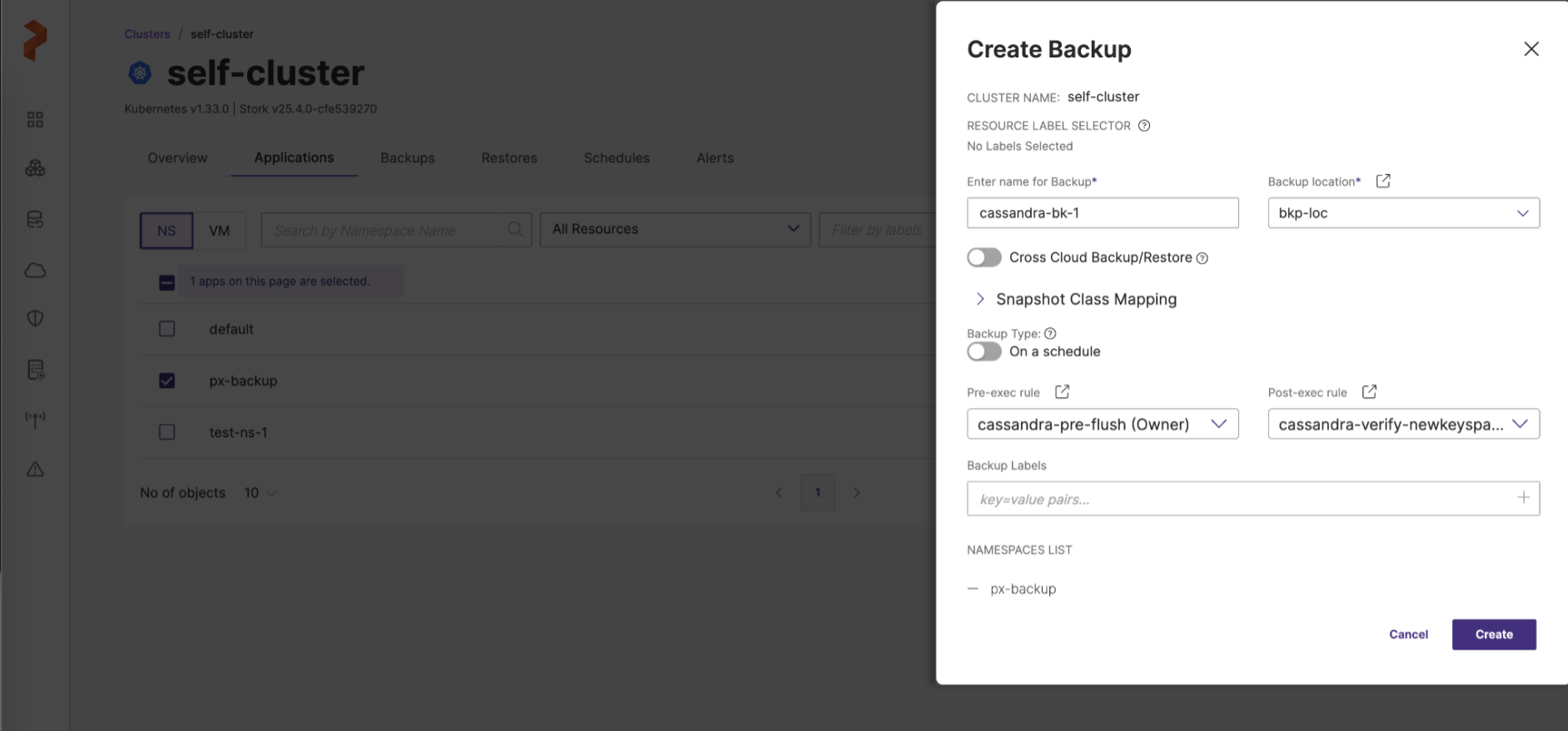

Use rules during Cassandra backup operations

- During the backup creation process, select the rules in the pre-exec and post-exec drop-down lists.

- After you enter all the information in the Create Backup window, click Create. For more information on how to create a backup, refer to Create a backup.
