Portworx install on PKS on vSphere using local datastores

The deployment model on this page is still in the alpha stage. If possible, Portworx by Pure Storage recommends that you instead follow Portworx install on PKS on vSphere using shared datastores.

Prerequisites

- This page assumes you have a running etcd cluster. If not, return to Install on PKS.

Architecture

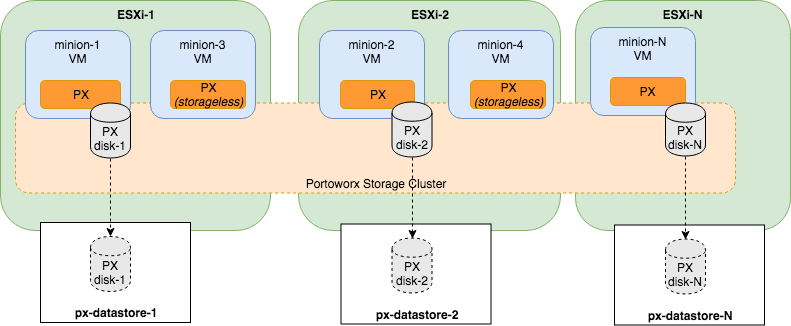

Below diagram gives an overview of the Portworx architecture for PKS on vSphere using local datastores.

- Portworx runs as a DaemonSet, so the Portworx daemon runs on each Kubernetes worker node.

- A single Portworx instance on each ESXi host creates its disks on the configured datastore. If there are two worker VMs on a single ESXi host, the Portworx instance on the first worker VM creates and manages the disks. Subsequent Portworx instances on that ESXi host still join the cluster, but they function as storageless nodes.

- Portworx aggregates all of the disks into a single storage cluster. End users can carve PVCs (Persistent Volume Claims), PVs (Persistent Volumes), and snapshots from this storage cluster.

- Portworx tracks and manages the disks that it creates. If a VM fails and a new VM spins up, Portworx on the new VM can attach to the same disk that the node on the failed VM previously created.
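
As a rough illustration of how an end user might carve a volume from the storage cluster, the sketch below defines a StorageClass and a PVC that uses it. The names `px-repl2-sc` and `px-demo-pvc`, the replication factor, and the requested size are assumptions chosen for the example, and the sketch assumes that the in-tree `kubernetes.io/portworx-volume` provisioner is available in your Kubernetes version.

```yaml
# Hypothetical StorageClass: the name and the repl value are example choices.
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: px-repl2-sc
provisioner: kubernetes.io/portworx-volume
parameters:
  repl: "2"                  # keep two replicas of each volume
---
# Hypothetical PVC that requests a volume from the StorageClass above.
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: px-demo-pvc
spec:
  storageClassName: px-repl2-sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi           # example size only
```

A replication factor above 1 only helps if enough storage nodes exist. In this deployment model that means enough ESXi hosts, because only one Portworx instance per host contributes disks.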

ESXi datastore preparation

On each ESXi host in the cluster, create a local datastore that is dedicated to Portworx storage. Use a common prefix for the names of these datastores. You will provide this prefix during the Portworx installation.

Portworx installation

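- Create a secret that holds the vSphere credentials. See Secret for vSphere credentials under References.

- Deploy the Portworx spec. See The Portworx spec under References.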

Once you have the spec, proceed below.

Wipe Portworx installation

Follow these steps to wipe your entire Portworx installation on PKS.

- Run cluster-scoped wipe:

curl -fsL "https://install.portworx.com/px-wipe" | bash -s -- -T pks

- Go to each virtual machine and delete the additional VMDKs that Portworx created.

References

Secret for vSphere credentials
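
The secret template itself is not reproduced on this page, so the following is only a minimal sketch of what such a secret could look like. It is inferred from the `secretKeyRef` entries in the Portworx spec, which read the keys `VSPHERE_USER` and `VSPHERE_PASSWORD` from a secret named `px-vsphere-secret`. The placeholder values are assumptions, and the use of `stringData` (plain text that Kubernetes encodes on creation) rather than base64-encoded `data` is a choice made for readability.

```yaml
# Sketch only: vSphere credentials consumed by the Portworx DaemonSet.
apiVersion: v1
kind: Secret
metadata:
  name: px-vsphere-secret    # name referenced by secretKeyRef in the spec
  namespace: kube-system     # must match the namespace of the DaemonSet
type: Opaque
stringData:
  VSPHERE_USER: "REPLACE-WITH-VCENTER-USER"          # placeholder
  VSPHERE_PASSWORD: "REPLACE-WITH-VCENTER-PASSWORD"  # placeholder
```

The secret must exist in `kube-system` before the Portworx pods start, because a pod can only reference secrets in its own namespace.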

The Portworx spec

Replace the following values in the spec below to match your environment:

- PX etcd endpoint in the -k argument.

- Cluster ID in the -c argument. Choose a unique cluster ID.

- VSPHERE_VCENTER: Hostname of the vCenter server.

- VSPHERE_DATASTORE_PREFIX: Prefix of the ESXi datastores that Portworx will use for storage.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: px-account
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-get-put-list-role
rules:
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["watch", "get", "update", "list"]
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["delete", "get", "list"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims", "persistentvolumes"]
  verbs: ["get", "list"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get", "list", "update", "create"]
- apiGroups: ["extensions"]
  resources: ["podsecuritypolicies"]
  resourceNames: ["privileged"]
  verbs: ["use"]
- apiGroups: ["portworx.io"]
  resources: ["volumeplacementstrategies"]
  verbs: ["get", "list"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-role-binding
subjects:
- kind: ServiceAccount
  name: px-account
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: node-get-put-list-role
  apiGroup: rbac.authorization.k8s.io
---
kind: Service
apiVersion: v1
metadata:
  name: portworx-service
  namespace: kube-system
  labels:
    name: portworx
spec:
  selector:
    name: portworx
  ports:
    - name: px-api
      protocol: TCP
      port: 9001
      targetPort: 9001

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: portworx
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: portworx
  minReadySeconds: 0
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        name: portworx
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: px/enabled
                operator: NotIn
                values:
                - "false"
              - key: node-role.kubernetes.io/master
                operator: DoesNotExist
      hostNetwork: true
      hostPID: true
      containers:
        - name: portworx
          image: portworx/oci-monitor:2.0.2.1
          imagePullPolicy: Always
          args:
            ["-c", "px-pks-demo-1", "-k", "etcd:http://PUT-YOUR-ETCD-HOST:PUT-YOUR-ETCD-PORT", "-x", "kubernetes", "-s", "type=lazyzeroedthick", "--keep-px-up"]
          env:
            - name: "PX_TEMPLATE_VERSION"
              value: "v2"
            - name: "PRE-EXEC"
              value: 'if [ ! -x /bin/systemctl ]; then apt-get update; apt-get install -y systemd; fi'
            - name: VSPHERE_VCENTER
              value: pks-vcenter.k8s-demo.com
            - name: VSPHERE_VCENTER_PORT
              value: "443"
            - name: VSPHERE_DATASTORE_PREFIX
              value: "px-datastore"
            - name: VSPHERE_INSTALL_MODE
              value: "local"
            - name: VSPHERE_INSECURE
              value: "true"
            - name: VSPHERE_USER
              valueFrom:
                secretKeyRef:
                  name: px-vsphere-secret
                  key: VSPHERE_USER
            - name: VSPHERE_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: px-vsphere-secret
                  key: VSPHERE_PASSWORD
          livenessProbe:
            periodSeconds: 30
            initialDelaySeconds: 840 # allow image pull in slow networks
            httpGet:
              host: 127.0.0.1
              path: /status
              port: 9001
          readinessProbe:
            periodSeconds: 10
            httpGet:
              host: 127.0.0.1
              path: /health
              port: 9015
          terminationMessagePath: "/tmp/px-termination-log"
          securityContext:
            privileged: true
          volumeMounts:
            - name: dockersock
              mountPath: /var/run/docker.sock
            - name: etcpwx
              mountPath: /etc/pwx
            - name: optpwx
              mountPath: /opt/pwx
            - name: proc1nsmount
              mountPath: /host_proc/1/ns
            - name: sysdmount
              mountPath: /etc/systemd/system
      restartPolicy: Always
      serviceAccountName: px-account
      volumes:
        - name: dockersock
          hostPath:
            path: /var/vcap/sys/run/docker/docker.sock
        - name: etcpwx
          hostPath:
            path: /var/vcap/store/etc/pwx
        - name: optpwx
          hostPath:
            path: /var/vcap/store/opt/pwx
        - name: proc1nsmount
          hostPath:
            path: /proc/1/ns
        - name: sysdmount
          hostPath:
            path: /etc/systemd/system

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: portworx-pvc-controller-account
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: portworx-pvc-controller-role
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["create","delete","get","list","update","watch"]
- apiGroups: [""]
  resources: ["persistentvolumes/status"]
  verbs: ["update"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "update", "watch"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims/status"]
  verbs: ["update"]
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["create", "delete", "get", "list", "watch"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["endpoints", "services"]
  verbs: ["create", "delete", "get"]
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get", "list"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get", "list"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "patch", "update"]
- apiGroups: [""]
  resources: ["serviceaccounts"]
  verbs: ["get"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get", "create", "update"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: portworx-pvc-controller-role-binding
subjects:
- kind: ServiceAccount
  name: portworx-pvc-controller-account
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: portworx-pvc-controller-role
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
  labels:
    tier: control-plane
  name: portworx-pvc-controller
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: portworx-pvc-controller
  replicas: 3
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ""
      labels:
        name: portworx-pvc-controller
        tier: control-plane
    spec:
      containers:
      - command:
        - kube-controller-manager
        - --leader-elect=true
        - --address=0.0.0.0
        - --controllers=persistentvolume-binder,persistentvolume-expander
        - --use-service-account-credentials=true
        - --leader-elect-resource-lock=configmaps
        image: gcr.io/google_containers/kube-controller-manager-amd64:v1.10.4
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 10252
            scheme: HTTP
          initialDelaySeconds: 15
          timeoutSeconds: 15
        name: portworx-pvc-controller-manager
        resources:
          requests:
            cpu: 200m
      hostNetwork: true
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "name"
                operator: In
                values:
                - portworx-pvc-controller
            topologyKey: "kubernetes.io/hostname"
      serviceAccountName: portworx-pvc-controller-account

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: stork-config
  namespace: kube-system
data:
  policy.cfg: |-
    {
      "kind": "Policy",
      "apiVersion": "v1",
      "extenders": [
        {
          "urlPrefix": "http://stork-service.kube-system.svc:8099",
          "apiVersion": "v1beta1",
          "filterVerb": "filter",
          "prioritizeVerb": "prioritize",
          "weight": 5,
          "enableHttps": false,
          "nodeCacheCapable": false
        }
      ]
    }
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: stork-account
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: stork-role
rules:
- apiGroups: [""]
  resources: ["pods", "pods/exec"]
  verbs: ["get", "list", "delete", "create"]
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["list", "watch", "create", "update", "patch"]
- apiGroups: ["stork.libopenstorage.org"]
  resources: ["rules"]
  verbs: ["get", "list"]
- apiGroups: ["stork.libopenstorage.org"]
  resources: ["migrations", "clusterpairs", "groupvolumesnapshots"]
  verbs: ["get", "list", "watch", "update", "patch"]
- apiGroups: ["apiextensions.k8s.io"]
  resources: ["customresourcedefinitions"]
  verbs: ["create", "get"]
- apiGroups: ["volumesnapshot.external-storage.k8s.io"]
  resources: ["volumesnapshots"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["volumesnapshot.external-storage.k8s.io"]
  resources: ["volumesnapshotdatas"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get", "create", "update"]
- apiGroups: [""]
  resources: ["services"]
  verbs: ["get"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["*"]
  resources: ["deployments", "deployments/extensions"]
  verbs: ["list", "get", "watch", "patch", "update", "initialize"]
- apiGroups: ["*"]
  resources: ["statefulsets", "statefulsets/extensions"]
  verbs: ["list", "get", "watch", "patch", "update", "initialize"]
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["list", "get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: stork-role-binding
subjects:
- kind: ServiceAccount
  name: stork-account
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: stork-role
  apiGroup: rbac.authorization.k8s.io
---
kind: Service
apiVersion: v1
metadata:
  name: stork-service
  namespace: kube-system
spec:
  selector:
    name: stork
  ports:
    - protocol: TCP
      port: 8099
      targetPort: 8099
---
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
  labels:
    tier: control-plane
  name: stork
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: stork
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  replicas: 3
  template:
    metadata:
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ""
      labels:
        name: stork
        tier: control-plane
    spec:
      containers:
      - command:
        - /stork
        - --driver=pxd
        - --verbose
        - --leader-elect=true
        imagePullPolicy: Always
        image: openstorage/stork:2.1.1
        resources:
          requests:
            cpu: '0.1'
        name: stork
      hostPID: false
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "name"
                operator: In
                values:
                - stork
            topologyKey: "kubernetes.io/hostname"
      serviceAccountName: stork-account

---

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: stork-snapshot-sc
provisioner: stork-snapshot
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: stork-scheduler-account
  namespace: kube-system

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: stork-scheduler-role
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "update"]
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "patch", "update"]
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["create"]
- apiGroups: [""]
  resourceNames: ["kube-scheduler"]
  resources: ["endpoints"]
  verbs: ["delete", "get", "patch", "update"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["delete", "get", "list", "watch"]
- apiGroups: [""]
  resources: ["bindings", "pods/binding"]
  verbs: ["create"]
- apiGroups: [""]
  resources: ["pods/status"]
  verbs: ["patch", "update"]
- apiGroups: [""]
  resources: ["replicationcontrollers", "services"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["apps", "extensions"]
  resources: ["replicasets"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["apps"]
  resources: ["statefulsets"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["policy"]
  resources: ["poddisruptionbudgets"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims", "persistentvolumes"]
  verbs: ["get", "list", "watch"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: stork-scheduler-role-binding
subjects:
- kind: ServiceAccount
  name: stork-scheduler-account
  namespace: kube-system
roleRef:
  kind: ClusterRole
  name: stork-scheduler-role
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    component: scheduler
    tier: control-plane
    name: stork-scheduler
  name: stork-scheduler
  namespace: kube-system
spec:
  selector:
    matchLabels:
      component: scheduler
  replicas: 3
  template:
    metadata:
      labels:
        component: scheduler
        tier: control-plane
      name: stork-scheduler
    spec:
      containers:
      - command:
        - /usr/local/bin/kube-scheduler
        - --address=0.0.0.0
        - --leader-elect=true
        - --scheduler-name=stork
        - --policy-configmap=stork-config
        - --policy-configmap-namespace=kube-system
        - --lock-object-name=stork-scheduler
        image: gcr.io/google_containers/kube-scheduler-amd64:v1.10.4
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10251
          initialDelaySeconds: 15
        name: stork-scheduler
        readinessProbe:
          httpGet:
            path: /healthz
            port: 10251
        resources:
          requests:
            cpu: '0.1'
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: "name"
                operator: In
                values:
                - stork-scheduler
            topologyKey: "kubernetes.io/hostname"
      hostPID: false
      serviceAccountName: stork-scheduler-account

Limitations

If a Portworx storage node goes down and the cluster is still in quorum, a storageless node will not automatically replace the storage node.