Use Pure Storage Cloud Dedicated as Backend Storage

Pure Storage Cloud Dedicated (PSC Dedicated) is a storage solution from Pure Storage that delivers enterprise-grade block storage capabilities within both Microsoft Azure and Amazon Web Services (AWS). It enables consistent operations and seamless workload mobility across on-premises, AWS, and Azure environments.

Key features include:

- Data mobility for disaster recovery and cloud migration

- Built-in data services, including compression, deduplication, and snapshots

- High availability through deployment across multiple availability zones

- Cost efficiency via thin provisioning and flexible pricing models

This combination of unified management and robust fault tolerance makes PSC Dedicated well-suited for mission-critical applications, ensuring business continuity, data protection, and operational resilience.

Architecturally, PSC Dedicated is based on Pure Storage’s FlashArray technology and delivers high availability and performance through a software-defined design optimized for cloud environments. It maintains consistent IOPS, low latency, and built-in redundancy across cloud infrastructure. For more information, see the Pure Storage Cloud documentation.

Before you begin preparing your environment, ensure that all system requirements are met.

Install PSC Dedicated

PX-CSI supports deploying PSC Dedicated on both Amazon Web Services (AWS) and Microsoft Azure. To deploy PSC Dedicated, refer to the following documentation:

Configure your PSC Dedicated

Before you install PX-CSI, verify network connectivity between your cluster nodes and PSC Dedicated.

- Ensure that each node can access the PSC Dedicated management IP address.

- Ensure your cluster has an operational PSC Dedicated with an existing data plane connectivity layout (iSCSI).

- Ensure that storage node iSCSI initiators are on the same VLAN as the PSC Dedicated iSCSI target ports.

- Obtain an API token for a user on your PSC Dedicated with at least `storage_admin` permissions. See your array documentation for instructions.
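The connectivity prerequisites above can be spot-checked from a node before installing PX-CSI. The following is a minimal sketch, not part of PX-CSI: the function names are hypothetical, port 443 for the management endpoint is an assumption (HTTPS), and port 3260 is the standard iSCSI port. A successful TCP connection only shows that the port is reachable; it does not validate VLAN placement or credentials.

```python
import socket


def can_reach(host, port, timeout=3.0, connect=socket.create_connection):
    """Return True if a TCP connection to (host, port) succeeds within timeout.

    `connect` is injectable so the check can be exercised without a network.
    """
    try:
        conn = connect((host, port), timeout)
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
    conn.close()
    return True


def check_endpoints(endpoints, timeout=3.0, connect=socket.create_connection):
    """Map each (host, port) pair to a reachability flag."""
    return {(host, port): can_reach(host, port, timeout, connect)
            for host, port in endpoints}


# Example (placeholders, run on each node):
# check_endpoints([("<psc-dedicated-management-endpoint>", 443),   # assumed HTTPS port
#                  ("<psc-dedicated-iscsi-target>", 3260)])        # iSCSI port
```

Running the check on every node, rather than once from a workstation, matches the requirement that each node can reach the management IP address.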

Configure the multipath.conf file

- PSC Dedicated and PX-CSI do not support user-friendly names. Set `user_friendly_names` to `no` before installing PX-CSI to keep device names consistent.

- Add `polling_interval 10` as recommended in RHEL settings. This defines how often the system checks for path status updates.

- To avoid interference from the `multipathd` service during PX-CSI volume operations, set the pxd device denylist rule.

Set `find_multipaths` to `no` in the defaults section because each controller has only one iSCSI path.

Your /etc/multipath.conf file should follow this structure:

    blacklist {
        devnode "^pxd[0-9]*"
        devnode "^pxd*"
        device {
            vendor "VMware"
            product "Virtual disk"
        }
    }
    defaults {
        polling_interval 10
        find_multipaths no
    }
    devices {
        device {
            vendor "NVME"
            product "Pure Storage FlashArray"
            path_selector "queue-length 0"
            path_grouping_policy group_by_prio
            prio ana
            failback immediate
            fast_io_fail_tmo 10
            user_friendly_names no
            no_path_retry 0
            features 0
            dev_loss_tmo 60
        }
        device {
            vendor "PURE"
            product "FlashArray"
            path_selector "service-time 0"
            hardware_handler "1 alua"
            path_grouping_policy group_by_prio
            prio alua
            failback immediate
            path_checker tur
            fast_io_fail_tmo 10
            user_friendly_names no
            no_path_retry 0
            features 0
            dev_loss_tmo 600
        }
    }
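Before distributing the file, it can help to confirm that the settings called out in this section are present. The sketch below is an illustrative checker, not a full multipath.conf parser and not a PX-CSI tool: the function names are hypothetical, and it only looks for `polling_interval 10` and `find_multipaths no` in `defaults`, a pxd `devnode` rule in `blacklist`, and the absence of `user_friendly_names yes`.

```python
import re

# Values taken from the defaults section of the structure shown in this section.
REQUIRED_DEFAULTS = {"polling_interval": "10", "find_multipaths": "no"}


def section_body(conf_text, name):
    """Return the text inside the first `name { ... }` section, or None."""
    match = re.search(r"^\s*" + re.escape(name) + r"\s*\{", conf_text, re.MULTILINE)
    if match is None:
        return None
    depth = 1
    for index in range(match.end(), len(conf_text)):
        char = conf_text[index]
        if char == "{":
            depth += 1
        elif char == "}":
            depth -= 1
            if depth == 0:
                return conf_text[match.end():index]
    return None  # unbalanced braces


def check_multipath_conf(conf_text):
    """Return a list of problems; an empty list means none were found."""
    problems = []

    # Collect `key value` pairs from the defaults section.
    defaults = {}
    for line in (section_body(conf_text, "defaults") or "").splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2 and not parts[0].startswith("#"):
            defaults[parts[0]] = parts[1].strip().strip('"')
    for key, expected in REQUIRED_DEFAULTS.items():
        if defaults.get(key) != expected:
            problems.append(f"defaults: expected '{key} {expected}'")

    # The denylist must keep multipathd away from pxd devices.
    blacklist = section_body(conf_text, "blacklist") or ""
    if not re.search(r'devnode\s+"\^pxd', blacklist):
        problems.append("blacklist: no devnode rule for pxd devices")

    # User-friendly names are not supported anywhere in the file.
    if re.search(r"^\s*user_friendly_names\s+yes\b", conf_text, re.MULTILINE):
        problems.append("user_friendly_names must be set to no")

    return problems
```

For example, `check_multipath_conf(open("/etc/multipath.conf").read())` returns an empty list when all three conditions hold.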

Configure udev rules

Configure queue settings with udev rules on all nodes. For recommended settings, see Applying Queue Settings with udev.

Apply multipath and udev configurations

Apply the multipath and udev configurations created in the previous sections so the changes take effect.

- OpenShift Container Platform

- Other Kubernetes platforms

Use a MachineConfig in OpenShift to apply multipath and udev configuration files consistently across all nodes.

- Encode the configuration files in base64 format and add them to the MachineConfig, as shown in the following example:

      apiVersion: machineconfiguration.openshift.io/v1
      kind: MachineConfig
      metadata:
        creationTimestamp:
        labels:
          machineconfiguration.openshift.io/role: worker
        name: <your-machine-config-name>
      spec:
        config:
          ignition:
            version: 3.2.0
          storage:
            files:
            - contents:
                source: data:text/plain;charset=utf-8;base64,<base64-encoded-multipath-conf>
              filesystem: root
              mode: 0644
              overwrite: true
              path: /etc/multipath.conf
            - contents:
                source: data:text/plain;charset=utf-8;base64,<base64-encoded-udev_conf>
              filesystem: root
              mode: 0644
              overwrite: true
              path: /etc/udev/rules.d/99-pure-storage.rules
          systemd:
            units:
            - enabled: true
              name: iscsid.service
            - enabled: true
              name: multipathd.service

- Apply the MachineConfig to your cluster:

      oc apply -f <your-machine-config-name>.yaml
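The `source` values in the MachineConfig are data URLs that carry the base64-encoded file contents. As a minimal sketch of that encoding step (the helper names are hypothetical; only the data URL prefix comes from the example), the value for each file could be produced like this:

```python
import base64

# Prefix used by the `source` fields in the MachineConfig example.
DATA_URL_PREFIX = "data:text/plain;charset=utf-8;base64,"


def to_data_url(content: bytes) -> str:
    """Encode raw file contents as a base64 data URL."""
    return DATA_URL_PREFIX + base64.b64encode(content).decode("ascii")


def from_data_url(url: str) -> bytes:
    """Decode a data URL back to raw bytes, for verifying an existing value."""
    if not url.startswith(DATA_URL_PREFIX):
        raise ValueError("unexpected data URL prefix")
    return base64.b64decode(url[len(DATA_URL_PREFIX):])


# Example: build the value for /etc/multipath.conf from a local copy of the file.
# with open("multipath.conf", "rb") as handle:
#     print(to_data_url(handle.read()))
```

Decoding the value again with `from_data_url` is a quick way to confirm that the embedded file matches what you intended to ship.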

On other Kubernetes platforms, apply the configurations directly on each node:

- Update the `multipath.conf` file as described in Configure the multipath.conf file and restart the `multipathd` service on all nodes:

      systemctl restart multipathd.service

- Create the udev rules as described in Configure udev rules and apply them on all nodes:

      udevadm control --reload-rules && udevadm trigger

Set up user access in PSC Dedicated

To establish secure communication between PX-CSI and PSC Dedicated, create a user account and generate an API token. This token allows PX-CSI to perform storage operations on behalf of the authorized user.

- PSC Dedicated without secure multi-tenancy

- PSC Dedicated with secure multi-tenancy

-

Create a user:

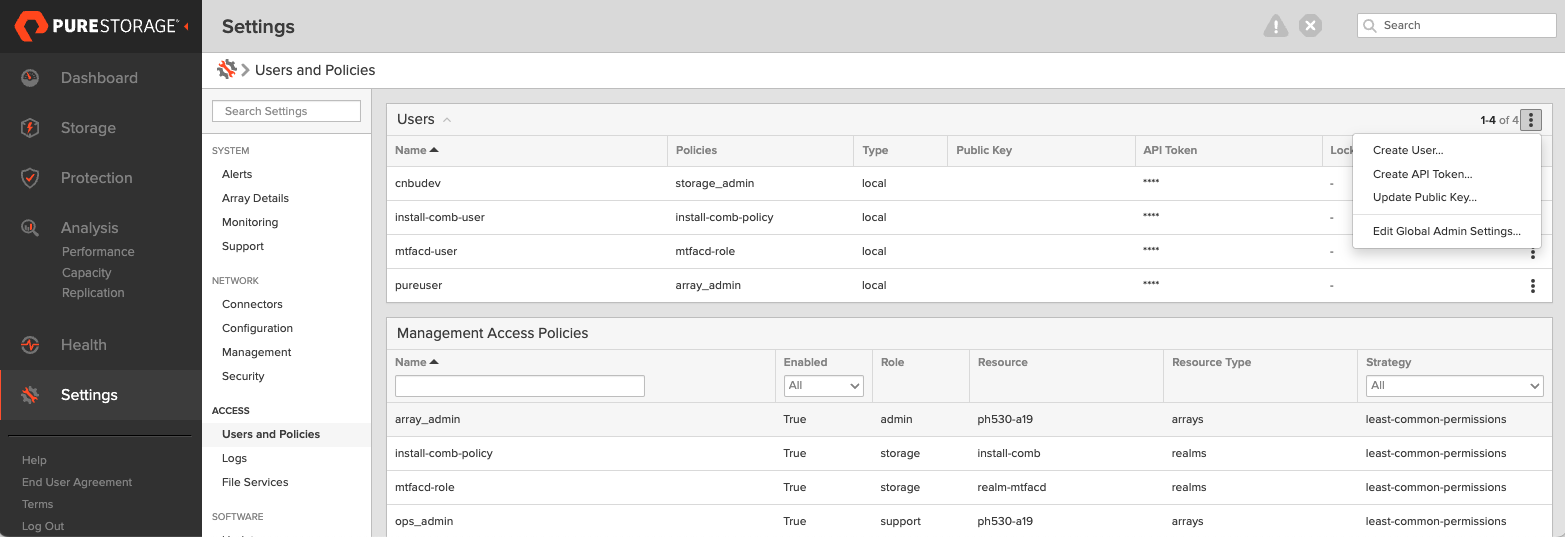

- In the PSC Dedicated dashboard, select Settings in the left pane.

- On the Settings page, select Access.

- In the Users section, select the vertical ellipsis in the top-right corner and choose Create User:

- In Create User, enter the details and set the role to Storage Admin.

- Select Create to add the user.

-

Generate an API token:

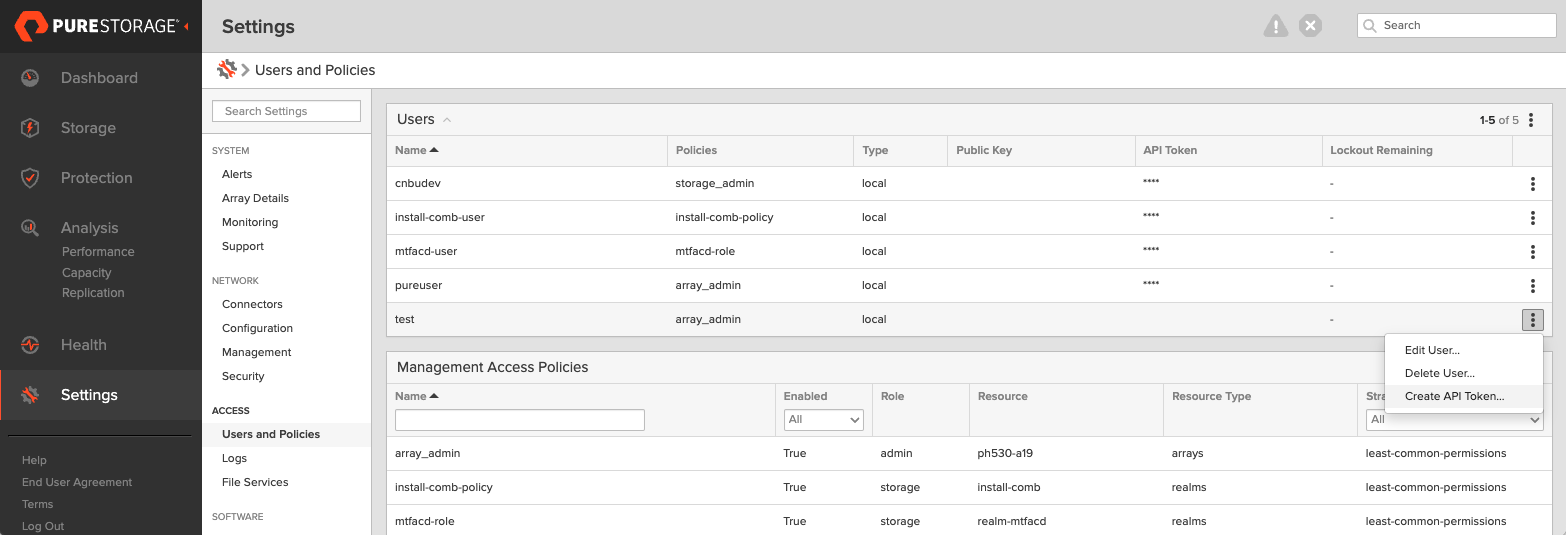

- Select the user in Users, open the vertical ellipsis for the username, and choose Create API Token:

- In API Token, leave Expires in blank if you want a token that never expires, then select Create.

- Save the token for later use.

- Select the user in Users, open the vertical ellipsis for the username, and choose Create API Token:

For PSC Dedicated with secure multi-tenancy:

- Create a realm for each customer. Volumes created by PX-CSI will be placed in this realm.

      purerealm create <customer1-realm>

      Name               Quota Limit
      <customer1-realm>  -

- Create a pod in the realm. A pod defines where volumes are placed.

      purepod create <customer1-realm>::<fa-pod-name>

- Create a policy for the realm. Ensure you have administrative privileges.

      purepolicy management-access create --realm <customer1-realm> --role storage --aggregation-strategy all-permissions <realm-policy>

  For basic privileges:

      purepolicy management-access create --realm <customer1-realm> --role storage --aggregation-strategy least-common-permissions <realm-policy>

- Verify the policy.

      purepolicy management-access list

      Name            Type          Enabled  Capability  Aggregation Strategy  Resource Name      Resource Type
      <realm-policy>  admin-access  True     all         all-permissions       <customer1-realm>  realms

- Create a user linked to the policy.

      pureadmin create --access-policy <realm-policy> <psc-dedicated-user>

      Enter password:
      Retype password:
      Name                  Type   Access Policy
      <psc-dedicated-user>  local  <realm-policy>

- Sign in as the new user in the PSC Dedicated CLI.

- Create an API token.

      pureadmin create --api-token

Create the pure.json file

To integrate PX-CSI with PSC Dedicated, create a JSON configuration file named pure.json that contains information about your PSC Dedicated environment. Include management endpoints and the API token you generated.

- Management endpoints: URLs or IP addresses that PX-CSI uses to communicate with PSC Dedicated. In the PSC Dedicated dashboard, go to Settings > Network and note the IP addresses or hostnames of management interfaces (prefixed with vir, indicating virtual interfaces).

- API token: The token you generated in the previous section.

- Realm (secure multi-tenancy only): Realms define tenant boundaries. When multiple PSC Dedicated instances are attached to a cluster, specify a realm to isolate volumes per tenant.

- VLAN (only for VLAN binding): Specify the VLAN ID to which the host should be bound.

You must enter the PSC Dedicated endpoint details in the FlashArrays section of the pure.json file.

Use the information above to create the JSON file. Below are templates you can populate with your values:

PSC Dedicated without secure multi-tenancy:

    {
      "FlashArrays": [
        {
          "MgmtEndPoint": "<psc-dedicated-management-endpoint>",
          "APIToken": "<psc-dedicated-api-token>",
          "VLAN": "<vlan-id>"
        }
      ]
    }

PSC Dedicated with secure multi-tenancy:

    {
      "FlashArrays": [
        {
          "MgmtEndPoint": "<first-psc-dedicated-management-endpoint1>",
          "APIToken": "<first-psc-dedicated-api-token>",
          "Realm": "<first-psc-dedicated-realm>",
          "VLAN": "<vlan-id>"
        }
      ]
    }
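If you manage several PSC Dedicated instances, generating pure.json from structured input avoids hand-editing mistakes such as stray commas. The following is a minimal sketch, assuming only the keys shown in the templates (`MgmtEndPoint`, `APIToken`, `Realm`, `VLAN`); the function name and the lowercase input keys are hypothetical.

```python
import json


def build_pure_json(arrays):
    """Render pure.json text from a list of per-instance settings.

    Each item is a dict with `mgmt_endpoint` and `api_token`, plus optional
    `realm` (secure multi-tenancy) and `vlan` (VLAN binding).
    """
    entries = []
    for array in arrays:
        entry = {
            "MgmtEndPoint": array["mgmt_endpoint"],
            "APIToken": array["api_token"],
        }
        if array.get("realm"):
            entry["Realm"] = array["realm"]
        if array.get("vlan"):
            # The templates show the VLAN ID as a quoted string.
            entry["VLAN"] = str(array["vlan"])
        entries.append(entry)
    return json.dumps({"FlashArrays": entries}, indent=2)


# Example with placeholder values:
# print(build_pure_json([{"mgmt_endpoint": "<psc-dedicated-management-endpoint>",
#                         "api_token": "<psc-dedicated-api-token>"}]))
```

Optional keys are omitted rather than written as empty strings, so the output stays close to the templates for both the single-tenant and multi-tenant cases.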

(Optional) CSI topology feature

PX-CSI supports topology-aware storage provisioning. By specifying topology information (node, zone, or region), you can control where volumes are provisioned to meet availability, performance, and fault-tolerance requirements. See CSI topology.

To prepare your environment for topology-aware provisioning:

- Edit `pure.json` to define the topology for each PSC Dedicated instance. For details, see pure.json with CSI topology.

- Label your Kubernetes nodes with values that match the labels in `pure.json`. For example:

      kubectl label node <nodeName> topology.portworx.io/zone=zone-0
      kubectl label node <nodeName> topology.portworx.io/region=region-0

Add PSC Dedicated configuration to a Kubernetes secret

To enable PX-CSI to access the PSC Dedicated configuration, add pure.json to a Kubernetes secret named px-pure-secret:

- OpenShift Container Platform:

      oc create secret generic px-pure-secret --namespace <stc-namespace> --from-file=pure.json=<file path>

      secret/px-pure-secret created

- Other Kubernetes platforms:

      kubectl create secret generic px-pure-secret --namespace <stc-namespace> --from-file=pure.json=<file path>

      secret/px-pure-secret created
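If you prefer to keep the secret as a manifest (for example, in a GitOps workflow), the same secret can be described declaratively. The sketch below builds a manifest under that assumption: the function name is hypothetical, while the secret name `px-pure-secret` and the `pure.json` key come from the commands in this section. Kubernetes stores `data` values base64-encoded.

```python
import base64
import json


def build_secret_manifest(pure_json_text, namespace, name="px-pure-secret"):
    """Return a Secret manifest (as a dict) holding pure.json under the `pure.json` key."""
    encoded = base64.b64encode(pure_json_text.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",
        "data": {"pure.json": encoded},
    }


# Example: write the manifest as JSON, then apply it with `kubectl apply -f` or `oc apply -f`.
# with open("pure.json") as handle:
#     manifest = build_secret_manifest(handle.read(), "<stc-namespace>")
# print(json.dumps(manifest, indent=2))
```

Because the manifest embeds the API token, treat the generated file with the same care as pure.json itself.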

Volume attachment limits

PX-CSI supports up to 512 volume attachments per node when using PSC Dedicated, which communicates over the iSCSI transport. The effective limit depends on the Linux storage stack, host bus adapter (HBA), and driver configuration.

Before deploying PX-CSI, ensure that the operating system (OS) and HBAs are configured to support the number of attachments required for your workloads.

Use the following command to inspect the LUN limit on your nodes:

cat /sys/module/scsi_mod/parameters/max_luns
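As a rough pre-deployment check, the value reported by that file can be compared with the number of attachments you plan to run per node. This sketch is a simplification: it treats `max_luns` as one upper bound alongside the 512-attachment limit stated above and ignores HBA and driver limits, which you still need to confirm separately. The function names are hypothetical.

```python
from pathlib import Path

MAX_LUNS_PATH = Path("/sys/module/scsi_mod/parameters/max_luns")
PX_CSI_ATTACH_LIMIT = 512  # per-node limit stated in this section


def read_max_luns(path=MAX_LUNS_PATH):
    """Read the SCSI max_luns module parameter from sysfs."""
    return int(path.read_text().strip())


def effective_attach_limit(max_luns, px_csi_limit=PX_CSI_ATTACH_LIMIT):
    """Simplified upper bound: the smaller of the OS LUN limit and the PX-CSI limit."""
    return min(max_luns, px_csi_limit)


def supports_workload(required_attachments, max_luns):
    """Return True if the planned attachments per node fit under the simplified bound."""
    return required_attachments <= effective_attach_limit(max_luns)


# Example (run on a node):
# print(supports_workload(300, read_max_luns()))
```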

Verify the iSCSI connection with PSC Dedicated

If you use the iSCSI protocol, verify your setup:

- Discover iSCSI targets from a node:

      iscsiadm -m discovery -t st -p <flash-array-interface-endpoint>

      10.13.xx.xx0:3260,207 iqn.2010-06.com.purestorage:flasharray.xxxxxxx
      10.13.xx.xx1:3260,207 iqn.2010-06.com.purestorage:flasharray.xxxxxxx

- Verify that each node has a unique initiator:

      cat /etc/iscsi/initiatorname.iscsi

      InitiatorName=iqn.1994-05.com.redhat:xxxxx

- If initiator names are not unique, assign a new unique initiator name:

      echo "InitiatorName=$(/sbin/iscsi-iname)" > /etc/iscsi/initiatorname.iscsi

- Restart the iSCSI service to apply changes:

      systemctl restart iscsid
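Checking initiator names by eye becomes error-prone on larger clusters, for example when nodes were cloned from the same image. The sketch below assumes you have already collected the contents of /etc/iscsi/initiatorname.iscsi from each node (how you gather them is up to you); the function names are hypothetical.

```python
from collections import defaultdict


def parse_initiator_name(file_text):
    """Extract the IQN from the contents of initiatorname.iscsi, or None if absent."""
    for line in file_text.splitlines():
        line = line.strip()
        if line.startswith("InitiatorName="):
            return line.split("=", 1)[1].strip()
    return None


def find_duplicate_initiators(files_by_node):
    """Return {iqn: [nodes...]} for every initiator name used by more than one node."""
    nodes_by_iqn = defaultdict(list)
    for node, text in files_by_node.items():
        iqn = parse_initiator_name(text)
        if iqn is not None:
            nodes_by_iqn[iqn].append(node)
    return {iqn: sorted(nodes) for iqn, nodes in nodes_by_iqn.items() if len(nodes) > 1}
```

An empty result means every node reported a distinct initiator name; any entry in the result lists the nodes that need a new name and an `iscsid` restart.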

Once you set up PSC Dedicated, storage operations such as creating or resizing a PVC and taking snapshots work the same as on FlashArray. Refer to the FlashArray sections in this documentation for guidance on performing these tasks.