Install Portworx on Kubernetes with Pure Storage FlashArray

You can install Portworx with Pure Storage FlashArray as a cloud storage provider. This allows you to store your data on-premises with FlashArray while benefiting from Portworx cloud drive features, such as:

- Automatically provisioning block volumes

- Expanding a cluster by adding new drives or expanding existing ones

- Support for PX-Backup and Autopilot

Just like with other cloud providers, Portworx will create and manage the underlying storage pool volumes on the registered arrays.

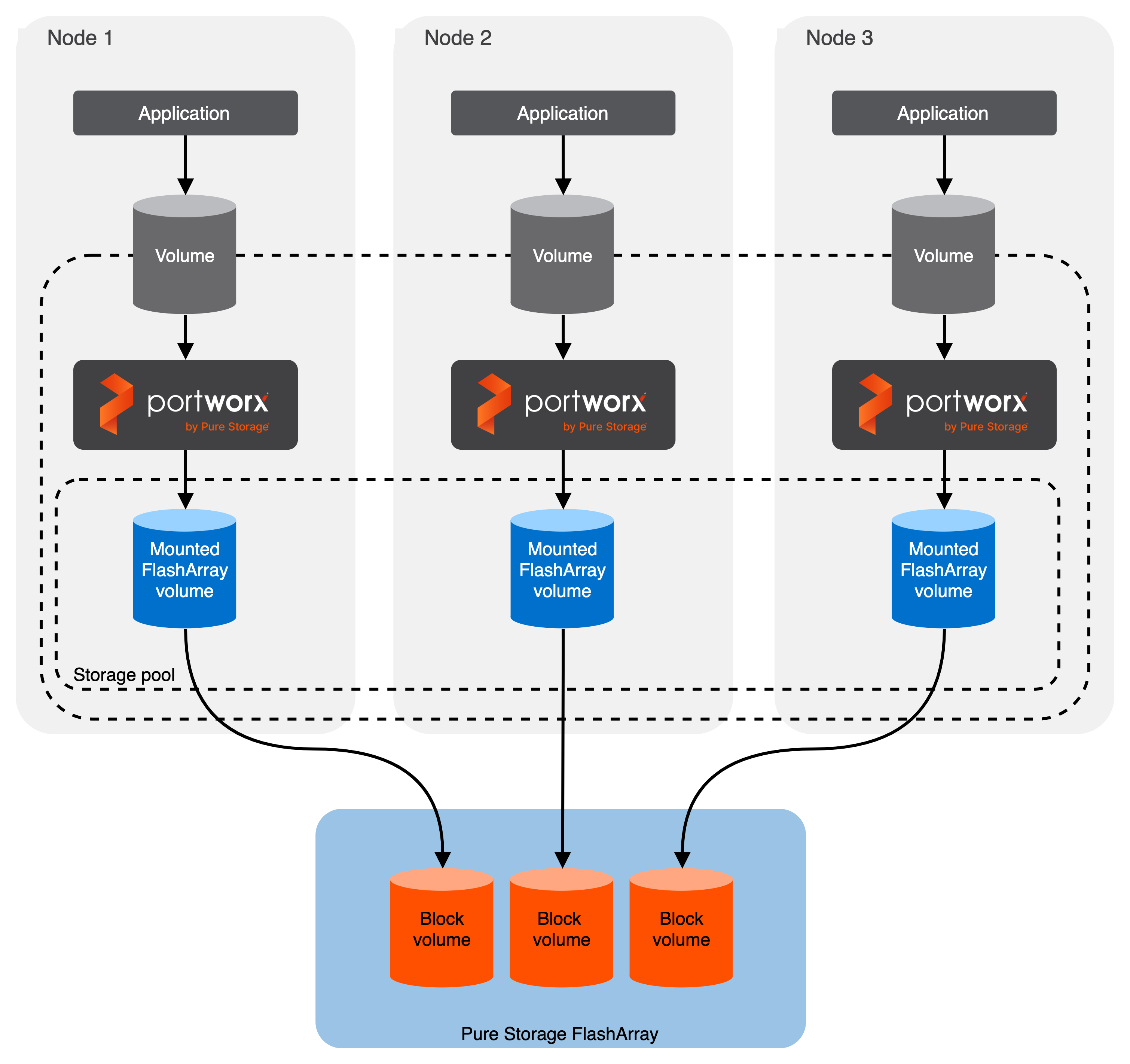

Architecture

- Portworx runs on each node and forms a storage pool based on configuration information provided in the storageCluster spec.

- When a user creates a PVC, Portworx provisions the volume from the storage pool.

- The PVCs consume space on the storage pool, and if space begins to run low, Portworx can add or expand drive space from FlashArray.

- If a node goes down for less than two minutes, Portworx reattaches its FlashArray volumes when the node recovers. If a node stays down for more than two minutes, a storageless node in the same zone takes over the volumes and assumes the identity of the downed storage node.

Prerequisites

- Have an on-premises Kubernetes cluster with FlashArray.

- FlashArray must be running Purity//FA version 4.8 or later. Refer to the Supported models and versions topic for more information.

- The FlashArray should be time-synced with the same time service as the Kubernetes cluster.

- Install the latest Linux multipath software package on your operating system. This package must include kpartx. Refer to the Linux recommended settings article of the Pure Storage documentation for recommendations.

- Both multipath and iSCSI, if being used, should have their services enabled in systemd so that they start after reboots.

- If using Fibre Channel, install the latest Fibre Channel initiator software for your operating system.

- If using iSCSI, install the latest iSCSI initiator software for your operating system.

- If using multiple NICs to connect to an iSCSI host, configure these interfaces using the steps in the Create Additional Interfaces (Optional) section on the Configuring Linux Host for iSCSI with FlashArray page.

- You must have Helm installed on the client machine. For information on how to install Helm, see Installing Helm.

- Review the Helm compatibility matrix and the configurable parameters.
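The requirement that the multipath and iSCSI services start after reboots can be checked with a short script. The following is a minimal sketch, not part of any Portworx tooling: `disabled_units` and `systemd_is_enabled` are hypothetical helpers, and the unit names `multipathd` and `iscsid` mentioned below are assumptions that vary by distribution.

```python
import subprocess


def systemd_is_enabled(unit):
    """Return True if `systemctl is-enabled --quiet <unit>` exits with status 0.

    systemd reports success for enabled units (and for a few related
    states such as static), and a non-zero status otherwise.
    """
    result = subprocess.run(
        ["systemctl", "is-enabled", "--quiet", unit],
        check=False,
    )
    return result.returncode == 0


def disabled_units(units, is_enabled=systemd_is_enabled):
    """Return the units from `units` that are not enabled to start at boot.

    `is_enabled` is injectable so the logic can be exercised without systemd.
    """
    return [unit for unit in units if not is_enabled(unit)]
```

On a node, calling `disabled_units(["multipathd", "iscsid"])` would return the names of any units that still need `systemctl enable`; an empty list means both are set to start after a reboot.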

Configure your physical environment

Before you install Portworx, ensure that your physical network is configured appropriately and that you meet the prerequisites. You must provide Portworx with your FlashArray configuration details during installation.

- Each FlashArray management IP address can be accessed by each node.

- Your cluster contains an up-and-running FlashArray with an existing dataplane connectivity layout (iSCSI or Fibre Channel).

- If you're using iSCSI, the storage node iSCSI initiators are on the same VLAN as the FlashArray iSCSI target ports.

- If you are using multiple network interface cards (NICs) to connect to an iSCSI host, then all of them must be accessible from the FlashArray management IP address.

- If you're using Fibre Channel, the storage node Fibre Channel WWNs have been correctly zoned to the FlashArray Fibre Channel WWN ports.

- You have an API token for a user on your FlashArray with at least storage_admin permissions. Check the documentation on your device for information on generating an API token.

(Optional) Set iSCSI interfaces on FlashArray

If you are using the iSCSI protocol, you can set its interfaces on FlashArray using the following steps:

- Run the following command to get the available iSCSI interfaces within your environment. You can use the output in the next step.

  iscsiadm -m iface

- Run the following command to specify which network interfaces on the FlashArray system are allowed to handle iSCSI traffic. Replace <interface-value> with the value you retrieved in the previous step:

  pxctl cluster options update --flasharray-iscsi-allowed-ifaces <interface-value>

Configure your software environment

Configuring your software environment involves preparing both the operating system and the underlying network and storage configurations.

Follow the instructions below to disable secure boot mode and configure the multipath.conf file appropriately. These configurations ensure that the system's software environment is properly set up to allow Portworx to interact correctly with the hardware components, like storage devices (using protocols such as iSCSI or Fibre Channel), and to function correctly within the network infrastructure.

Disable secure boot mode

Portworx requires the secure boot mode to be disabled to ensure it can operate without restrictions. Here's how to disable secure boot mode across different platforms:

- RHEL/CentOS

- VMware

For REHL/CentOS you can perform the following steps to check and disable the secure boot mode:

-

Check the status of secure boot mode:

/usr/bin/mokutil --sb-state -

If secure boot is enabled, disable it:

/usr/bin/mokutil --disable-validation -

Apply changes by rebooting your system:

reboot

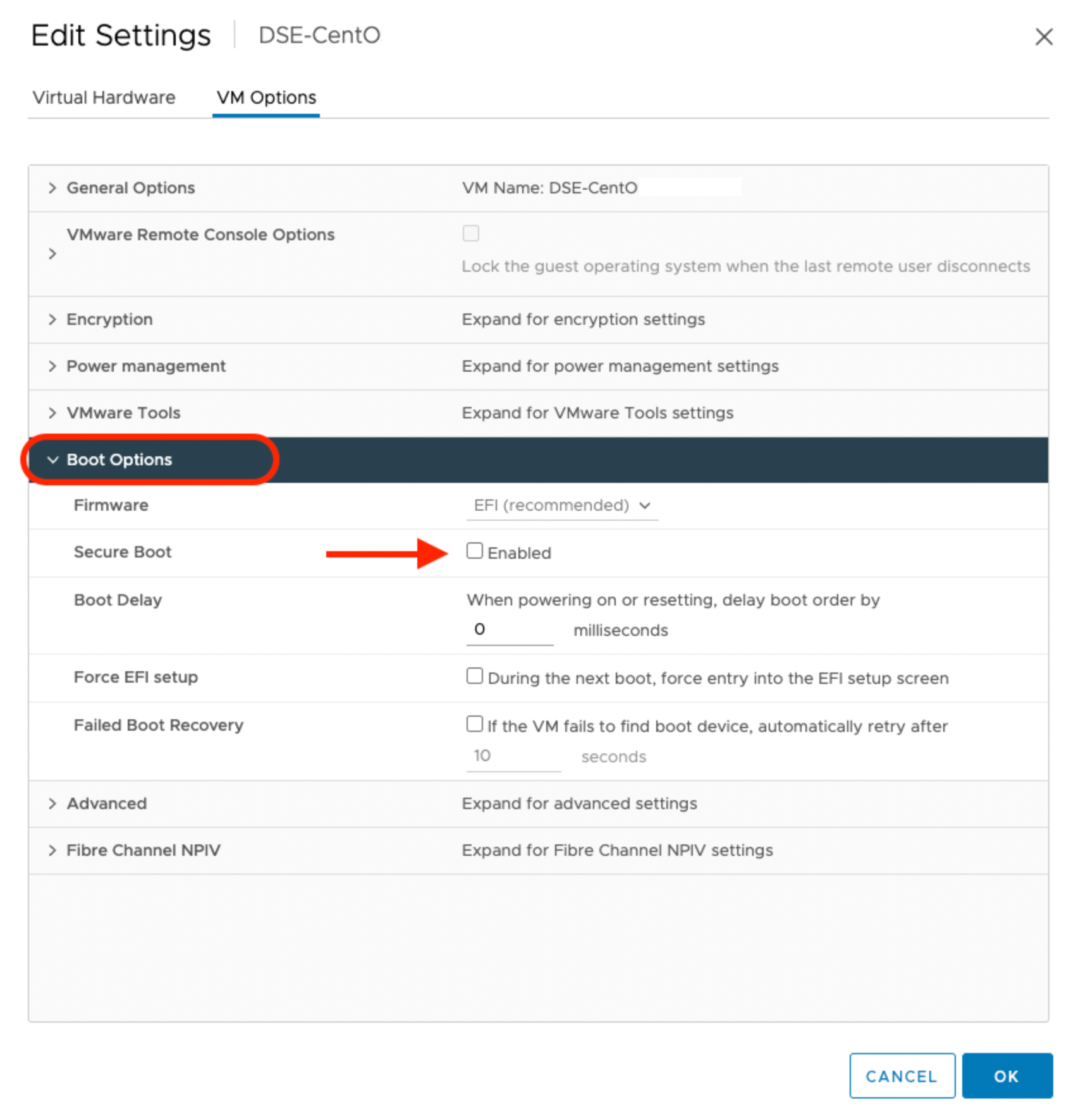

For VMware, navigate to the Edit Setting window of the virtual machine on which you are planning to deploy Portworx. Ensure that the checkbox against the Secure Boot option under VM Options is not selected, as shown in the following screenshot:

Verify the status of the secure boot mode

Run the following command to ensure that the secure boot mode is off:

/usr/bin/mokutil --sb-state

SecureBoot disabled
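If you want to run this verification across many nodes, the check can be scripted. The following is a minimal sketch that assumes the command prints a line reading "SecureBoot disabled" as in the output above; `secure_boot_disabled` and `read_sb_state` are hypothetical helper names, not part of mokutil or Portworx.

```python
import subprocess


def secure_boot_disabled(sb_state_output):
    """Return True if any line of the output reads 'SecureBoot disabled'."""
    return any(
        line.strip().lower() == "secureboot disabled"
        for line in sb_state_output.splitlines()
    )


def read_sb_state(mokutil_path="/usr/bin/mokutil"):
    """Run `mokutil --sb-state` and return its standard output as text."""
    result = subprocess.run(
        [mokutil_path, "--sb-state"],
        capture_output=True,
        text=True,
        check=False,
    )
    return result.stdout
```

On a node, `secure_boot_disabled(read_sb_state())` returning `False` would indicate that secure boot still needs to be turned off before installing Portworx.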

Configure the multipath.conf file

- For defaults:

  - FlashArray and Portworx do not support user-friendly names. Disable this option by setting user_friendly_names to no before installing Portworx on your cluster. This ensures Portworx and FlashArray use consistent device naming conventions.

  - Add polling_interval 10 as per the RHEL Linux recommended settings. This defines how often the system checks for path status updates.

- To prevent any interference from the multipathd service on Portworx volume operations, set the pxd device denylist rule.

Your multipath.conf file should resemble the following structure:

RHEL/CentOS:

defaults {
    user_friendly_names no
    find_multipaths on
    enable_foreign "^$"
    polling_interval 10
}

devices {
    device {
        vendor "NVME"
        product "Pure Storage FlashArray"
        path_selector "queue-length 0"
        path_grouping_policy group_by_prio
        prio ana
        failback immediate
        fast_io_fail_tmo 10
        user_friendly_names no
        no_path_retry 0
        features 0
        dev_loss_tmo 60
    }
    device {
        vendor "PURE"
        product "FlashArray"
        path_selector "service-time 0"
        hardware_handler "1 alua"
        path_grouping_policy group_by_prio
        prio alua
        failback immediate
        path_checker tur
        fast_io_fail_tmo 10
        user_friendly_names no
        no_path_retry 0
        features 0
        dev_loss_tmo 600
    }
}

blacklist_exceptions {
    property "(SCSI_IDENT_|ID_WWN)"
}

blacklist {
    devnode "^pxd[0-9]*"
    devnode "^pxd*"
    device {
        vendor "VMware"
        product "Virtual disk"
    }
}

Ubuntu:

defaults {
    user_friendly_names no
    find_multipaths on
}

devices {
    device {
        vendor "NVME"
        product "Pure Storage FlashArray"
        path_selector "queue-length 0"
        path_grouping_policy group_by_prio
        prio ana
        failback immediate
        fast_io_fail_tmo 10
        user_friendly_names no
        no_path_retry 0
        features 0
        dev_loss_tmo 60
    }
    device {
        vendor "PURE"
        product "FlashArray"
        path_selector "service-time 0"
        hardware_handler "1 alua"
        path_grouping_policy group_by_prio
        prio alua
        failback immediate
        path_checker tur
        fast_io_fail_tmo 10
        user_friendly_names no
        no_path_retry 0
        features 0
        dev_loss_tmo 600
    }
}

blacklist {
    devnode "^pxd[0-9]*"
    devnode "^pxd*"
    device {
        vendor "VMware"
        product "Virtual disk"
    }
}
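Before installing, it can help to confirm that a node's multipath.conf satisfies the two requirements described above: user-friendly names disabled in the defaults section, and a pxd rule in the blacklist section. The following is a minimal sketch using a simple brace-matching scan rather than a full multipath.conf parser; `section_body` and `check_multipath_conf` are hypothetical helpers, and the sketch assumes that quoted values contain no braces.

```python
import re


def section_body(conf_text, name):
    """Return the text inside the first section called `name`, or None."""
    match = re.search(r"^\s*" + re.escape(name) + r"\s*\{", conf_text, re.MULTILINE)
    if match is None:
        return None
    depth = 1
    start = match.end()
    # Walk forward until the brace that opened the section is closed.
    for index in range(start, len(conf_text)):
        char = conf_text[index]
        if char == "{":
            depth += 1
        elif char == "}":
            depth -= 1
            if depth == 0:
                return conf_text[start:index]
    return None


def check_multipath_conf(conf_text):
    """Return a list of problems found; an empty list means both checks pass."""
    problems = []
    defaults = section_body(conf_text, "defaults") or ""
    if not re.search(r"^\s*user_friendly_names\s+no\s*$", defaults, re.MULTILINE):
        problems.append("defaults: user_friendly_names is not set to no")
    blacklist = section_body(conf_text, "blacklist") or ""
    if not re.search(r'^\s*devnode\s+"\^pxd', blacklist, re.MULTILINE):
        problems.append("blacklist: no devnode rule for pxd devices")
    return problems
```

Passing the contents of /etc/multipath.conf to `check_multipath_conf` returns a list of human-readable problems. The check is deliberately narrow and does not validate the Pure-specific device entries.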

Deploy Portworx

Once you've configured your environment and ensured that you meet the prerequisites, you're ready to deploy Portworx.

Create a JSON configuration file

Create a JSON file named pure.json that contains your FlashArray information. This file contains the essential FlashArray configuration information, such as management endpoints and API tokens, that Portworx needs to communicate with and manage the FlashArray storage devices:

{

"FlashArrays": [

{

"MgmtEndPoint": "<first-fa-management-endpoint>",

"APIToken": "<first-fa-api-token>"

}

]

}

You can add FlashBlade configuration information to this file if you're configuring both FlashArray and FlashBlade together. Refer to the JSON file reference for more information.
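When several arrays are involved, generating pure.json from a list can reduce copy-and-paste mistakes. The following is a minimal sketch that uses only the FlashArrays, MgmtEndPoint, and APIToken keys shown above; `build_pure_json` is a hypothetical helper, and the endpoint and token in the usage note are placeholders.

```python
import json


def build_pure_json(arrays):
    """Build the pure.json text from (management endpoint, API token) pairs."""
    entries = []
    for endpoint, token in arrays:
        # Reject empty values early instead of writing an unusable file.
        if not endpoint or not token:
            raise ValueError("each FlashArray needs an endpoint and an API token")
        entries.append({"MgmtEndPoint": endpoint, "APIToken": token})
    return json.dumps({"FlashArrays": entries}, indent=2)
```

For example, `build_pure_json([("<first-fa-management-endpoint>", "<first-fa-api-token>")])` produces the same structure as the file above, and the result can be written to pure.json with a normal file write.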

Create a Kubernetes Secret

The specific name px-pure-secret is required so that Portworx can correctly identify and access the Kubernetes secret upon startup. This secret securely stores the FlashArray configuration details and allows Portworx to access this information within the Kubernetes environment.

Enter the following kubectl create command to create a Kubernetes secret called px-pure-secret:

kubectl create secret generic px-pure-secret --namespace <stc-namespace> --from-file=pure.json=<file path>

secret/px-pure-secret created
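If you manage cluster resources as manifests rather than with imperative commands, an equivalent Secret can be rendered as a file and applied with kubectl apply -f. The following is a minimal sketch of a standard Opaque Secret whose data key is pure.json; `secret_manifest` and `secret_manifest_json` are hypothetical helpers, and the namespace is whatever you would pass as <stc-namespace>.

```python
import base64
import json


def secret_manifest(pure_json_text, namespace):
    """Return a Secret manifest (as a dict) that stores pure.json."""
    # Values under a Secret's `data` field must be base64-encoded.
    encoded = base64.b64encode(pure_json_text.encode("utf-8")).decode("ascii")
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "px-pure-secret", "namespace": namespace},
        "type": "Opaque",
        "data": {"pure.json": encoded},
    }


def secret_manifest_json(pure_json_text, namespace):
    """Render the manifest as JSON text, which kubectl accepts like YAML."""
    return json.dumps(secret_manifest(pure_json_text, namespace), indent=2)
```

The rendered manifest contains the API token in base64 form only, which is an encoding and not encryption, so treat the file as sensitive.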

Install Portworx using Helm

By default, Portworx is installed in the kube-system namespace. If you want to install it in a different namespace, use the -n <namespace> flag. For this example we will deploy Portworx in the portworx namespace.

- To install Portworx, add the portworx/helm repository to your local Helm repository.

  helm repo add portworx https://raw.githubusercontent.com/portworx/helm/master/stable/

  "portworx" has been added to your repositories

- Verify that the repository has been successfully added.

  helm repo list

  NAME      URL
  portworx  https://raw.githubusercontent.com/portworx/helm/master/stable/

- Create a px_install_values.yaml file and add the following parameters.

  openshiftInstall: true
  drives: size=150
  envs:
  - name: PURE_FLASHARRAY_SAN_TYPE
    value: ISCSI

- In many cases, you may want to customize Portworx configurations, such as enabling monitoring or specifying specific storage devices. You can pass the custom configuration to the px_install_values.yaml file.

  Note:

  - You can refer to the Portworx Helm chart parameters for a list of configurable parameters and to the values.yaml file for a configuration file template.

  - The default clusterName is mycluster. However, it's recommended to change it to a unique identifier to avoid conflicts in multi-cluster environments.

- Install Portworx Enterprise using the following command:

  Note: To install a specific version of the Helm chart, you can use the --version flag. Example: helm install <px-release> portworx/portworx --version <helm-chart-version>.

  helm install <px-release> portworx/portworx -n <portworx> -f px_install_values.yaml --debug

- You can check the status of your Portworx installation.

  helm status <px-release> -n <portworx>

  NAME: px-release
  LAST DEPLOYED: Thu Sep 26 05:53:17 2024
  NAMESPACE: portworx
  STATUS: deployed
  REVISION: 1
  TEST SUITE: None
  NOTES:
  Your Release is named "px-release"
  Portworx Pods should be running on each node in your cluster.
  Portworx would create a unified pool of the disks attached to your Kubernetes nodes.
  No further action should be required and you are ready to consume Portworx Volumes as part of your application data requirements.

Update Portworx configuration using Helm

If you need to update the configuration of Portworx, you can modify the parameters in the px_install_values.yaml file specified during the Helm installation. This allows you to change the values of configuration parameters.

- Create or edit the px_install_values.yaml file to update the desired parameters.

  vim px_install_values.yaml

  monitoring:
    telemetry: false
    grafana: true

- Apply the changes using the following command:

  helm upgrade <px-release> portworx/portworx -n <portworx> -f px_install_values.yaml

  Release "px-release" has been upgraded. Happy Helming!
  NAME: px-release
  LAST DEPLOYED: Thu Sep 26 06:42:20 2024
  NAMESPACE: portworx
  STATUS: deployed
  REVISION: 2
  TEST SUITE: None
  NOTES:
  Your Release is named "px-release"
  Portworx Pods should be running on each node in your cluster.
  Portworx would create a unified pool of the disks attached to your Kubernetes nodes.
  No further action should be required and you are ready to consume Portworx Volumes as part of your application data requirements.

- Verify that the new values have taken effect.

  helm get values <px-release> -n <portworx>

  You should see all the custom configurations passed using the px_install_values.yaml file.