Concepts

Overview

Apache Kafka is an open-source, distributed event streaming platform. It facilitates high-throughput, scalable messaging architectures and provides native partitioning and replication. With PDS, users can leverage core capabilities of Kubernetes to deploy and manage their Kafka clusters using a cloud-native, container-based model.

PDS follows the release lifecycle of Apache Kafka, meaning new releases are available on PDS shortly after they become generally available (GA). Likewise, older versions of Kafka are removed once they reach end-of-life. The list of currently supported Kafka versions can be found here.

Like all data services in PDS, Kafka deployments run within Kubernetes. PDS includes a component called the PDS Deployments Operator, which manages the deployment of all PDS data services, including Kafka. The operator extends the functionality of Kubernetes by implementing a custom resource called kafka. The kafka resource type represents a Kafka cluster, so standard Kubernetes tools can be used to manage Kafka clusters, including scaling, monitoring, and upgrading.

You can learn more about the PDS architecture here.

Clustering

Kafka is a distributed system meant to be run as a cluster of individual nodes, called brokers. This allows Kafka to be deployed in a fault-tolerant, highly available manner.

Although PDS allows Kafka to be deployed with any number of nodes, high availability can only be achieved when running with three or more nodes. Smaller clusters should only be considered in development environments.

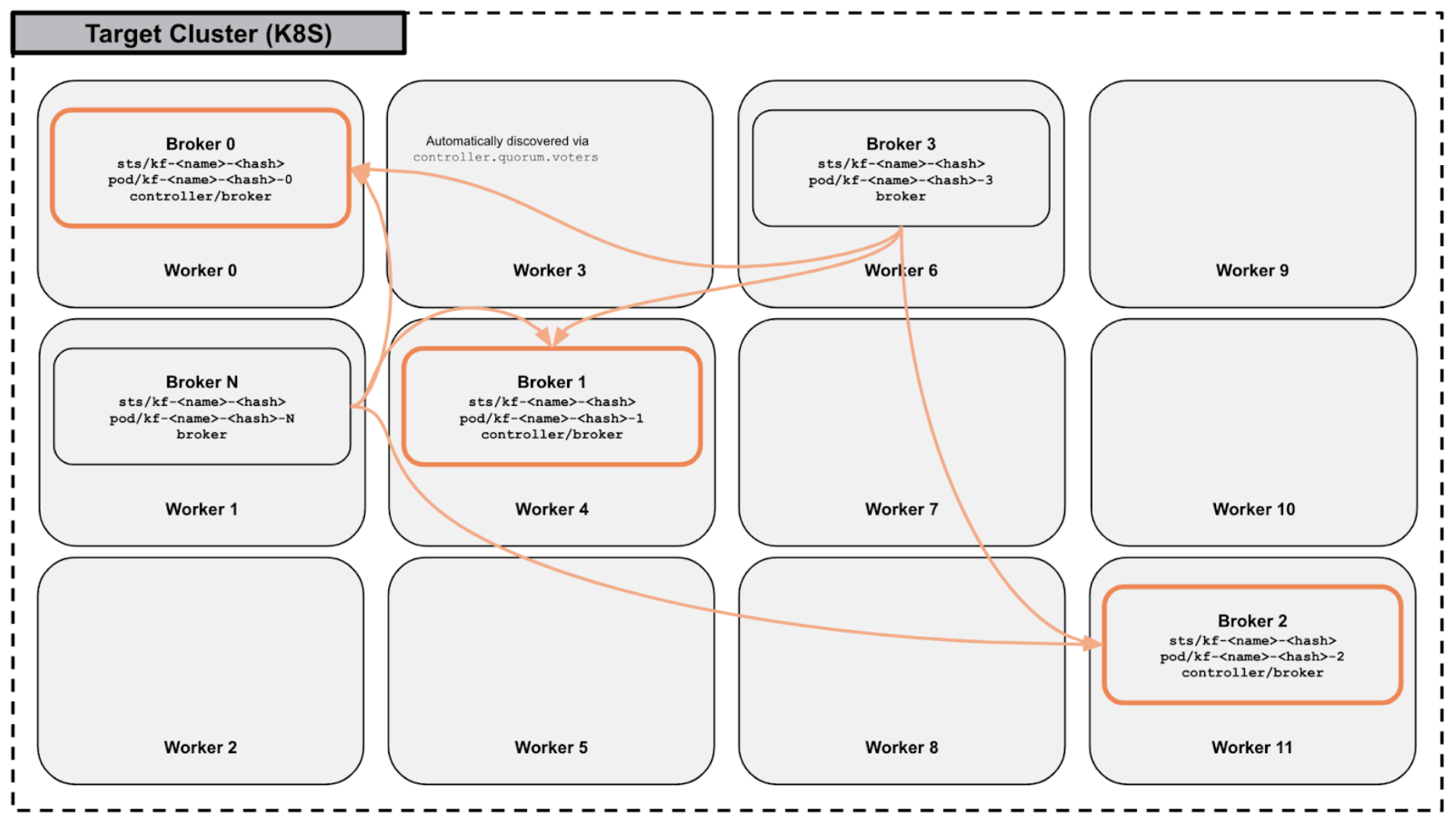

When deployed as a multi-node cluster, individual brokers, deployed as pods within a statefulSet, automatically discover each other to form a cluster. Node discovery within a Kafka cluster is also automatic when pods are deleted and recreated or when additional nodes are added to the cluster via horizontal scaling.

PDS leverages the partition tolerance of Kafka by spreading Kafka nodes across Kubernetes worker nodes when possible. PDS uses Stork, in combination with Kubernetes storageClasses, to intelligently schedule pods. By provisioning storage from different worker nodes, and then scheduling pods to be hyper-converged with the volumes, PDS deploys Kafka clusters in a way that maximizes fault tolerance, even if entire worker nodes or availability zones are impacted.

Refer to the PDS architecture to learn more about Stork and scheduling.

Metadata

PDS allows you to deploy Kafka clusters that use KRaft for metadata management.

KRaft

Clusters deployed without the explicit configuration of a backing ZooKeeper cluster are automatically configured to use KRaft for metadata management.

KRaft-based Kafka clusters that have three or more brokers will have exactly three brokers that also participate as controllers. In clusters with fewer than three brokers, all brokers participate as controllers.
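The controller rule above can be written as a short calculation. The sketch below is illustrative only: the function names are hypothetical, and the failure-tolerance figure relies on the general property that a Raft quorum needs a majority of controllers, which is not something PDS documents here.

```python
def controller_count(broker_count: int) -> int:
    """Brokers that also act as KRaft controllers, following the rule above."""
    if broker_count < 1:
        raise ValueError("a cluster needs at least one broker")
    return min(broker_count, 3)


def tolerated_controller_failures(controllers: int) -> int:
    """Controllers that can fail while a majority quorum still exists."""
    return (controllers - 1) // 2


for brokers in (1, 2, 3, 5):
    controllers = controller_count(brokers)
    print(
        f"{brokers} broker(s): {controllers} controller(s), "
        f"tolerates {tolerated_controller_failures(controllers)} controller failure(s)"
    )
```

Under these assumptions, only clusters with three or more brokers can lose a controller and keep a metadata quorum, which is consistent with the three-node recommendation for high availability.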

Replication

Application Replication

Kafka can natively distribute data over brokers in a cluster through a process known as partitioning.

Both the number of partitions and the number of partition replicas can be configured on a per-topic basis. Commonly, users choose a replication factor of three to ensure that Kafka will replicate each partition across three nodes in the cluster.

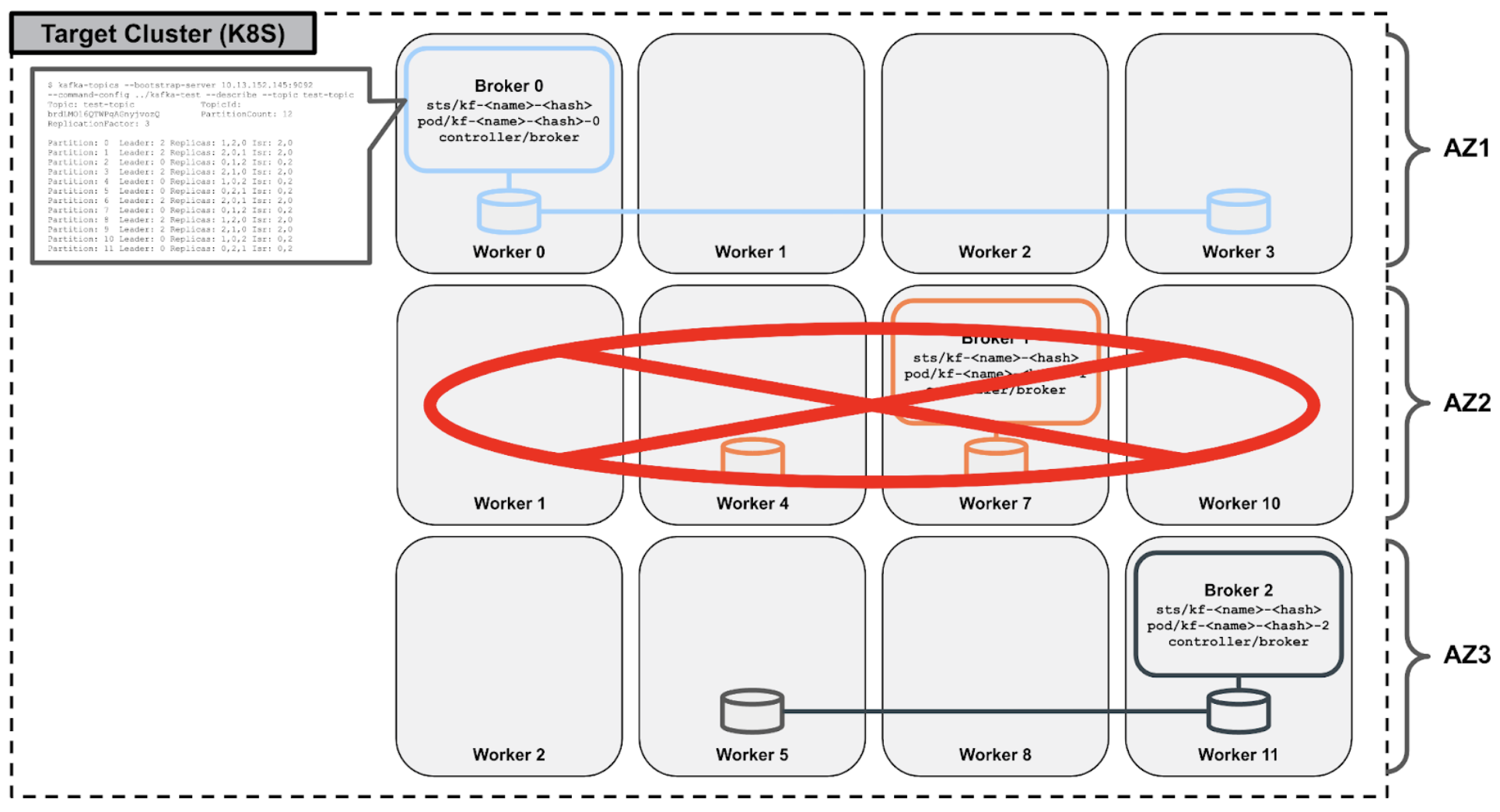
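To make the relationship between partitions, replicas, and brokers concrete, the following is a simplified sketch of how partition replicas could be laid out across brokers. It uses a plain round-robin placement; Kafka's own assignment logic differs in detail (for example, it takes racks into account), so treat the function and the broker IDs as hypothetical.

```python
def assign_replicas(broker_ids, partitions, replication_factor):
    """Place each partition's replicas on consecutive brokers, round-robin."""
    if replication_factor > len(broker_ids):
        # A partition cannot have more replicas than there are brokers.
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for partition in range(partitions):
        assignment[partition] = [
            broker_ids[(partition + offset) % len(broker_ids)]
            for offset in range(replication_factor)
        ]
    return assignment


# Hypothetical three-broker cluster, topic with three partitions, replication factor 3.
plan = assign_replicas([0, 1, 2], partitions=3, replication_factor=3)
for partition, replicas in plan.items():
    print(f"partition {partition}: replicas on brokers {replicas}")
```

With a replication factor of three on three brokers, every broker holds a copy of every partition, and the first replica in each list (the preferred leader) falls on a different broker.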

Application replication is used in Kafka to accomplish load balancing and high availability. With data replicated to multiple nodes, more nodes are able to respond to requests for data. Likewise, in the event of node failure, the cluster may still be available to serve client requests, depending on the configuration of the client.

Storage Replication

PDS takes data replication further. Storage in PDS is provided by Portworx Enterprise, which itself allows data to be replicated at the storage level.

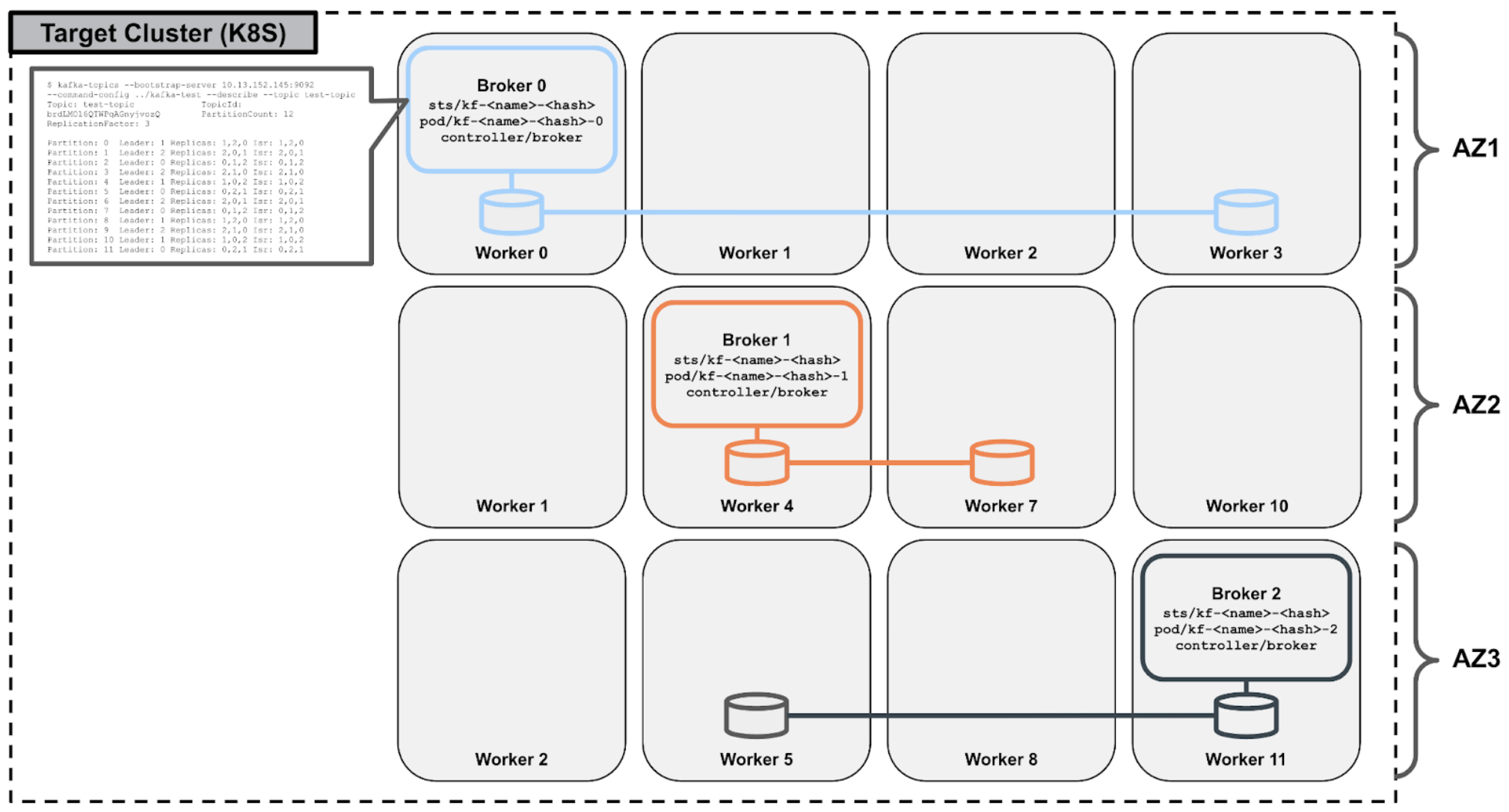

Each Kafka node in PDS is configured to store data to a persistentVolume which is provisioned by Portworx Enterprise. These Portworx volumes can, in turn, be configured to replicate data to multiple volume replicas. It is recommended to use two volume replicas in PDS in combination with application replication in Kafka.

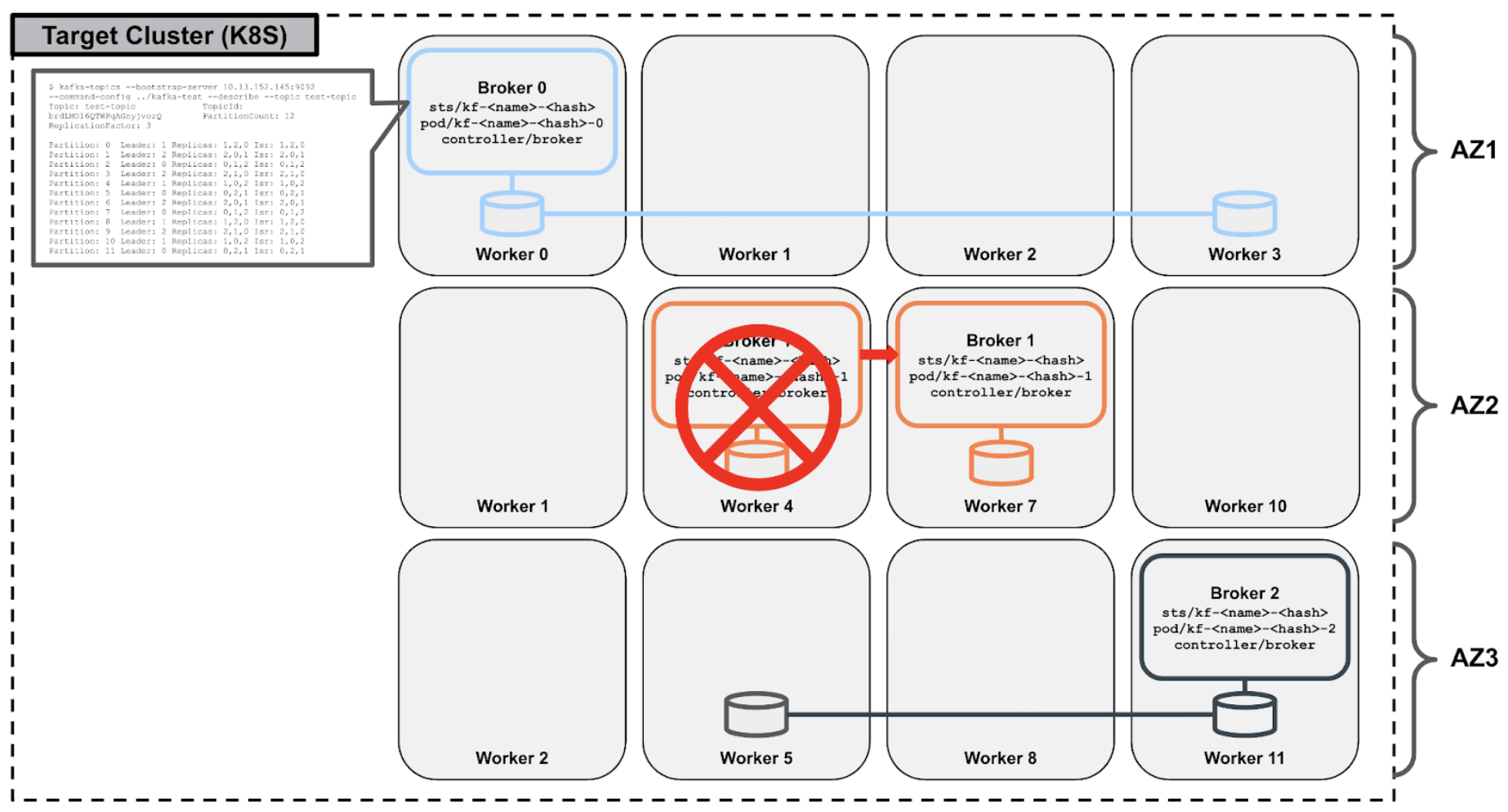

While the additional level of replication results in write amplification, storage-level replication solves a different problem than application-level replication. Specifically, storage-level replication reduces the amount of downtime in failure scenarios. That is, it lowers the achievable Recovery Time Objective (RTO).
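The write amplification mentioned above is simple arithmetic: every record is stored once per Kafka replica, and each of those copies is stored once per Portworx volume replica. The sketch below works through the recommended combination; the function names are hypothetical, and the calculation ignores compression, indexes, and filesystem overhead.

```python
def physical_copies(kafka_replication_factor: int, volume_replicas: int) -> int:
    """Total stored copies of each record across both replication layers."""
    if kafka_replication_factor < 1 or volume_replicas < 1:
        raise ValueError("both replication settings must be at least 1")
    return kafka_replication_factor * volume_replicas


def gib_written(ingested_gib: float, kafka_replication_factor: int, volume_replicas: int) -> float:
    """Approximate GiB written to disk for a given amount of ingested data."""
    return ingested_gib * physical_copies(kafka_replication_factor, volume_replicas)


# Topic replication factor 3 combined with 2 Portworx volume replicas.
print(physical_copies(3, 2))
print(gib_written(100, 3, 2))
```

In this example, a topic replication factor of three with two volume replicas yields six stored copies, so 100 GiB of ingested data corresponds to roughly 600 GiB written.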

Portworx volume replicas ensure that data are replicated to different Kubernetes worker nodes or even different availability zones. This maximizes the ability of the Kubernetes scheduler to place Kafka pods.

For example, in cases where Kubernetes worker nodes are unschedulable, pods can be scheduled on other worker nodes where data already exist. What’s more, pods can start and serve traffic immediately, without waiting for Kafka to replicate data to the pod.

Reference Architecture

For illustrative purposes, consider the following availability zone-aware Kafka and Portworx storage topology:

A host and/or storage failure results in the immediate rescheduling of the Kafka broker to a different worker node within the same availability zone. During rescheduling, Stork can intelligently select a worker node that contains a replica of the broker’s underlying Portworx volume, maintaining full topic replication while ensuring hyperconvergence for optimal performance.

Additionally, in the event that an entire availability zone is unavailable, Kafka’s native partition replication capability ensures that client applications are not impacted.

Configuration

Broker configurations can be tuned by specifying setting-specific environment variables within the deployment’s application configuration template.

You can learn more about application configuration templates here. The list of all broker configurations that may be overridden is itemized in the Kafka service’s reference documentation here.

Scaling

Because databases are easy to deploy and manage in PDS, it is common to deploy many of them. Likewise, because scaling is easy in PDS, it is common to start with smaller clusters and add resources when needed. PDS supports both vertical scaling (CPU/memory) and horizontal scaling (nodes) of Kafka clusters.

Vertical Scaling

Vertical scaling refers to the process of adding hardware resources to (scaling up) or removing hardware resources from (scaling down) database nodes. In the context of Kubernetes and PDS, these hardware resources are virtualized CPU and memory.

PDS allows Kafka brokers to be dynamically reprovisioned with additional or reduced CPU and/or memory. These changes are applied in a rolling fashion across each of the nodes in the Kafka cluster. For multi-node Kafka clusters with replicated topics, this operation is non-disruptive to client applications.

Horizontal Scaling

Horizontal scaling refers to the process of adding database nodes to (scaling out) or removing database nodes from (scaling in) a cluster. Currently, PDS supports only scaling out of clusters. This is accomplished by adding pods to the existing Kafka cluster by updating the replica count of the statefulSet.

The addition of nodes is not accompanied by the rebalancing of existing topics. New topics created after the addition of nodes will automatically generate partition assignments that make use of the new brokers. For existing topics, however, users are encouraged to manually redistribute partitions using the upstream kafka-reassign-partitions.sh utility.
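As a rough illustration of a manual redistribution, the sketch below builds a reassignment plan in the JSON format that kafka-reassign-partitions.sh accepts through its --reassignment-json-file option. The topic name, partition count, and broker IDs are hypothetical, and the round-robin placement is only one possible layout; the utility's own --generate mode can also propose a plan.

```python
import json


def build_reassignment(topic, partition_count, replication_factor, broker_ids):
    """Spread a topic's partitions round-robin over the given brokers."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    partitions = []
    for partition in range(partition_count):
        replicas = [
            broker_ids[(partition + offset) % len(broker_ids)]
            for offset in range(replication_factor)
        ]
        partitions.append({"topic": topic, "partition": partition, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


# Hypothetical case: topic "orders" with 6 partitions, cluster scaled from 3 to 5 brokers.
plan = build_reassignment("orders", 6, 3, [0, 1, 2, 3, 4])
print(json.dumps(plan, indent=2))
```

The printed JSON could be saved to a file and passed to the utility with --reassignment-json-file and --execute. Because reassignment moves data between brokers, review any plan before applying it.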

Connectivity

PDS manages pod-specific services whose type is determined based on user input (currently, only LoadBalancer and ClusterIP types are supported). This ensures a stable IP address beyond the lifecycle of the pod. For each of these services, an accompanying DNS record will be managed automatically by PDS.

Connection information provided through the PDS UI reflects this by providing users with an array of broker endpoints. Though clients need only connect to one broker to bootstrap connection information for the entire cluster, specifying all individual broker endpoints mitigates connectivity issues if any individual broker is unavailable.
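Kafka clients take the list of brokers as a single comma-separated bootstrap.servers value. The sketch below shows one way to turn an array of endpoints into that value; the hostnames and port are placeholders, not actual PDS endpoints, and the helper name is hypothetical.

```python
def bootstrap_servers(endpoints):
    """Join host:port endpoints into a bootstrap.servers value, dropping duplicates."""
    cleaned = []
    for endpoint in endpoints:
        endpoint = endpoint.strip()
        if not endpoint:
            continue
        host, separator, port = endpoint.rpartition(":")
        if not separator or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {endpoint!r}")
        if endpoint not in cleaned:
            cleaned.append(endpoint)
    if not cleaned:
        raise ValueError("at least one broker endpoint is required")
    return ",".join(cleaned)


# Placeholder endpoints; copy the real ones from the PDS UI.
servers = bootstrap_servers([
    "broker-0.example.internal:9092",
    "broker-1.example.internal:9092",
    "broker-2.example.internal:9092",
])
print(f"bootstrap.servers={servers}")
```

Listing every broker means the client can still bootstrap when the first endpoint in the list is unreachable.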

By default, Kafka cluster instances deployed via PDS are configured with SASL/PLAIN authentication. Administrators, producers, and consumers must therefore be configured with the following properties:

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="xxx" password="yyy";

Instance-specific authentication information, including username and password, can be retrieved from the PDS UI within the instance’s connection details page.
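For clients and command-line tools that read a properties file, the three settings above can be generated from the retrieved credentials. The following is a minimal sketch: the function name and output file name are hypothetical, and it deliberately rejects credentials containing quotes or backslashes rather than attempting to escape them.

```python
def render_client_properties(username: str, password: str) -> str:
    """Render the SASL/PLAIN client settings as properties-file text."""
    for value in (username, password):
        if '"' in value or "\\" in value:
            # Escaping rules are out of scope for this sketch.
            raise ValueError("credentials with quotes or backslashes are not handled here")
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{username}" password="{password}";'
    )
    lines = [
        "security.protocol=SASL_PLAINTEXT",
        "sasl.mechanism=PLAIN",
        f"sasl.jaas.config={jaas}",
    ]
    return "\n".join(lines) + "\n"


# Placeholder credentials; use the values shown in the PDS UI.
text = render_client_properties("xxx", "yyy")
print(text, end="")
# To persist it: open("client.properties", "w").write(text)
```

A file like this can then be supplied to Kafka's administrative tools, for example through the --command-config option of kafka-topics.sh.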

Endpoints

| Service Name | Details |
|---|---|
| `kf-<name>-<namespace>-<pod-id>-vip` | Endpoint for each pod |
| `kf-<name>-<namespace>-rr` | Round-robin endpoint to all pods |

Backups

PDS does not support backups with Kafka. Users seeking to back up log messages ingested into Kafka may consider using one or more sink connectors to replicate data to alternative data stores, such as S3 or HDFS.

Monitoring

PDS collects metrics exposed via Kafka’s JMX server component and dispatches them to the PDS control plane. While the PDS UI includes dashboards that report on a subset of these metrics to display high-level health monitors, all collected metrics are queryable via a Prometheus endpoint. You can learn more about integrating the PDS control plane’s Prometheus endpoint into your existing monitoring environment here. The list of all Kafka metrics collected and made available by PDS is itemized in the Kafka service’s reference documentation here.