Monitor Clusters on Kubernetes

To ensure your storage infrastructure's health, performance, and reliability, it's crucial to monitor your Portworx cluster. The monitoring approach varies depending on your deployment environment. For most setups, Portworx's integrated Prometheus and Grafana deployment runs by default.

Monitoring your Portworx cluster in a Kubernetes environment involves leveraging technologies like Prometheus, Alertmanager, and Grafana. These tools help in collecting data, managing alerts, and visualizing metrics.

- Prometheus: Collects metrics, which are essential for Autopilot to identify and respond to conditions like storage capacity issues, enabling automatic PVC expansion and storage pool scaling.

- Alertmanager: Manages alerts, integrating with Autopilot for timely notifications about critical cluster conditions.

- Grafana: Visualizes data from Prometheus in an easy-to-understand format, aiding in quick decision-making for cluster management.

Each tool plays a vital role in ensuring efficient, automated monitoring and response within your cluster.

By default, Portworx deploys PX Prometheus for monitoring. Starting with Operator version 25.5.0, you can also use an external Prometheus instance.

Configure Prometheus

You can monitor your Portworx cluster using Prometheus. Follow the steps for the Prometheus instance you want to use: PX Prometheus or an external Prometheus.

PX Prometheus

- Set spec.monitoring.prometheus.enabled: true in the StorageCluster spec.

- Verify that Prometheus pods are running by entering the following command in the namespace where you deployed Portworx:

    kubectl -n kube-system get pods -A | grep -i prometheus

    kube-system   prometheus-px-prometheus-0                2/2   Running   0   59m
    kube-system   px-prometheus-operator-59b98b5897-9nwfv   1/1   Running   0   60m

- Verify that the Prometheus px-prometheus and prometheus-operated services exist:

    kubectl -n kube-system get service | grep -i prometheus

    prometheus-operated   ClusterIP   None           <none>   9090/TCP   63m
    px-prometheus         ClusterIP   10.99.61.133   <none>   9090/TCP   63m
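The pod check above can also be scripted. The following is a minimal sketch, not part of Portworx: the function name not_ready_pods is hypothetical, and it assumes the column layout shown in the sample output (namespace, name, ready count, status). It reports any Prometheus pod that is not Running or not fully ready.

```python
def not_ready_pods(kubectl_output: str, name_filter: str = "prometheus") -> list:
    """Return names of matching pods that are not Running or not fully ready.

    Assumes the column layout of `kubectl get pods -A`:
    NAMESPACE NAME READY STATUS RESTARTS AGE
    """
    problems = []
    for line in kubectl_output.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[0] == "NAMESPACE":
            continue  # skip blank lines and the header row
        _namespace, name, ready, status = fields[:4]
        if name_filter not in name.lower():
            continue
        ready_count, _, total = ready.partition("/")
        if status != "Running" or ready_count != total:
            problems.append(name)
    return problems


# Sample output copied from the verification step.
sample = """\
kube-system prometheus-px-prometheus-0 2/2 Running 0 59m
kube-system px-prometheus-operator-59b98b5897-9nwfv 1/1 Running 0 60m
"""
print(not_ready_pods(sample))  # prints [] because both pods are ready
```

In practice you would pass in the text captured from the kubectl command rather than a hard-coded sample.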

External Prometheus

To monitor Portworx using an external Prometheus instance, disable PX Prometheus and enable exportMetrics.

- In the StorageCluster spec, configure the following:

    spec:
      monitoring:
        prometheus:
          enabled: false
          exportMetrics: true

- Ensure that a ServiceMonitor named portworx exists in the namespace where you deployed Portworx:

    kubectl get servicemonitor -n <portworx>

    NAME       AGE
    portworx   18h

- Ensure that your external Prometheus instance is configured to discover ServiceMonitor resources across namespaces. In the Prometheus CRD, set the following:

    serviceMonitorNamespaceSelector: {}

- Verify that the Prometheus Operator is running and scraping targets:

    kubectl get pods -n <external-prometheus-namespace>

After configuring an external Prometheus, you must specify its endpoint in the Autopilot configuration. For more information, see Autopilot Install and Setup.

Set up Alertmanager

Prometheus Alertmanager handles alerts sent from the Prometheus server based on rules you set. If any Prometheus rule is triggered, Alertmanager sends a corresponding notification to the specified receivers. You can configure these receivers using an Alertmanager config file. Perform the following steps to configure and enable Alertmanager:

- Create a valid Alertmanager configuration file and name it alertmanager.yaml. The following is a sample Alertmanager configuration; the settings used in your environment may be different:

    global:
      # The smarthost and SMTP sender used for mail notifications.
      smtp_smarthost: 'smtp.gmail.com:587'
      smtp_from: 'username@company.com'
      smtp_auth_username: "username@company.com"
      smtp_auth_password: 'xyxsy'
    route:
      group_by: [alertname]
      # Send all notifications to me.
      receiver: email-me
    receivers:
    - name: email-me
      email_configs:
      - to: username@company.com
        from: username@company.com
        smarthost: smtp.gmail.com:587
        auth_username: "username@company.com"
        auth_identity: "username@company.com"
        auth_password: "username@company.com"

- Create a secret called alertmanager-portworx in the same namespace as your StorageCluster object:

    kubectl -n kube-system create secret generic alertmanager-portworx --from-file=alertmanager.yaml=alertmanager.yaml

- Edit your StorageCluster object to enable Alertmanager:

    kubectl -n kube-system edit stc <px-cluster-name>

    apiVersion: core.libopenstorage.org/v1
    kind: StorageCluster
    metadata:
      name: portworx
      namespace: kube-system
    spec:
      monitoring:
        prometheus:
          enabled: true
          exportMetrics: true
          alertManager:
            enabled: true

- Verify that the Alertmanager pods are running using the following command:

    kubectl -n kube-system get pods | grep -i alertmanager

    alertmanager-portworx-0   2/2   Running   0   4m9s
    alertmanager-portworx-1   2/2   Running   0   4m9s
    alertmanager-portworx-2   2/2   Running   0   4m9s

Note: To view the complete list of out-of-the-box default rules, see the View provided Prometheus rules section below.
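A common mistake in an Alertmanager configuration is a route that names a receiver that is never defined. The following sketch illustrates that check on a configuration held as a Python dictionary. It is an illustration only: the function name undefined_receivers is hypothetical, and the dictionary mirrors only the route and receivers parts of the sample configuration.

```python
def undefined_receivers(config: dict) -> set:
    """Return receiver names referenced by routes but missing from `receivers`."""
    defined = {r["name"] for r in config.get("receivers", [])}

    def referenced(route: dict) -> set:
        # A route may name a receiver and may contain nested child routes.
        names = {route["receiver"]} if "receiver" in route else set()
        for child in route.get("routes", []):
            names |= referenced(child)
        return names

    return referenced(config.get("route", {})) - defined


# Route and receivers from the sample configuration (SMTP settings omitted).
config = {
    "route": {"receiver": "email-me"},
    "receivers": [
        {"name": "email-me", "email_configs": [{"to": "username@company.com"}]},
    ],
}
print(undefined_receivers(config))  # prints set()
```

An empty result means every receiver referenced by a route is defined.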

Access the Alertmanager UI

To access the Alertmanager UI and view the Alertmanager Status and alerts, you need to set up port forwarding and browse to the specified port. In this example, port forwarding is provided for ease of access to the Alertmanager service from your local machine using the port 9093.

- Set up port forwarding:

    kubectl -n kube-system port-forward service/alertmanager-portworx --address=<masternodeIP> 9093:9093

- Access the Alertmanager UI by browsing to http://<masternodeIP>:9093/#/status.

Portworx Central on-premises includes Grafana and Portworx dashboards natively, which you can use to monitor your Portworx cluster. Refer to the Portworx Central documentation for further details.

Access the Prometheus UI

To access the Prometheus UI and view Status, Graph, and default Alerts, you also need to set up port forwarding and browse to the specified port. In this example, port forwarding provides access to the Prometheus UI service from your local machine on port 9090.

- Set up port forwarding:

    kubectl -n kube-system port-forward service/px-prometheus 9090:9090

- Access the Prometheus UI by browsing to http://localhost:9090/alerts.
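Besides the UI, Prometheus exposes the same alert information through its HTTP API at /api/v1/alerts. The following sketch assumes the port-forward above is active on localhost:9090; the function names and the alert names in the example payload are invented for illustration. It lists the alerts that are currently firing.

```python
import json
import urllib.request


def firing_alerts(payload: dict) -> list:
    """Return the alertname label of every alert in the 'firing' state."""
    alerts = payload.get("data", {}).get("alerts", [])
    return sorted(
        a["labels"].get("alertname", "") for a in alerts if a.get("state") == "firing"
    )


def fetch_alerts(base_url: str = "http://localhost:9090") -> dict:
    """Fetch /api/v1/alerts from Prometheus (requires the port-forward to be active)."""
    with urllib.request.urlopen(f"{base_url}/api/v1/alerts", timeout=10) as resp:
        return json.load(resp)


# Example response shape; the alert names here are invented for illustration.
example = {
    "status": "success",
    "data": {
        "alerts": [
            {"labels": {"alertname": "ExampleAlertA"}, "state": "firing"},
            {"labels": {"alertname": "ExampleAlertB"}, "state": "pending"},
        ]
    },
}
print(firing_alerts(example))  # prints ['ExampleAlertA']
```

With the port-forward running, firing_alerts(fetch_alerts()) applies the same filter to live data.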

View provided Prometheus rules

To view the complete list of out-of-the-box default rules used for event notifications, perform the following steps.

- Get the Prometheus rules:

    kubectl -n kube-system get prometheusrules

    NAME       AGE
    portworx   46d

- Save the Prometheus rules to a YAML file:

    kubectl -n kube-system get prometheusrules portworx -o yaml > prometheusrules.yaml

- View the contents of the file:

    cat prometheusrules.yaml
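The rules file can be long, so a short summary of alert names per group is often easier to scan. The following sketch assumes you export the object with -o json instead of -o yaml, which avoids the need for a YAML library. The function name alerts_by_group is hypothetical, and the group and alert names in the sample are invented stand-ins for the real rules.

```python
import json


def alerts_by_group(rule_object: dict) -> dict:
    """Map each rule group name to the alert names it defines.

    Recording rules (entries with `record` instead of `alert`) are skipped.
    """
    result = {}
    for group in rule_object.get("spec", {}).get("groups", []):
        names = [r["alert"] for r in group.get("rules", []) if "alert" in r]
        result[group.get("name", "")] = names
    return result


# A tiny stand-in for the real object; group and alert names are invented.
sample = json.loads("""
{"kind": "PrometheusRule",
 "spec": {"groups": [{"name": "example.rules",
                      "rules": [{"alert": "ExampleAlert", "expr": "up == 0"},
                                {"record": "example:metric", "expr": "sum(up)"}]}]}}
""")
print(alerts_by_group(sample))  # prints {'example.rules': ['ExampleAlert']}
```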

Configure Grafana

You can connect to Prometheus using Grafana to visualize your data. Grafana is a multi-platform open source analytics and interactive visualization web application. It provides charts, graphs, and alerts.

- Enter the following commands to download the Grafana dashboard and data source configuration files:

    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/grafana-dashboard-config.yaml
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/grafana-datasource.yaml

- Create a configmap for the dashboard and data source:

    kubectl -n <px-namespace> create configmap grafana-dashboard-config --from-file=grafana-dashboard-config.yaml
    kubectl -n <px-namespace> create configmap grafana-source-config --from-file=grafana-datasource.yaml

- Download and install Grafana dashboards using the following commands:

    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/portworx-cluster-dashboard.json
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/portworx-node-dashboard.json
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/portworx-volume-dashboard.json
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/portworx-performance-dashboard.json
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/portworx-etcd-dashboard.json
    # Optional: The following files are required only if you need to monitor API requests sent to FlashArray.
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/fa-apis-dashboard1.json
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/fa-apis-dashboard2.json
    # Optional: The following file is required only if you use Stork for DR/migrations.
    wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/portworx-dr-dashboard.json

    kubectl -n <px-namespace> create configmap grafana-dashboards \
      --from-file=portworx-cluster-dashboard.json \
      --from-file=portworx-performance-dashboard.json \
      --from-file=portworx-node-dashboard.json \
      --from-file=portworx-volume-dashboard.json \
      --from-file=portworx-etcd-dashboard.json \
      --from-file=fa-apis-dashboard1.json \
      --from-file=fa-apis-dashboard2.json \
      --from-file=portworx-dr-dashboard.json

  The last three --from-file flags refer to the optional FlashArray and DR dashboards. Remove the flags for any optional files that you did not download.

- Enter the following command to download and install the Grafana YAML file:

    kubectl -n <px-namespace> apply -f https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/grafana.yaml

  Note: For the SUSE Linux Micro distribution, download the grafana.yaml file using the command wget https://docs.portworx.com/portworx-enterprise/samples/k8s/pxc/grafana.yaml. We recommend updating the Grafana image version to 9.5.21 in the downloaded file and using that file in the command above.

- Verify that the Grafana pod is running using the following command:

    kubectl -n <px-namespace> get pods | grep -i grafana

    grafana-7d789d5cf9-bklf2   1/1   Running   0   3m12s

- Access Grafana by setting up port forwarding and browsing to the specified port. In this example, port forwarding provides access to the Grafana service from your local machine on port 3000:

    kubectl -n <px-namespace> port-forward service/grafana 3000:3000

- Navigate to Grafana by browsing to http://localhost:3000.

- Enter the default credentials to log in:

    login: admin
    password: admin

Install Node Exporter

After you have configured Grafana, install the Node Exporter binary. For Portworx, Node Exporter collects key metrics such as Network Sent/Received, Volume, Latency, Input/Output Operations per Second (IOPS), and Throughput, which Grafana can visualize to monitor these resources.

The following DaemonSet runs in the kube-system namespace. The examples below use kube-system; update this to the correct namespace for your environment, and be sure to install Node Exporter in the same namespace where Prometheus and Grafana are running.

- Install node-exporter via DaemonSet by creating a YAML file named node-exporter.yaml:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      labels:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: node-exporter
      name: node-exporter
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          app.kubernetes.io/component: exporter
          app.kubernetes.io/name: node-exporter
      template:
        metadata:
          labels:
            app.kubernetes.io/component: exporter
            app.kubernetes.io/name: node-exporter
        spec:
          containers:
          - args:
            - --path.sysfs=/host/sys
            - --path.rootfs=/host/root
            - --no-collector.wifi
            - --no-collector.hwmon
            - --collector.filesystem.ignored-mount-points=^/(dev|proc|sys|var/lib/docker/.+|var/lib/kubelet/pods/.+)($|/)
            - --collector.netclass.ignored-devices=^(veth.*)$
            name: node-exporter
            image: prom/node-exporter
            ports:
            - containerPort: 9100
              protocol: TCP
            resources:
              limits:
                cpu: 250m
                memory: 180Mi
              requests:
                cpu: 102m
                memory: 180Mi
            volumeMounts:
            - mountPath: /host/sys
              mountPropagation: HostToContainer
              name: sys
              readOnly: true
            - mountPath: /host/root
              mountPropagation: HostToContainer
              name: root
              readOnly: true
          volumes:
          - hostPath:
              path: /sys
            name: sys
          - hostPath:
              path: /
            name: root

- Apply the object using the following command:

    kubectl apply -f node-exporter.yaml -n kube-system

    daemonset.apps/node-exporter created

Create a service

A Kubernetes service connects a set of pods to an abstracted service name and IP address, and provides discovery and routing between the pods. The following service is named node-exporter, is defined in a file called node-exportersvc.yaml, and uses port 9100.

- Create the object file and name it node-exportersvc.yaml:

    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: node-exporter
      namespace: kube-system
      labels:
        name: node-exporter
    spec:
      selector:
        app.kubernetes.io/component: exporter
        app.kubernetes.io/name: node-exporter
      ports:
      - name: node-exporter
        protocol: TCP
        port: 9100
        targetPort: 9100

- Create the service by running the following command:

    kubectl apply -f node-exportersvc.yaml -n kube-system

    service/node-exporter created
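The service only routes to the Node Exporter pods if its selector matches the labels in the DaemonSet pod template. The following sketch illustrates that equality-based matching rule with the labels used in this section; the function name selector_matches is hypothetical, and the case of a Service without any selector is not modeled.

```python
def selector_matches(selector: dict, pod_labels: dict) -> bool:
    """True when every key/value pair in the selector is present in the pod labels."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


pod_labels = {  # labels from the DaemonSet pod template
    "app.kubernetes.io/component": "exporter",
    "app.kubernetes.io/name": "node-exporter",
}
service_selector = {  # spec.selector from node-exportersvc.yaml
    "app.kubernetes.io/component": "exporter",
    "app.kubernetes.io/name": "node-exporter",
}
print(selector_matches(service_selector, pod_labels))  # prints True
```

If the two sets of labels drift apart, for example after renaming a label in only one file, the service ends up with no endpoints and Prometheus has nothing to scrape.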

Create a service monitor

The ServiceMonitor scrapes the metrics using the following matchLabels and endpoint.

- Create the object file and name it node-exporter-svcmonitor.yaml:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: node-exporter
      labels:
        prometheus: portworx
    spec:
      selector:
        matchLabels:
          name: node-exporter
      endpoints:
      - port: node-exporter

- Create the ServiceMonitor object by running the following command:

    kubectl apply -f node-exporter-svcmonitor.yaml -n kube-system

    servicemonitor.monitoring.coreos.com/node-exporter created

- Verify that the prometheus object has the following serviceMonitorSelector appended:

    kubectl get prometheus -n kube-system -o yaml

    serviceMonitorSelector:
      matchExpressions:
      - key: prometheus
        operator: In
        values:
        - portworx
        - px-backup

The Portworx Operator automatically appends the serviceMonitorSelector to the prometheus object that it deploys. The selector matches any ServiceMonitor that has a label with the key prometheus and a value of portworx or px-backup.
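To make the selector semantics concrete, the following sketch evaluates matchExpressions against a set of labels using the standard Kubernetes label-selector operators. It is an illustration of the matching rule, not code from the Prometheus Operator; the function name matches_expressions is hypothetical.

```python
def matches_expressions(labels: dict, match_expressions: list) -> bool:
    """Evaluate label-selector matchExpressions; all expressions must hold."""
    for expr in match_expressions:
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        if op == "In":
            ok = labels.get(key) in values  # key must exist with a listed value
        elif op == "NotIn":
            ok = labels.get(key) not in values  # also true when the key is absent
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


# The serviceMonitorSelector shown above.
selector = [{"key": "prometheus", "operator": "In", "values": ["portworx", "px-backup"]}]

print(matches_expressions({"prometheus": "portworx"}, selector))  # prints True
print(matches_expressions({"prometheus": "other"}, selector))     # prints False
```

The node-exporter ServiceMonitor carries the label prometheus: portworx, so it satisfies the In expression and is picked up.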

View Node Exporter dashboard in Grafana

Log in to the Grafana UI, navigate from Dashboards to the Manage section, and select Portworx Performance Monitor. The panels labeled (Node Exporter) show the Node Exporter metrics.

Grafana dashboards for Portworx

Grafana offers several built-in dashboards for monitoring Portworx. These dashboards provide a real-time view of the system’s performance and status, helping you maintain optimal performance and quickly diagnose any issues.

Etcd dashboard

The Etcd Dashboard provides metrics specific to the etcd component, which is critical for cluster coordination.

Key panels include:

- Disk Sync Duration: Tracks the latency of persisting etcd log entries to disk. High values (> 1s) may indicate a problem with the KVDB disk.

- Up: Monitors the health of KVDB nodes.

Portworx Cluster dashboard

This dashboard provides an overview of the cluster's storage and health.

Key panels include:

- Usage Meter: Displays the percentage of utilized storage compared to total capacity.

- Capacity Used: Shows the actual storage space used in the cluster.

- Nodes (total): Displays the number of nodes in the Portworx cluster.

- Storage Providers: Indicates how many storage nodes are currently online.

- Quorum: Tracks the quorum status of the cluster.

- Nodes online: Number of online nodes in the cluster (includes storage and storage-less).

- Avg. Cluster CPU: Monitors the average CPU usage across all nodes.

Portworx Node dashboard

The Node dashboard focuses on individual nodes within the cluster.

Key panels include:

- PWX Disk Usage: Monitors the Portworx storage space used per node.

- PWX Disk IO: Displays the time spent on disk read and write operations per node.

- PWX Disk Throughput: Shows the rate of total bytes read and written for each node.

- PWX Disk Latency: Provides the average time spent on read and write operations for each node.

Portworx Volume dashboard

The Volume dashboard provides insights into the performance and utilization of storage volumes within the cluster. It is divided into two main sections: All Volumes in the Cluster and Individual Volumes, offering a detailed view of both overall and per-volume metrics.

All Volumes in the Cluster

This section displays metrics aggregated across all volumes in the cluster, helping you track overall performance and identify any potential bottlenecks.

- Avg Read Latency (1m): Average time (in seconds) spent on completing read operations during the last minute for all volumes.

- Avg Write Latency (1m): Average time (in seconds) spent on completing write operations during the last minute for all volumes.

- Top n Volumes by Capacity: Lists the top n volumes in the cluster based on their storage capacity.

- Top n Volumes by IO Depth: Lists the top n volumes based on the number of I/O operations currently in progress.

Individual Volumes

This section provides metrics for each individual volume in the cluster, allowing for detailed monitoring of specific volume performance and usage.

- Replication Level (HA): Displays both the current and configured High Availability (HA) level for the volume.

- Avg Read Latency: Average time (in seconds) spent per successfully completed read operation for the volume.

- Avg Write Latency: Average time (in seconds) spent per successfully completed write operation for the volume.

- Volume Usage: Shows the total capacity and the used storage space for the volume.

- Volume Latency: Displays the average time (in seconds) spent per successfully completed read and write operations during the given interval.

- Volume IOPs: Number of successfully completed I/O operations per second for the volume.

- Volume IO Depth: Number of I/O operations currently in progress for the volume.

- Volume IO Throughput: Displays the number of bytes read and written per second for the volume.

Portworx Performance dashboard

The Performance dashboard provides a comprehensive view of the performance metrics for your Portworx cluster. This dashboard helps you monitor the cluster’s overall health, storage usage, and I/O performance, enabling you to quickly identify any issues affecting performance.

Key panels include:

- Members: Displays the total number of nodes in your Portworx cluster.

- Total Volumes: The total number of volumes in the cluster.

- Storage Providers: Number of storage nodes that are currently online.

- Attached Volumes: Indicates the number of volumes that are attached to the nodes.

- Storage Offline: The count of nodes where the storage is either full or down.

- Avg HA Level: The average High Availability (HA) level of all volumes in the cluster.

- Total Available: Displays the total available storage space in the cluster.

- Total Used: The total size of volumes that have been provisioned. This is calculated based on the utilized disk space across all nodes.

- Volume Total Used: Shows the used storage space of all volumes combined.

- Storage Usage: Displays the utilized storage space for each individual node.

- Storage Pending IO: Number of read and write operations that are currently in progress for each node.

Volume-specific metrics

- Latency (Volume): Displays the average time (in seconds) spent per successfully completed read and write operations for each volume during the specified interval.

- Discarded Bytes: The total number of discarded bytes on the volume. These discards are replicated based on the volume’s replication factor. When an application deletes files, the file system converts these deletions into block discards on the Portworx volume.

- PX Pool Write Latency: The write latency experienced by Portworx when writing I/O operations to the page cache.

- PX Pool Write Throughput: The write throughput observed by Portworx, combining all I/O operations across all replicas provisioned on the pool. These represent the application-level I/Os performed on the pool.

- PX Pool Flush Latency: The time taken for Portworx to complete periodic flush/sync operations, which ensure the stability of data and associated metadata in the page cache.

- PX Pool Flush Throughput: The amount of data synced during each flush/sync operation, averaged over the time period.

- Volume IO Throughput: The number of bytes read and written per second for each volume.

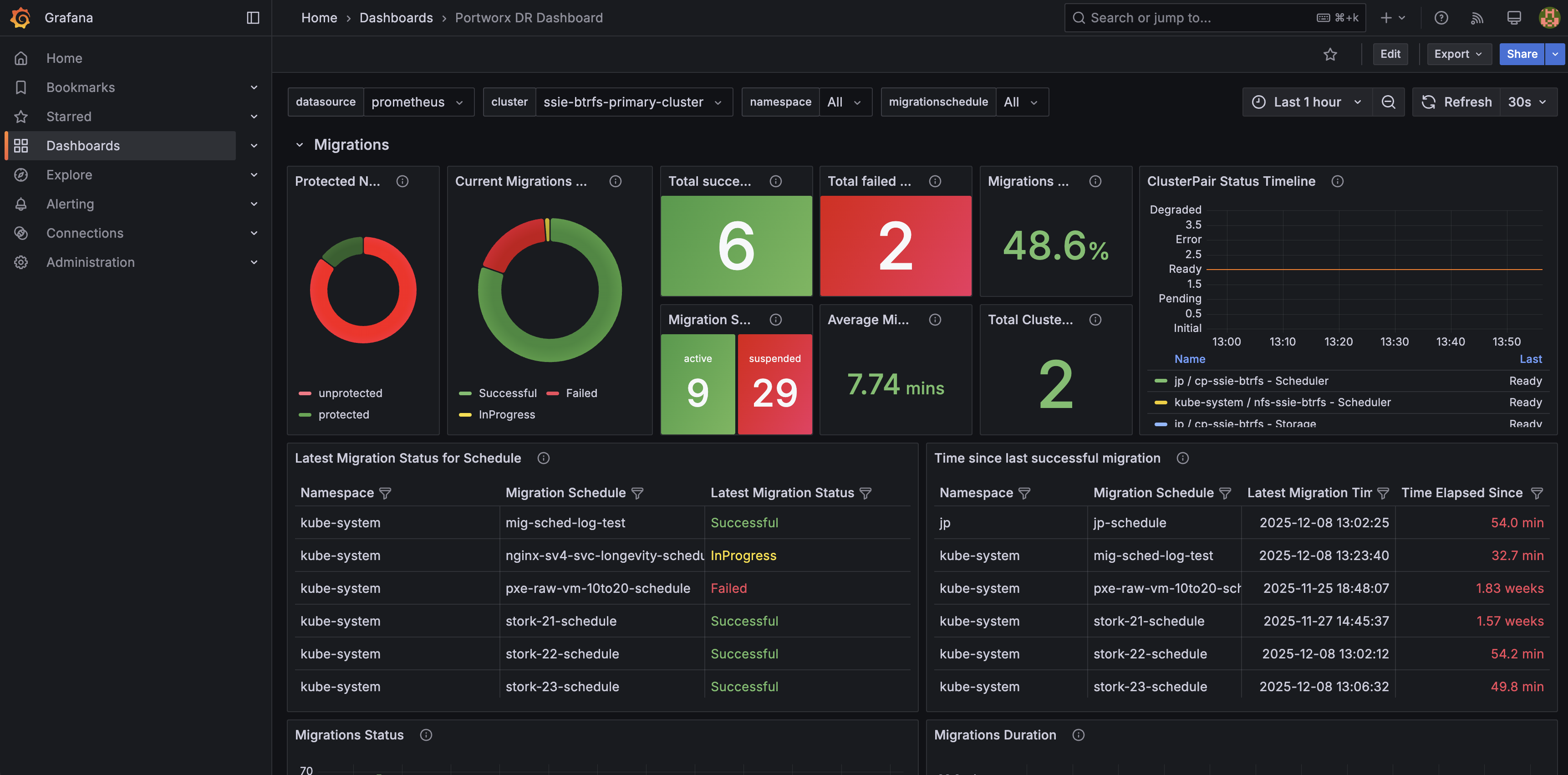

Portworx DR dashboard

The Portworx DR dashboard provides a high-level view of disaster recovery activity orchestrated by Stork, including migrations, failovers, and failbacks between clusters.

Key panels include:

- DR overview: Summarizes the current state of disaster recovery, including recent DR activity and overall health of your DR configuration.

- Migrations: Shows the status and progress of namespace migrations between source and destination clusters so you can quickly spot failures or long‑running operations.

- Failover and failback actions: Highlights recent DR operations across namespaces, helping you verify that planned or automated actions completed successfully.

- Protected namespaces and cluster pairs: Displays which namespaces are protected for DR and the state of cluster pairs between sites.

This dashboard is powered by the Portworx DR and Stork metrics. For details on the underlying metrics, see the Portworx DR and Stork metrics section.

Custom metrics and additional monitoring

Portworx also offers a wide range of custom metrics for monitoring specific aspects of your environment. For more information on available metrics, you can refer to the Portworx Metrics documentation.

Using Grafana to monitor Portworx clusters provides visibility into the health, performance, and usage of your storage environment. With built-in dashboards and customizable metrics, you can quickly identify issues and ensure your infrastructure runs smoothly.

Monitoring via pxctl

Portworx ships with the pxctl CLI, which you can use to perform management operations.

Where do I run pxctl?

You can run pxctl by accessing any worker node in your cluster with ssh or by running the kubectl exec command on any Portworx pod.

Listing Portworx storage pools

For more information, see the Storage Pools concept.

The following pxctl command lists all the Portworx storage pools in your cluster:

    pxctl cluster provision-status

    NODE                                  NODE STATUS  POOL                                        POOL STATUS  IO_PRIORITY  SIZE     AVAILABLE  USED     PROVISIONED  ZONE  REGION   RACK
    xxxxxxxx-xxxx-xxxx-xxxx-299df278b7d5  Up           0 ( xxxxxxxx-xxxx-xxxx-xxxx-55b59ddd8f2b )  Online       HIGH         100 GiB  86 GiB     14 GiB   28 GiB       AZ1   default  default
    xxxxxxxx-xxxx-xxxx-xxxx-6e8e9a0e00fb  Up           0 ( xxxxxxxx-xxxx-xxxx-xxxx-00393d023fe1 )  Online       HIGH         100 GiB  93 GiB     7.0 GiB  1.0 GiB      AZ1   default  default
    xxxxxxxx-xxxx-xxxx-xxxx-135ef03cfa34  Up           0 ( xxxxxxxx-xxxx-xxxx-xxxx-596c0ceab709 )  Online       HIGH         100 GiB  93 GiB     7.0 GiB  0 B          AZ1   default  default
    xxxxxxxx-xxxx-xxxx-xxxx-fa69c643d7bf  Up           0 ( xxxxxxxx-xxxx-xxxx-xxxx-1c560f914963 )  Online       HIGH         100 GiB  93 GiB     7.0 GiB  0 B          AZ1   default  default
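The SIZE and USED columns make it easy to derive a per-pool usage percentage. The following sketch shows the arithmetic with values from the sample output. The helper names are hypothetical, and the unit table assumes binary prefixes; only B and GiB actually appear in the sample.

```python
# Binary unit multipliers; units other than B and GiB are assumed, not shown in the sample.
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def to_bytes(size: str) -> float:
    """Convert a size such as '7.0 GiB' or '0 B' to bytes."""
    number, unit = size.split()
    return float(number) * UNITS[unit]


def used_percent(size: str, used: str) -> float:
    """Percentage of pool capacity in use, rounded to one decimal place."""
    return round(100 * to_bytes(used) / to_bytes(size), 1)


print(used_percent("100 GiB", "14 GiB"))   # prints 14.0
print(used_percent("100 GiB", "7.0 GiB"))  # prints 7.0
```

Comparing PROVISIONED against SIZE in the same way shows how much capacity has been promised to volumes, which can exceed actual usage, as in the first pool (28 GiB provisioned, 14 GiB used).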

Monitoring Using Portworx Central

Portworx Central simplifies management, monitoring, and metadata services for one or more Portworx clusters on Kubernetes. Using this single pane of glass, enterprises can easily manage the state of their hybrid- and multi-cloud Kubernetes applications with embedded monitoring and metrics directly in the Portworx user interface.

A Portworx cluster needs to be updated to Portworx Enterprise 2.9 before using Portworx Central.

For more information about installing Portworx Central and its components, refer to the Portworx Central documentation.

Listing Portworx disks (VMDKs)

Where are Portworx VMDKs located?

Portworx creates disks in a folder called osd-provisioned-disks in the ESXi datastore. The names of the VMDKs created by Portworx have the prefix PX-DO-NOT-DELETE-.

The pxctl clouddrive command (see Cloud Drives (ASG) using pxctl) gives more insight into the disks provisioned by Portworx in a vSphere environment. The following command provides details on all VMware disks (VMDKs) created by Portworx in your cluster:

pxctl clouddrive list

Cloud Drives Summary

Number of nodes in the cluster: 4

Number of drive sets in use: 4

List of storage nodes: [xxxxxxxx-xxxx-xxxx-xxxx-299df278b7d5 xxxxxxxx-xxxx-xxxx-xxxx-6e8e9a0e00fb xxxxxxxx-xxxx-xxxx-xxxx-135ef03cfa34 xxxxxxxx-xxxx-xxxx-xxxx-fa69c643d7bf]

List of storage less nodes: []

Drive Set List

NodeIndex NodeID InstanceID Zone State Drive IDs

2 xxxxxxxx-xxxx-xxxx-xxxx-135ef03cfa34 xxxxxxxx-xxxx-xxxx-xxxx-c7a9708ae56d AZ1 In Use [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-2dee113fa334.vmdk(metadata), [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-102ec24e44ab.vmdk(data)

3 xxxxxxxx-xxxx-xxxx-xxxx-fa69c643d7bf xxxxxxxx-xxxx-xxxx-xxxx-58a3ea11ce8e AZ1 In Use [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-2f0dd2e5a3a4.vmdk(metadata), [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-abcb04ce8ffa.vmdk(data)

0 xxxxxxxx-xxxx-xxxx-xxxx-299df278b7d5 xxxxxxxx-xxxx-xxxx-xxxx-85910785c40f AZ1 In Use [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-a7b1aa11dad1.vmdk(data), [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-3903b92659de.vmdk(metadata)

1 xxxxxxxx-xxxx-xxxx-xxxx-6e8e9a0e00fb xxxxxxxx-xxxx-xxxx-xxxx-d40a2a02b391 AZ1 In Use [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-24ddc46fef0a.vmdk(metadata), [datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-762c6de09f19.vmdk(data)
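The Drive IDs column packs the datastore, folder, VMDK file name, and role of each disk into one string. The following sketch pulls those parts out with a regular expression. The entry format is inferred from the sample output above rather than from a documented specification, and the names DRIVE_RE and parse_drive_ids are hypothetical.

```python
import re

# Matches "[datastore] folder/PX-DO-NOT-DELETE-<id>.vmdk(role)" entries.
DRIVE_RE = re.compile(
    r"\[(?P<datastore>[^\]]+)\]\s+\S+/"
    r"(?P<vmdk>PX-DO-NOT-DELETE-[^/\s]+\.vmdk)\((?P<role>\w+)\)"
)


def parse_drive_ids(drive_ids: str) -> list:
    """Return (datastore, vmdk file name, role) tuples from a Drive IDs column."""
    return [(m["datastore"], m["vmdk"], m["role"]) for m in DRIVE_RE.finditer(drive_ids)]


# Drive IDs value for node index 2 in the sample output.
drive_ids = (
    "[datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/"
    "PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-2dee113fa334.vmdk(metadata), "
    "[datastore-589] xxxxxxxx-xxxx-xxxx-xxxx-ac1f6b204d08/"
    "PX-DO-NOT-DELETE-xxxxxxxx-xxxx-xxxx-xxxx-102ec24e44ab.vmdk(data)"
)
for datastore, vmdk, role in parse_drive_ids(drive_ids):
    print(role, datastore, vmdk)
```

Each node in the sample has one metadata disk and one data disk, so the parser returns two tuples per drive set.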