Installation on an Amazon Elastic Kubernetes Service (EKS) Cluster using Portworx Central

This topic provides instructions for installing Portworx on an Amazon Elastic Kubernetes Service (EKS) cluster using Portworx Central. You can use the installation instructions below to deploy Portworx on AWS Outposts, a kOps-based EKS cluster, Amazon EKS with AWS Auto Scaling groups (ASGs), and EKS-D.

The following collection of tasks describes how to install Portworx on an Amazon EKS cluster using Portworx Central:

- Create an IAM policy

- Attach the IAM policy

- Generate the Portworx specification

- Deploy the Portworx Operator

- Deploy the StorageCluster

- Monitor Portworx nodes

- Verify Portworx pod status

- Verify Portworx cluster status

- Verify Portworx pool status

- Verify pxctl cluster provision status

Complete all the tasks to install Portworx.

Create an IAM policy

Provide permissions for all instances in the Auto Scaling group by creating an IAM policy, which you then attach to your node instance role or AWS user account.

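Perform the following steps in the AWS Management Console. Follow the procedure for either non-encrypted or encrypted volumes. The steps are identical; only the policy JSON differs, because the encrypted-volume policy adds a kms statement for your KMS key.

For non-encrypted volumes:

-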

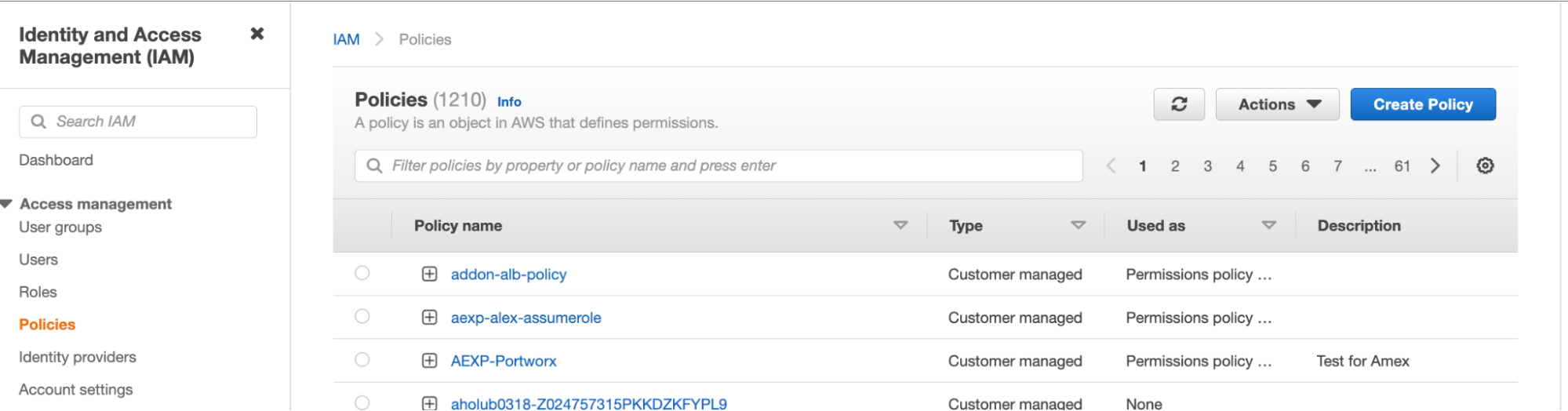

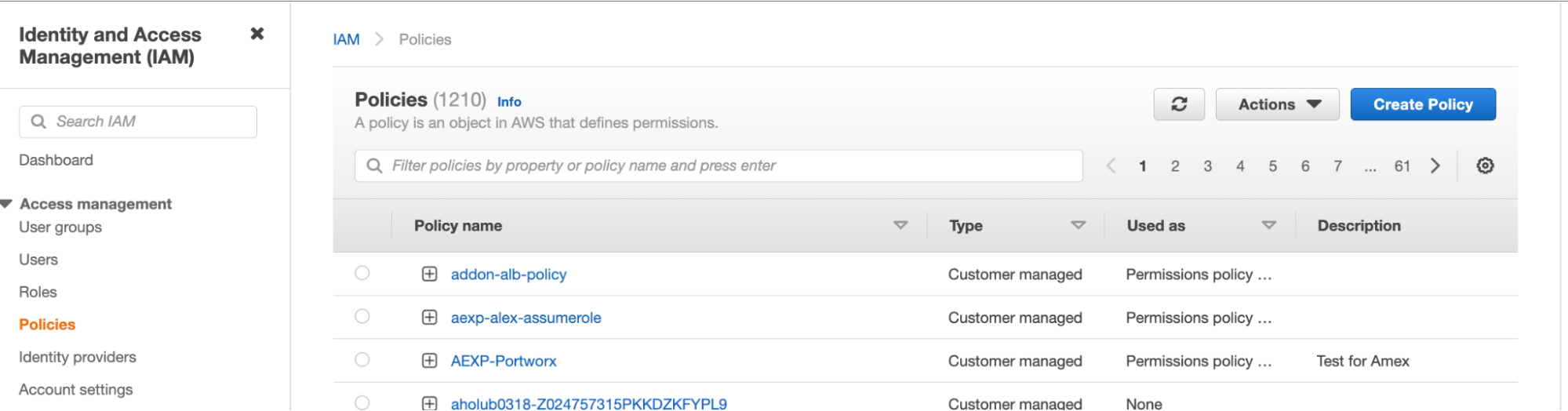

Navigate to the IAM page in the AWS Management Console. Select Policies under the Identity and Access Management (IAM) sidebar section, and then select Create policy in the upper-right corner.

-

Choose the JSON tab, and then paste the following permissions into the editor, providing your own value for Sid if applicable. You can use either the minimum permissions or the permissions required for disk encryption.

Note: These are the minimum permissions required for storage operations for a Portworx cluster. For the complete set of permissions for all Portworx storage operations, see the credentials reference.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "ec2",

"Effect": "Allow",

"Action": [

"ec2:AttachVolume",

"ec2:ModifyVolume",

"ec2:DetachVolume",

"ec2:CreateTags",

"ec2:CreateVolume",

"ec2:DeleteTags",

"ec2:DeleteVolume",

"ec2:DescribeTags",

"ec2:DescribeVolumeAttribute",

"ec2:DescribeVolumesModifications",

"ec2:DescribeVolumeStatus",

"ec2:DescribeVolumes",

"ec2:DescribeInstances",

"autoscaling:DescribeAutoScalingGroups"

],

"Resource": [

"*"

]

},

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": "ec2:DeleteInternetGateway",

"Resource": [

"arn:aws:iam::*:role/eksctl-*",

"arn:aws:ec2:*:*:internet-gateway/*"

]

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": "ec2:DeleteInternetGateway",

"Resource": "arn:aws:iam::*:instance-profile/eksctl-*"

},

{

"Sid": "VisualEditor2",

"Effect": "Allow",

"Action": [

"iam:CreateInstanceProfile",

"iam:DeleteInstanceProfile",

"iam:GetRole",

"iam:GetInstanceProfile",

"iam:RemoveRoleFromInstanceProfile",

"iam:CreateRole",

"iam:DeleteRole",

"iam:AttachRolePolicy",

"iam:PutRolePolicy",

"iam:AddRoleToInstanceProfile",

"iam:ListInstanceProfilesForRole",

"iam:PassRole",

"iam:CreateServiceLinkedRole",

"iam:DetachRolePolicy",

"iam:DeleteRolePolicy",

"iam:DeleteServiceLinkedRole",

"iam:GetRolePolicy"

],

"Resource": [

"arn:aws:iam::*:instance-profile/eksctl-*",

"arn:aws:iam::*:role/eksctl-*"

]

},

{

"Sid": "VisualEditor3",

"Effect": "Allow",

"Action": [

"ec2:AuthorizeSecurityGroupIngress",

"ec2:DeleteSubnet",

"ec2:AttachInternetGateway",

"ec2:DescribeSnapshots",

"ec2:DeleteSnapshot",

"ec2:DeleteRouteTable",

"ec2:AssociateRouteTable",

"ec2:DescribeInternetGateways",

"ec2:CreateRoute",

"ec2:CreateInternetGateway",

"ec2:RevokeSecurityGroupEgress",

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:UpdateAutoScalingGroup",

"ec2:DeleteInternetGateway",

"ec2:DescribeKeyPairs",

"ec2:DescribeRouteTables",

"ecr:BatchCheckLayerAvailability",

"ecr:GetLifecyclePolicy",

"ecr:DescribeImageScanFindings",

"ec2:ImportKeyPair",

"ec2:DescribeLaunchTemplates",

"ec2:CreateTags",

"ecr:GetDownloadUrlForLayer",

"ec2:CreateRouteTable",

"cloudformation:*",

"ec2:RunInstances",

"ecr:GetAuthorizationToken",

"ec2:DetachInternetGateway",

"ec2:DisassociateRouteTable",

"ec2:RevokeSecurityGroupIngress",

"ec2:DescribeImageAttribute",

"ecr:BatchGetImage",

"ecr:DescribeImages",

"ec2:DeleteNatGateway",

"ec2:DeleteVpc",

"autoscaling:DeleteAutoScalingGroup",

"eks:*",

"ec2:CreateSubnet",

"ec2:DescribeSubnets",

"autoscaling:CreateAutoScalingGroup",

"ec2:DescribeAddresses",

"ec2:DeleteTags",

"elasticfilesystem:*",

"ec2:CreateNatGateway",

"autoscaling:DescribeLaunchConfigurations",

"ec2:CreateVpc",

"ecr:ListTagsForResource",

"ecr:ListImages",

"ec2:DescribeVpcAttribute",

"ec2:DescribeAvailabilityZones",

"autoscaling:DescribeScalingActivities",

"ec2:CreateSecurityGroup",

"sts:DecodeAuthorizationMessage",

"ec2:CreateSnapshot",

"ec2:ModifyVpcAttribute",

"ecr:DescribeRepositories",

"ec2:ReleaseAddress",

"ec2:AuthorizeSecurityGroupEgress",

"ec2:DeleteLaunchTemplate",

"ec2:DescribeTags",

"ecr:GetLifecyclePolicyPreview",

"ec2:DeleteRoute",

"ec2:DescribeLaunchTemplateVersions",

"ec2:DescribeNatGateways",

"ec2:AllocateAddress",

"ec2:DescribeSecurityGroups",

"ec2:DescribeImages",

"autoscaling:CreateLaunchConfiguration",

"ec2:CreateLaunchTemplate",

"autoscaling:DeleteLaunchConfiguration",

"sts:Get*",

"ec2:DescribeVpcs",

"ec2:DeleteSecurityGroup",

"ecr:GetRepositoryPolicy"

],

"Resource": "*"

},

{

"Sid": "VisualEditor4",

"Effect": "Allow",

"Action": "iam:ListInstanceProfiles",

"Resource": [

"arn:aws:iam::*:instance-profile/eksctl-*",

"arn:aws:iam::*:role/eksctl-*"

]

}

]

}

-

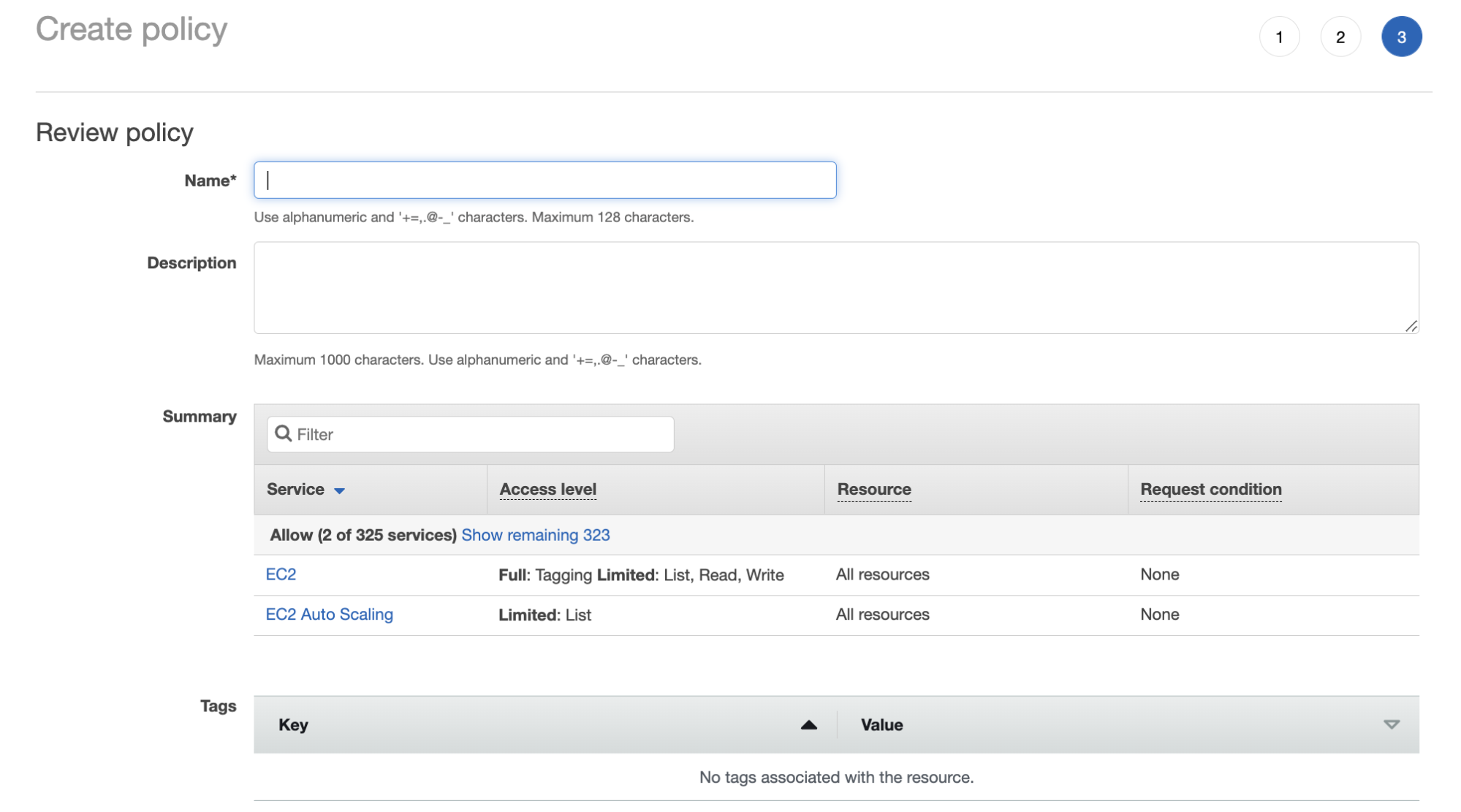

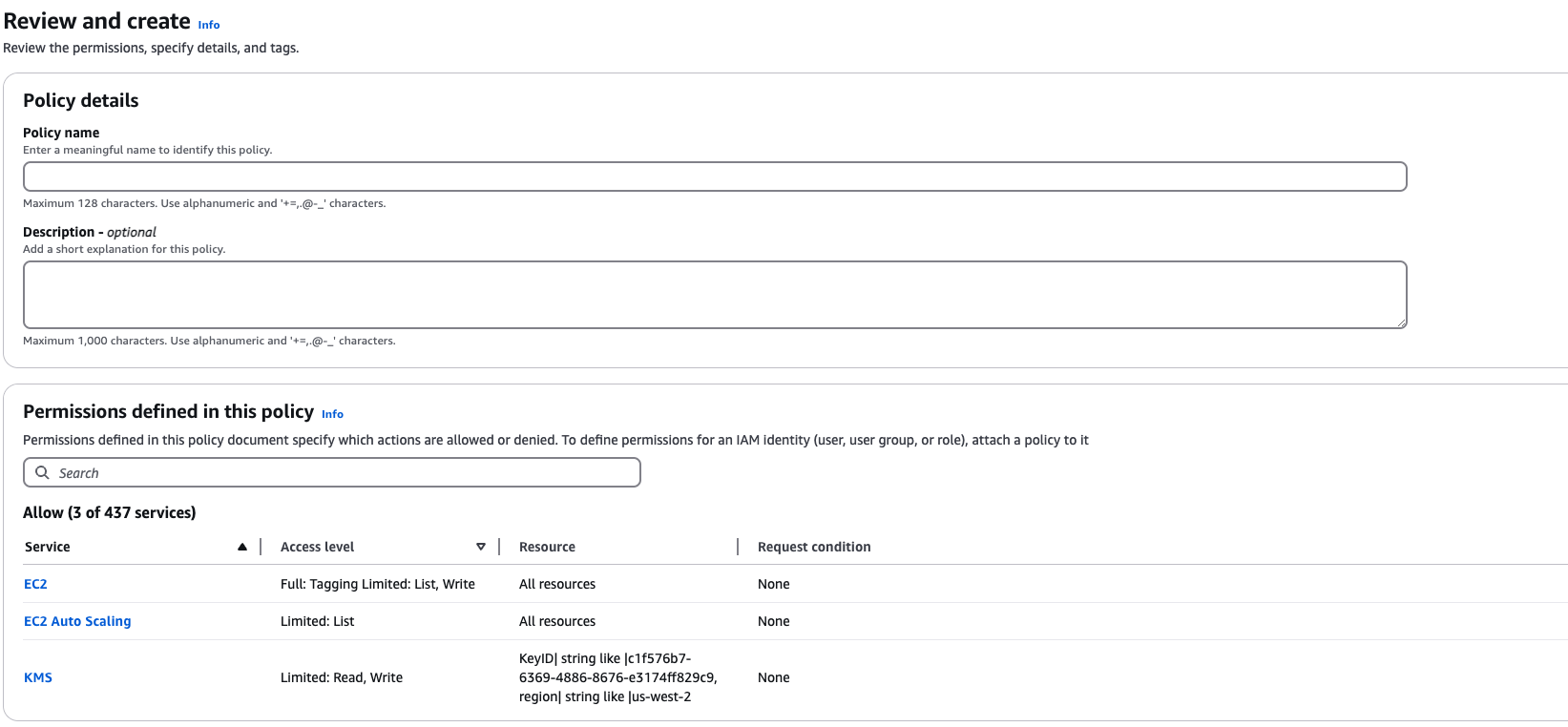

Name the policy and create it.
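If you would rather create the policy with the AWS CLI than paste it into the console, you first need the policy document in a file. The following Python sketch writes only the statement with Sid ec2 from the policy above, which contains the EBS volume actions. The other statements are omitted for brevity. The names build_policy, write_policy, and px-policy.json are hypothetical.

```python
import json

# Actions copied from the statement with Sid "ec2" in the policy above.
PX_STORAGE_ACTIONS = [
    "ec2:AttachVolume",
    "ec2:ModifyVolume",
    "ec2:DetachVolume",
    "ec2:CreateTags",
    "ec2:CreateVolume",
    "ec2:DeleteTags",
    "ec2:DeleteVolume",
    "ec2:DescribeTags",
    "ec2:DescribeVolumeAttribute",
    "ec2:DescribeVolumesModifications",
    "ec2:DescribeVolumeStatus",
    "ec2:DescribeVolumes",
    "ec2:DescribeInstances",
    "autoscaling:DescribeAutoScalingGroups",
]


def build_policy(sid: str = "ec2") -> dict:
    """Return a policy document containing only the storage statement."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": sid,  # provide your own Sid if applicable
                "Effect": "Allow",
                "Action": list(PX_STORAGE_ACTIONS),
                "Resource": ["*"],
            }
        ],
    }


def write_policy(path: str, policy: dict) -> None:
    """Write the policy as indented JSON so it is easy to review."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(policy, handle, indent=2)
        handle.write("\n")
```

For example, write_policy("px-policy.json", build_policy()) produces a file that you could pass to aws iam create-policy --policy-name <policy-name> --policy-document file://px-policy.json. Merge in any other statements you need before you create the policy.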

For encrypted volumes:

-

Navigate to the IAM page in the AWS Management Console. Select Policies under the Identity and Access Management (IAM) sidebar section, and then select Create policy in the upper-right corner.

-

Choose the JSON tab, and then paste the following permissions into the editor. Provide your own value for Sid if applicable, and replace the key ARN in the kms statement with the ARN of your own KMS key. You can use either the minimum permissions or the permissions required for disk encryption.

Note: These are the minimum permissions required for storage operations for a Portworx cluster. For the complete set of permissions for all Portworx storage operations, see the credentials reference.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "kms",

"Effect": "Allow",

"Action": [

"kms:Encrypt",

"kms:Decrypt",

"kms:ReEncrypt*",

"kms:GenerateDataKey*",

"kms:DescribeKey"

],

"Resource": [

"arn:aws:kms:us-west-2:383347425723:key/c1f576b7-6369-xxxx-xxxx-xxxxxxxxxxxxxx"

]

},

{

"Sid": "ec2",

"Effect": "Allow",

"Action": [

"ec2:AttachVolume",

"ec2:ModifyVolume",

"ec2:DetachVolume",

"ec2:CreateTags",

"ec2:CreateVolume",

"ec2:DeleteTags",

"ec2:DeleteVolume",

"ec2:DescribeTags",

"ec2:DescribeVolumeAttribute",

"ec2:DescribeVolumesModifications",

"ec2:DescribeVolumeStatus",

"ec2:DescribeVolumes",

"ec2:DescribeInstances",

"autoscaling:DescribeAutoScalingGroups"

],

"Resource": [

"*"

]

},

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": "ec2:DeleteInternetGateway",

"Resource": [

"arn:aws:iam::*:role/eksctl-*",

"arn:aws:ec2:*:*:internet-gateway/*"

]

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": "ec2:DeleteInternetGateway",

"Resource": "arn:aws:iam::*:instance-profile/eksctl-*"

},

{

"Sid": "VisualEditor2",

"Effect": "Allow",

"Action": [

"iam:CreateInstanceProfile",

"iam:DeleteInstanceProfile",

"iam:GetRole",

"iam:GetInstanceProfile",

"iam:RemoveRoleFromInstanceProfile",

"iam:CreateRole",

"iam:DeleteRole",

"iam:AttachRolePolicy",

"iam:PutRolePolicy",

"iam:AddRoleToInstanceProfile",

"iam:ListInstanceProfilesForRole",

"iam:PassRole",

"iam:CreateServiceLinkedRole",

"iam:DetachRolePolicy",

"iam:DeleteRolePolicy",

"iam:DeleteServiceLinkedRole",

"iam:GetRolePolicy"

],

"Resource": [

"arn:aws:iam::*:instance-profile/eksctl-*",

"arn:aws:iam::*:role/eksctl-*"

]

},

{

"Sid": "VisualEditor3",

"Effect": "Allow",

"Action": [

"ec2:AuthorizeSecurityGroupIngress",

"ec2:DeleteSubnet",

"ec2:AttachInternetGateway",

"ec2:DescribeSnapshots",

"ec2:DeleteSnapshot",

"ec2:DeleteRouteTable",

"ec2:AssociateRouteTable",

"ec2:DescribeInternetGateways",

"ec2:CreateRoute",

"ec2:CreateInternetGateway",

"ec2:RevokeSecurityGroupEgress",

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:UpdateAutoScalingGroup",

"ec2:DeleteInternetGateway",

"ec2:DescribeKeyPairs",

"ec2:DescribeRouteTables",

"ecr:BatchCheckLayerAvailability",

"ecr:GetLifecyclePolicy",

"ecr:DescribeImageScanFindings",

"ec2:ImportKeyPair",

"ec2:DescribeLaunchTemplates",

"ec2:CreateTags",

"ecr:GetDownloadUrlForLayer",

"ec2:CreateRouteTable",

"cloudformation:*",

"ec2:RunInstances",

"ecr:GetAuthorizationToken",

"ec2:DetachInternetGateway",

"ec2:DisassociateRouteTable",

"ec2:RevokeSecurityGroupIngress",

"ec2:DescribeImageAttribute",

"ecr:BatchGetImage",

"ecr:DescribeImages",

"ec2:DeleteNatGateway",

"ec2:DeleteVpc",

"autoscaling:DeleteAutoScalingGroup",

"eks:*",

"ec2:CreateSubnet",

"ec2:DescribeSubnets",

"autoscaling:CreateAutoScalingGroup",

"ec2:DescribeAddresses",

"ec2:DeleteTags",

"elasticfilesystem:*",

"ec2:CreateNatGateway",

"autoscaling:DescribeLaunchConfigurations",

"ec2:CreateVpc",

"ecr:ListTagsForResource",

"ecr:ListImages",

"ec2:DescribeVpcAttribute",

"ec2:DescribeAvailabilityZones",

"autoscaling:DescribeScalingActivities",

"ec2:CreateSecurityGroup",

"sts:DecodeAuthorizationMessage",

"ec2:CreateSnapshot",

"ec2:ModifyVpcAttribute",

"ecr:DescribeRepositories",

"ec2:ReleaseAddress",

"ec2:AuthorizeSecurityGroupEgress",

"ec2:DeleteLaunchTemplate",

"ec2:DescribeTags",

"ecr:GetLifecyclePolicyPreview",

"ec2:DeleteRoute",

"ec2:DescribeLaunchTemplateVersions",

"ec2:DescribeNatGateways",

"ec2:AllocateAddress",

"ec2:DescribeSecurityGroups",

"ec2:DescribeImages",

"autoscaling:CreateLaunchConfiguration",

"ec2:CreateLaunchTemplate",

"autoscaling:DeleteLaunchConfiguration",

"sts:Get*",

"ec2:DescribeVpcs",

"ec2:DeleteSecurityGroup",

"ecr:GetRepositoryPolicy"

],

"Resource": "*"

},

{

"Sid": "VisualEditor4",

"Effect": "Allow",

"Action": "iam:ListInstanceProfiles",

"Resource": [

"arn:aws:iam::*:instance-profile/eksctl-*",

"arn:aws:iam::*:role/eksctl-*"

]

}

]

}

-

Name the policy and create it.
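The encrypted-volume policy is the non-encrypted policy plus one kms statement scoped to your key. If you keep the policy as a Python dict, the following sketch adds that statement. add_kms_statement is a hypothetical helper. Its ARN check covers only the standard aws partition, and the key ARN in the usage note is a placeholder.

```python
import copy

# KMS actions copied from the statement with Sid "kms" in the policy above.
KMS_ACTIONS = [
    "kms:Encrypt",
    "kms:Decrypt",
    "kms:ReEncrypt*",
    "kms:GenerateDataKey*",
    "kms:DescribeKey",
]


def add_kms_statement(policy: dict, key_arn: str) -> dict:
    """Return a copy of policy with a "kms" statement for one key first.

    Raises ValueError if key_arn does not look like a KMS key ARN in the
    standard aws partition. The input policy is not modified.
    """
    if not key_arn.startswith("arn:aws:kms:") or ":key/" not in key_arn:
        raise ValueError(f"not a KMS key ARN: {key_arn!r}")
    updated = copy.deepcopy(policy)
    kms_statement = {
        "Sid": "kms",
        "Effect": "Allow",
        "Action": list(KMS_ACTIONS),
        "Resource": [key_arn],
    }
    # Drop any existing "kms" statement so repeated calls give the same result.
    others = [s for s in updated.get("Statement", []) if s.get("Sid") != "kms"]
    updated["Statement"] = [kms_statement] + others
    return updated
```

For example, add_kms_statement(build_your_policy(), "arn:aws:kms:<region>:<account-id>:key/<key-id>") returns the encrypted-volume variant. In that call, build_your_policy stands for however you load the base policy.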

Attach the IAM policy

Attach the previously created policy to your node instance role or user account.

- Attach policy to a node instance role

- Attach policy to your AWS user account

Follow the instructions below to attach the policy to your NodeInstanceRole:

-

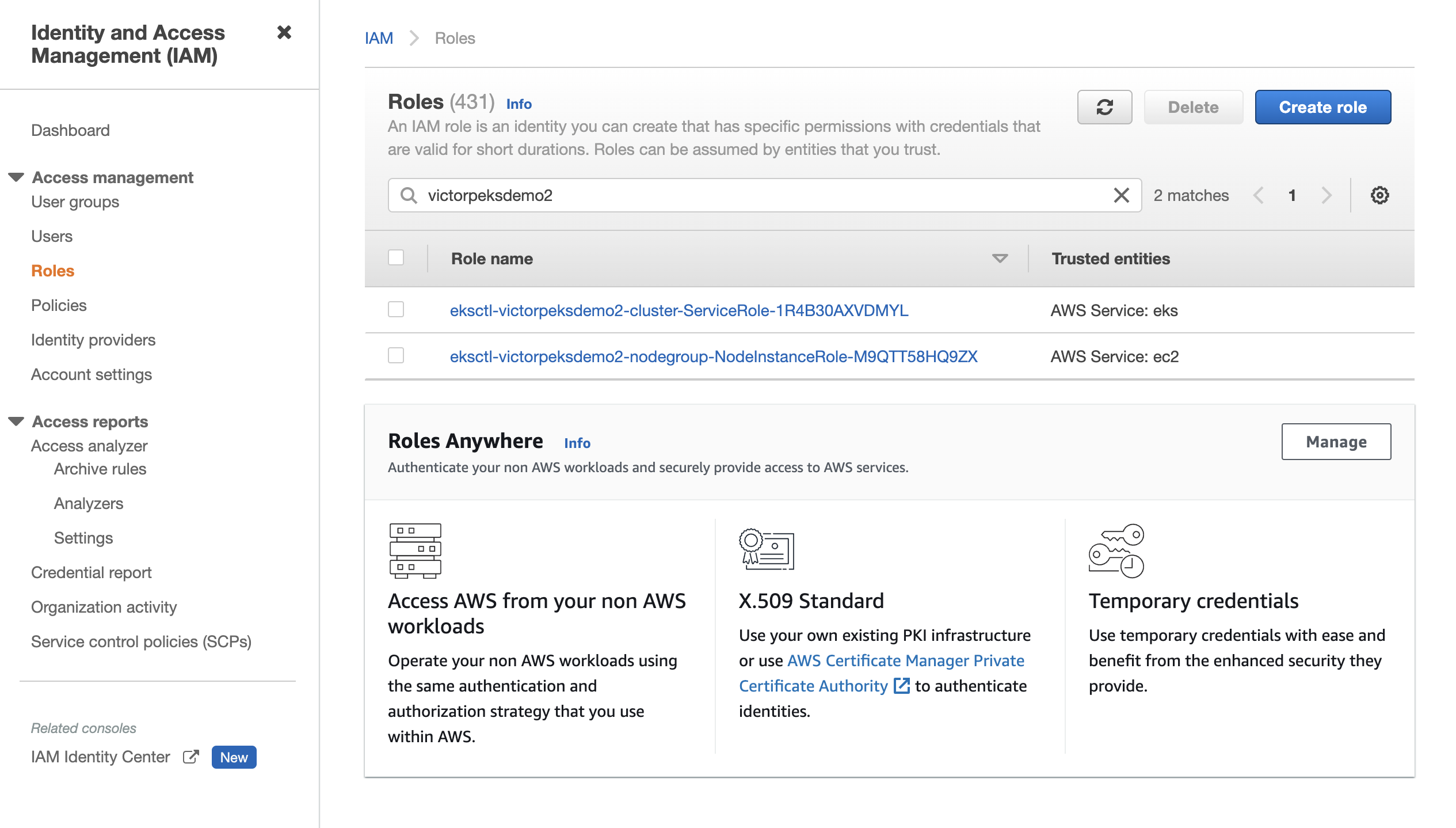

From the IAM page, select Roles in the left pane.

-

On the Roles page, search for and select your node group NodeInstanceRole using your cluster name. The following example shows eksctl-victorpeksdemo2-nodegroup-NodeInstanceRole-M9QTT58HQ9ZX as the node group instance role.

Note: If there is more than one node group NodeInstanceRole for your cluster, attach the policy to those NodeInstanceRoles as well.

-

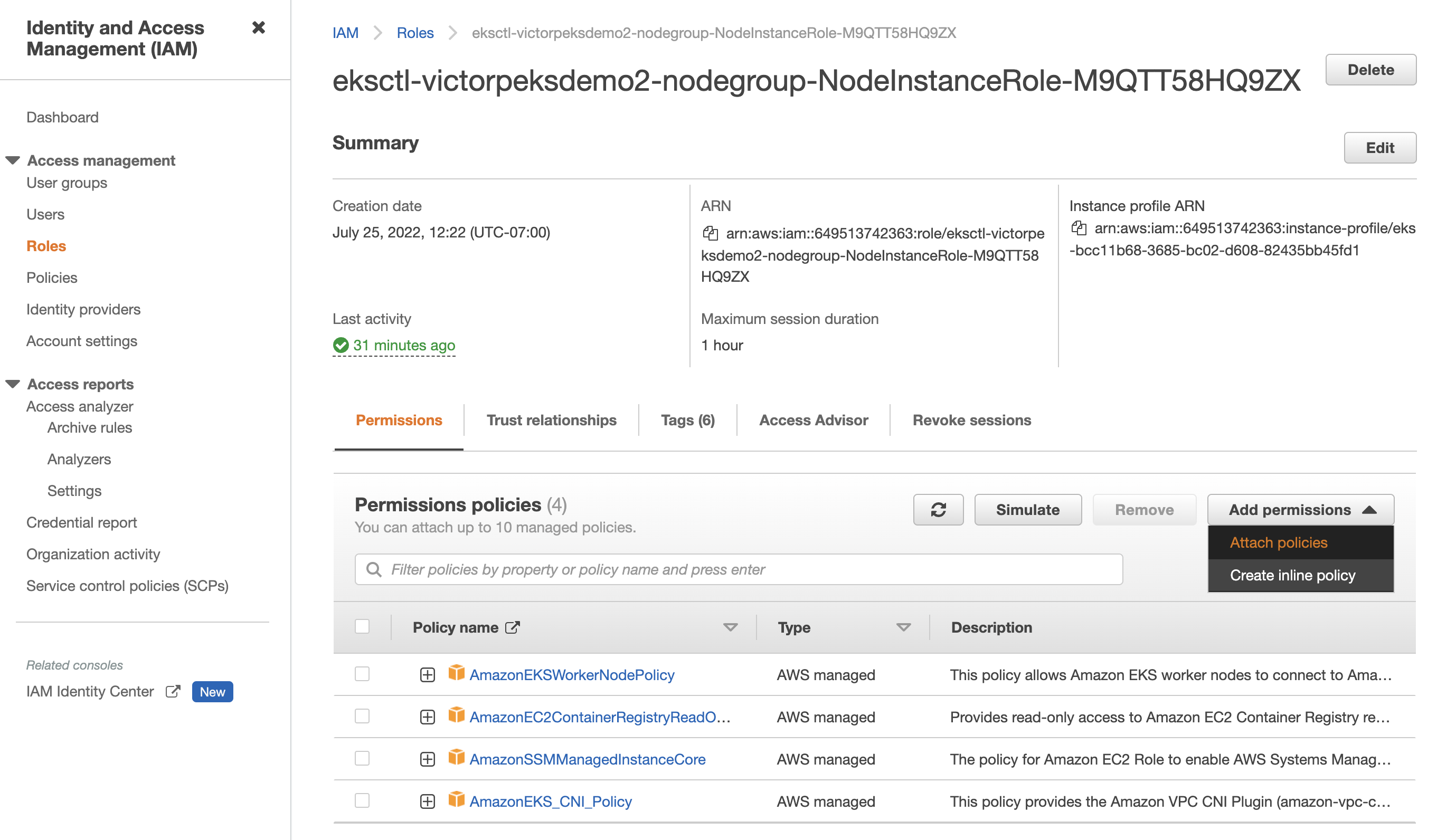

Attach the previously created policy by selecting Attach policies from the Add permissions dropdown on the right side of the screen.

-

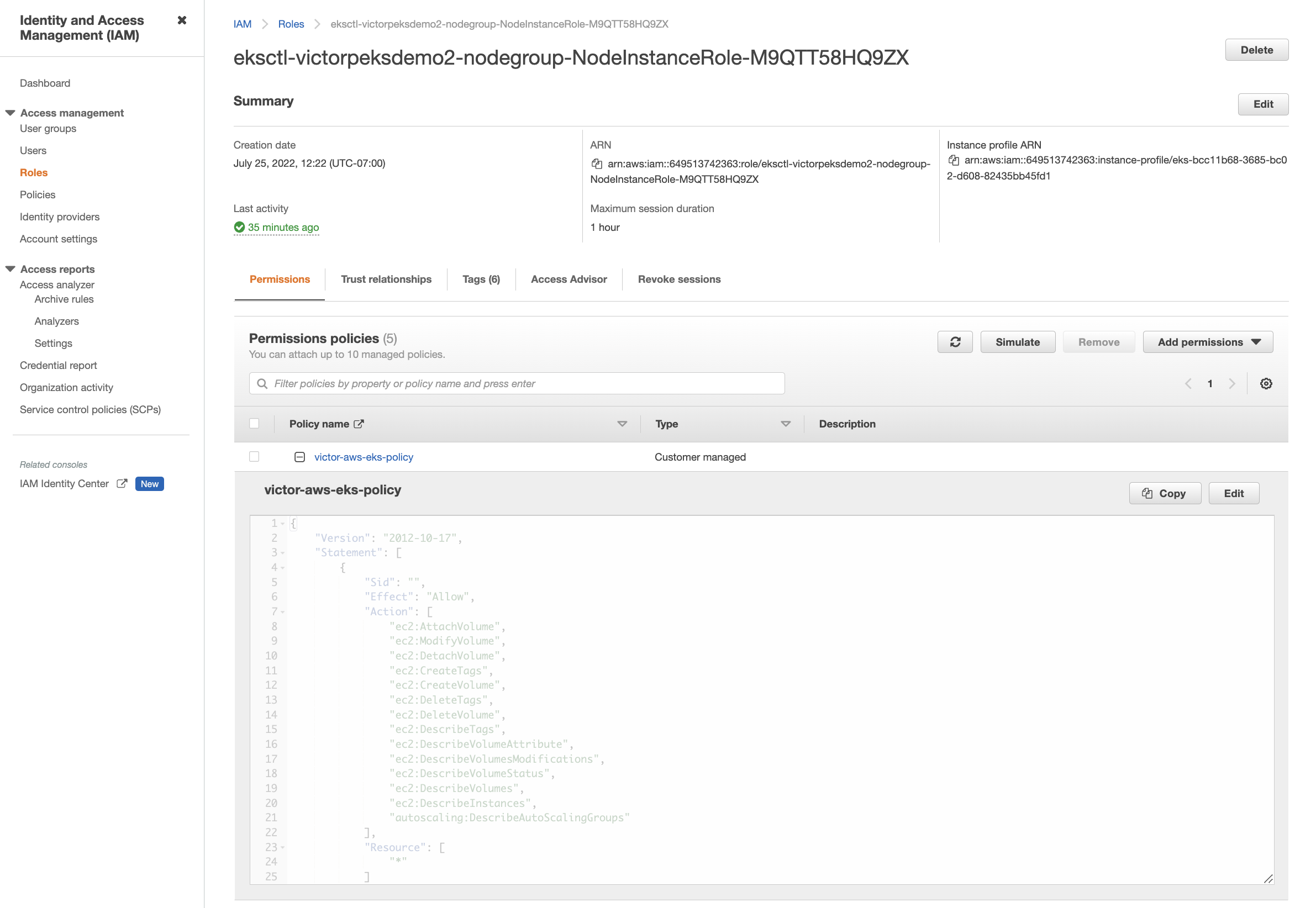

Under Other permissions policies, search for your policy name. Select your policy, and then select Attach policies to attach it.

The policy you attached appears under Permissions policies if successful.

Follow the instructions below to attach the policy to your AWS user account:

-

From the IAM page, select Users in the left pane.

-

On the Users page, search for and select your AWS user account.

-

On your user account details page, select Add permissions in the upper-right corner of the Permissions policies section.

-

Select Attach policies directly in the Permissions options section.

-

Use the search bar in the Permissions policies section to find and select your previously created policy, and then select Next.

-

Select Add permissions to attach the policy to your AWS user account.

After the policy is successfully attached, you are navigated back to your user account details page and the policy is listed in the Permissions policies section.
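When a cluster has several node groups, it helps to list every matching NodeInstanceRole before you attach the policy. The following sketch works on a plain list of role names, such as names copied from the Roles page. The matching rule is an assumption based on the eksctl naming in the example above: the name must contain both the cluster name and NodeInstanceRole. Because the rule is a simple substring match, a cluster named demo would also match roles for demo2. The helpers node_instance_roles and policy_arn are hypothetical, and policy_arn assumes a customer-managed policy in the standard aws partition.

```python
def node_instance_roles(role_names, cluster_name):
    """Return role names that look like NodeInstanceRoles for cluster_name.

    Assumes eksctl-style names such as
    eksctl-<cluster>-nodegroup-NodeInstanceRole-<suffix>.
    """
    return sorted(
        name
        for name in role_names
        if cluster_name in name and "NodeInstanceRole" in name
    )


def policy_arn(account_id: str, policy_name: str) -> str:
    """Build the ARN of a customer-managed IAM policy (aws partition)."""
    return f"arn:aws:iam::{account_id}:policy/{policy_name}"
```

With the role names and the policy ARN in hand, you could attach the policy from the AWS CLI instead of the console. Use aws iam attach-role-policy --role-name <role-name> --policy-arn <policy-arn> for each role, or aws iam attach-user-policy --user-name <user-name> --policy-arn <policy-arn> for a user account.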

Generate the Portworx specification

To install Portworx, first generate the Kubernetes manifests that you will deploy in your Amazon EKS cluster by following these steps:

-

Sign in to the Portworx Central console.

The system displays the Welcome to Portworx Central! page.

-

In the Portworx Enterprise section, select Generate Cluster Spec.

The system displays the Generate Spec page.

-

From the Portworx Version dropdown menu, select the Portworx version to install.

-

From the Platform dropdown menu, select AWS.

-

From the Distribution Name dropdown menu, select Elastic Kubernetes Service (EKS).

-

(Optional) To customize the configuration options and generate a custom specification, select Customize and perform the following steps:

Note: To continue without customizing the default configuration or generating a custom specification, skip to the last step of this procedure and select Save & Download.

-

Basic tab:

- To use an existing etcd cluster:

- Select Your etcd details.

- In the field provided, enter the host name or IP and port number.

For example, http://test.com.net:1234.

- Select one of the following authentication methods:

- Disable HTTPS – Use HTTP for etcd communication.

- Certificate Auth – Use HTTPS with an SSL certificate.

For more information, see Secure your etcd communication.

- Password Auth – Use HTTPS with username and password authentication.

- To use an internal Portworx-managed key-value store (kvdb):

- Select Built-in.

Note: To restrict Portworx to running the internal KVDB only on specific nodes, label those nodes:

kubectl label nodes node1 node2 node3 px/metadata-node=true

- To enable TLS-encrypted communication among KVDB nodes and between Portworx nodes and the KVDB cluster, select Enable TLS for internal kvdb.

- If your cluster does not already have a cert-manager, select Deploy Cert-Manager for TLS certificates.


- Select Next.


-

Storage tab:

Note: Do not mix volume types when configuring storage devices. For example, do not add both GP2 and GP3 or IO1 volumes. Mixing volume types can cause performance issues and errors.

- To enable Portworx to provision and manage drives automatically:

-

Select Create Using a Spec.

-

The selection between PX-StoreV2 and PX-StoreV1 is automatic. The default datastore is determined by a preflight check that runs across the cluster to assess whether it can deploy Portworx with the PX-StoreV2 datastore. If the preflight check passes for all nodes, PX-StoreV2 is selected by default.

-

From the Add Drive Type dropdown menu, select the EBS volume type to be used by Portworx for data storage.

For PX-StoreV2, four drives are recommended for optimal performance. When specifying EBS volume types, provide the following:

- Size (GB)

- If applicable, specify the IOPS required from EBS volume.

- If applicable, specify the Throughput for EBS volume.

- From the Encryption dropdown menu, select one of the following:

- None – Create an unencrypted EBS volume.

- BYOB – Provide an Encryption Key. For more information, see AWS KMS.

- Drive Tags – Enter multiple tags as key-value pairs to be applied to the disks created by Portworx. For more information, see How to assign custom labels to device pools.

You can add multiple drive types by selecting Add Drive Type, or remove a drive type by selecting Remove. You can also add the same drive type with different configurations using + Add Drive.

-

Initial Storage Nodes (Optional): Enter the number of storage nodes that need to be created across zones and node pools.

-

From the Default IO Profile dropdown menu, select Auto.

This enables Portworx to automatically choose the optimal I/O profile based on detected workload patterns.

-

From the Journal Device dropdown menu, select one of the following:

- None – Use the default journaling setting.

- Auto – Automatically allocate journal devices.

- Custom – Manually define a journal device.

- Select the EBS Volume Type from the dropdown menu.

- Specify the IOPS required from EBS volume and Throughput for EBS volume.

- From the Encryption dropdown menu, select one of the following:

- None – Create an unencrypted EBS volume.

- BYOB – Provide an Encryption Key. For more information, see AWS KMS.

- Drive Tags – Enter multiple tags as key-value pairs to be applied to the disks created by Portworx. For more information, see How to assign custom labels to device pools.

-

Select Enable Workload Identity to deploy Portworx on an EKS-D cluster.

Enter the AWS Workload Identity IAM Role ARN to allow Portworx to access AWS resources. Ensure you created the IAM role with the correct AWS Workload Identity Key.

-

- To enable Portworx to use all available, unused, and unmounted drives on the node:

- Select Consume Unused.

- (Optional) To designate PX-StoreV1 as the datastore, clear the PX-StoreV2 checkbox. By default, the system selects PX-StoreV2 as the datastore.

- For PX-StoreV2, in Metadata Path, enter a pre-provisioned path for storing the Portworx metadata.

The path must be at least 64 GB.

- In Drive/Device, specify the block drive(s) that Portworx uses for data storage.

- From the Journal Device dropdown menu, select one of the following:

- None – Use the default journaling setting.

- Auto – Automatically allocate journal devices.

- Custom – Manually enter a journal device path.

Enter the path of the journal device in Journal Device Path.

- To let Portworx use unmounted disks even if they contain a partition or filesystem, select the checkbox labeled "Use unmounted disks even if they have a partition or filesystem on it. Portworx will never use a drive or partition that is mounted".

Portworx never uses a mounted drive or partition, whether or not this checkbox is selected.

- To manually specify the drives on the node for Portworx to use, do the following:

- Select the Use Existing Drives option.

- (Optional) To designate PX-StoreV1 as the datastore, clear the PX-StoreV2 checkbox. By default, the system selects PX-StoreV2 as the datastore.

- For PX-StoreV2, in the Metadata Path field, enter a pre-provisioned path for storing the Portworx metadata.

The path must be at least 64 GB in size.

- In Drive/Device, specify the block drive(s) that Portworx uses for data storage.

- In Pool Label, assign a custom label in key:value format to identify and categorize storage pools.

- From the Journal Device dropdown menu, select one of the following:

- None – Use the default journaling setting.

- Auto – Automatically allocate journal devices.

- Custom – Manually enter a journal device path.

Enter the path of the journal device in Journal Device Path.

- Select Next.


-

Network tab:

- In Interface(s):

- Enter the Data Network Interface to be used for data traffic.

- Enter the Management Network Interface to be used for management traffic.

- In Advanced Settings:

- Enter the Starting port for Portworx services.

- Select Next.


-

Customize tab:

- Choose the Kubernetes platform in the Customize section.

- In the Environment Variables section, enter name-value pairs in the respective fields.

- For a disaggregated installation, set the node labels and set the ENABLE_ASG_STORAGE_PARTITIONING environment variable to true. For more information, see Deployment planning.

- In the Registry and Image Settings section:

- Enter the Custom Container Registry Location to download the Docker images.

- Enter the Kubernetes Docker Registry Secret used to authenticate to the custom container registry.

- From Image Pull Policy, select Default, Always, IfNotPresent, or Never.

This policy influences how images are managed on the node and when updates are applied.

- In Security Settings, select Enable Authorization to enable Role-Based Access Control (RBAC) and secure access to storage resources in your cluster.

- In the Advanced Settings section:

- Select the Enable Stork checkbox to enable Stork.

- Select the Enable CSI checkbox to enable CSI.

- Select the Enable Monitoring checkbox to enable monitoring for user-defined projects before installing the Portworx Operator.

- Select the Enable Telemetry checkbox to enable telemetry in the StorageCluster spec.

For more information, see Enable Pure1 integration for upgrades on Amazon EKS.

- Enter the prefix for the Portworx cluster name in the Cluster Name Prefix field.

- Select the Secrets Store Type from the dropdown menu to store and manage secure information for features such as CloudSnaps and Encryption.

- Select Finish.

- On the summary page, enter a name for the specification in Spec Name, and tags in Spec Tags.

- Select Download .yaml to download the YAML file with the customized specification or Save Spec to save the specification.


-

Select Save & Download to generate the specification.
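The Storage tab note warns against mixing EBS volume types. The generated StorageCluster URL in Deploy the StorageCluster encodes drive settings as strings such as type=gp2,size=150. Given that format, a quick pre-check can parse your drive strings and flag mixed types. This is a sketch: parse_drive_spec and check_single_type are hypothetical helpers, and the comma-separated key=value format is inferred from that example URL.

```python
def parse_drive_spec(spec: str) -> dict:
    """Parse a drive spec such as 'type=gp3,size=150' into a dict.

    Surrounding double quotes, as in the s= parameter of the example URL,
    are stripped first.
    """
    fields = {}
    for part in spec.strip().strip('"').split(","):
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed field {part!r} in {spec!r}")
        fields[key.strip()] = value.strip()
    return fields


def check_single_type(specs) -> str:
    """Return the one EBS volume type shared by all specs.

    Raises ValueError if the types differ, a type is missing, or specs is empty.
    """
    types = {parse_drive_spec(s).get("type", "").lower() for s in specs}
    if len(types) != 1 or "" in types:
        raise ValueError(f"mixed or missing volume types: {sorted(types)}")
    return types.pop()
```

For example, check_single_type(["type=gp3,size=150", "type=gp3,size=300"]) returns "gp3". Adding a gp2 entry to that list raises ValueError.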

Deploy the Portworx Operator

Use the operator specification you generated in the Generate the Portworx specification section, and deploy the Portworx Operator by running the following command.

kubectl apply -f 'https://install.portworx.com/<version-number>?comp=pxoperator'

serviceaccount/portworx-operator created

podsecuritypolicy.policy/px-operator created

clusterrole.rbac.authorization.k8s.io/portworx-operator created

clusterrolebinding.rbac.authorization.k8s.io/portworx-operator created

deployment.apps/portworx-operator created
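kubectl apply prints one line per object, in the form kind/name followed by an action. A small parser can confirm that every object was applied. In the following sketch, the names parse_apply_output and failed_objects are hypothetical. Treating created, configured, and unchanged as success is an assumption you may want to tighten. Any other line, such as a deprecation warning, is reported for review.

```python
# Actions this sketch treats as a successful apply.
OK_ACTIONS = {"created", "configured", "unchanged"}


def parse_apply_output(text: str) -> dict:
    """Map 'kind/name' to its action for each line of kubectl apply output."""
    results = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # The action is the last whitespace-separated word on the line.
        obj, _, action = line.rpartition(" ")
        results[obj] = action
    return results


def failed_objects(text: str) -> list:
    """Return the objects whose action is not in OK_ACTIONS."""
    return [obj for obj, action in parse_apply_output(text).items()
            if action not in OK_ACTIONS]
```

For the output shown above, failed_objects returns an empty list.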

Deploy the StorageCluster

Use the StorageCluster specification you generated in the Generate the Portworx specification section, and deploy the StorageCluster by running the following command.

kubectl apply -f 'https://install.portworx.com/<version-number>?operator=true&mc=false&kbver=&b=true&kd=type%3Dgp2%2Csize%3D150&s=%22type%3Dgp2%2Csize%3D150%22&c=px-cluster-XXXX-XXXX&eks=true&stork=true&csi=true&mon=true&tel=false&st=k8s&e=AWS_ACCESS_KEY_ID%3DXXXX%2CAWS_SECRET_ACCESS_KEY%3DXXXX&promop=true'

storagecluster.core.libopenstorage.org/px-cluster-xxxxxxxx-xxxx-xxxx-xxxx-8dfd338e915b created
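The StorageCluster URL carries the options you chose in Portworx Central as URL-encoded query parameters. Decoding the URL before you apply it lets you review values such as the drive spec and cluster name. It also makes clear that the e parameter in the example embeds AWS credentials, so avoid sharing the URL. This sketch only decodes; it does not assign meanings to the short parameter names. decode_spec_url is a hypothetical helper.

```python
from urllib.parse import parse_qs, urlsplit


def decode_spec_url(url: str) -> dict:
    """Return {parameter: decoded value} for a spec URL's query string.

    keep_blank_values=True keeps empty parameters such as 'kbver='.
    If a parameter repeats, only its last value is kept.
    """
    query = urlsplit(url).query
    return {key: values[-1]
            for key, values in parse_qs(query, keep_blank_values=True).items()}
```

For the URL above, decode_spec_url(...)["kd"] returns type=gp2,size=150, which is the %3D and %2C sequences decoded to = and ,.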

Monitor Portworx nodes

-

Enter the following kubectl get command and wait until all Portworx nodes show as Ready or Online in the output:

kubectl -n <px-namespace> get storagenodes -l name=portworx

NAME ID STATUS VERSION AGE

username-k8s1-node0 xxxxxxxx-xxxx-xxxx-xxxx-43cf085e764e Online 2.11.1-3a5f406 4m52s

username-k8s1-node1 xxxxxxxx-xxxx-xxxx-xxxx-4597de6fdd32 Online 2.11.1-3a5f406 4m52s

username-k8s1-node2 xxxxxxxx-xxxx-xxxx-xxxx-e2169ffa111c Online 2.11.1-3a5f406 4m52s

-

Enter the following kubectl describe command with the NAME of one of the Portworx nodes you retrieved above to show the current installation status for individual nodes:

kubectl -n <px-namespace> describe storagenode <portworx-node-name>

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal PortworxMonitorImagePullInPrgress 7m48s portworx, k8s-node-2 Portworx image portworx/px-enterprise:2.10.1.1 pull and extraction in progress

Warning NodeStateChange 5m26s portworx, k8s-node-2 Node is not in quorum. Waiting to connect to peer nodes on port 9002.

Normal NodeStartSuccess 5m7s portworx, k8s-node-2 PX is ready on this node
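While you wait, you can check the kubectl get storagenodes table programmatically instead of by eye. This sketch assumes the plain-text layout shown above, with a header row and every column populated. The function name nodes_not_ready is hypothetical. A node is treated as healthy when its status is Ready or Online, matching the wording of the step above.

```python
# Statuses the steps above treat as healthy.
HEALTHY = {"Ready", "Online"}


def nodes_not_ready(table: str) -> list:
    """Return names of storage nodes whose STATUS is not Ready or Online.

    Expects the plain-text table printed by
    `kubectl -n <px-namespace> get storagenodes`, header row first.
    """
    lines = [line for line in table.strip().splitlines() if line.strip()]
    if not lines:
        return []
    status_col = lines[0].split().index("STATUS")
    pending = []
    for line in lines[1:]:
        cols = line.split()
        if len(cols) <= status_col or cols[status_col] not in HEALTHY:
            pending.append(cols[0])
    return pending
```

You could rerun the kubectl get command until this function returns an empty list, and then describe any node it reports.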

Verify Portworx pod status

Enter the following command to list the pods in the namespace where you deployed Portworx and filter the results for Portworx pods:

kubectl get pods -n <px-namespace> -o wide | grep -e portworx -e px

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

portworx-api-8scq2 1/1 Running 1 (90m ago) 5h1m xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

portworx-api-f24b9 1/1 Running 1 (108m ago) 5h1m xx.xx.xxx.xxx username-vms-silver-sight-3 <none> <none>

portworx-api-f95z5 1/1 Running 1 (90m ago) 5h1m xx.xx.xxx.xxx username-vms-silver-sight-2 <none> <none>

portworx-kvdb-558g5 1/1 Running 0 3m46s xx.xx.xxx.xxx username-vms-silver-sight-2 <none> <none>

portworx-kvdb-9tfjd 1/1 Running 0 2m57s xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

portworx-kvdb-cjcxg 1/1 Running 0 3m7s xx.xx.xxx.xxx username-vms-silver-sight-3 <none> <none>

portworx-operator-548b8d4ccc-qgnkc 1/1 Running 13 (4m26s ago) 5h2m xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

portworx-pvc-controller-ff669698-62ngd 1/1 Running 1 (108m ago) 5h1m xx.xx.xxx.xxx username-vms-silver-sight-3 <none> <none>

portworx-pvc-controller-ff669698-6b4zj 1/1 Running 1 (90m ago) 5h1m xx.xx.xxx.xxx username-vms-silver-sight-2 <none> <none>

portworx-pvc-controller-ff669698-pffvl 1/1 Running 1 (90m ago) 5h1m xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

prometheus-px-prometheus-0 2/2 Running 2 (90m ago) 5h xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

px-cluster-xxxxxxxx-xxxx-xxxx-xxxx-fab038f0bbe6-2qsp4 2/2 Running 13 (108m ago) 3h20m xx.xx.xxx.xxx username-vms-silver-sight-3 <none> <none>

px-cluster-xxxxxxxx-xxxx-xxxx-xxxx-fab038f0bbe6-5vnzv 2/2 Running 16 (90m ago) 3h20m xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

px-cluster-xxxxxxxx-xxxx-xxxx-xxxx-fab038f0bbe6-lxzd5 2/2 Running 16 (90m ago) 3h20m xx.xx.xxx.xxx username-vms-silver-sight-2 <none> <none>

px-csi-ext-77fbdcdcc9-7hkpm 4/4 Running 4 (108m ago) 3h19m xx.xx.xxx.xxx username-vms-silver-sight-3 <none> <none>

px-csi-ext-77fbdcdcc9-9ck26 4/4 Running 4 (90m ago) 3h18m xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

px-csi-ext-77fbdcdcc9-ddmjr 4/4 Running 14 (90m ago) 3h20m xx.xx.xxx.xxx username-vms-silver-sight-2 <none> <none>

px-prometheus-operator-7d884bc8bc-5sv9r 1/1 Running 1 (90m ago) 5h1m xx.xx.xxx.xxx username-vms-silver-sight-0 <none> <none>

Note the name of one of your px-cluster pods. You will run pxctl commands from these pods in the following steps.
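To spot pods that are not fully up in a long listing, you can check the READY and STATUS columns. This sketch reads only the first three columns (NAME, READY, STATUS), because the RESTARTS column can contain spaces, for example 1 (90m ago). It also skips a NAME header if one is present; the grep filter in the command above usually drops the header. unhealthy_pods is a hypothetical name. Pods that finished normally, such as completed jobs, would also be reported.

```python
def unhealthy_pods(table: str) -> list:
    """Return pod names that are not Running with all containers ready."""
    bad = []
    for line in table.strip().splitlines():
        cols = line.split()
        if len(cols) < 3 or cols[0] == "NAME":
            continue  # skip blank lines and the header row, if present
        name, ready, status = cols[0], cols[1], cols[2]
        ready_count, _, total = ready.partition("/")  # e.g. "3/4" -> "3", "4"
        if status != "Running" or ready_count != total:
            bad.append(name)
    return bad
```

For the listing above, every pod is Running with all containers ready, so the function returns an empty list.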

Verify Portworx cluster status

You can find the status of the Portworx cluster by running pxctl status commands from a pod.

Enter the following kubectl exec command, specifying the pod name you retrieved in Verify Portworx pod status:

kubectl exec <px-pod> -n <px-namespace> -- /opt/pwx/bin/pxctl status

Defaulted container "portworx" out of: portworx, csi-node-driver-registrar

Status: PX is operational

Telemetry: Disabled or Unhealthy

Metering: Disabled or Unhealthy

License: Trial (expires in 31 days)

Node ID: xxxxxxxx-xxxx-xxxx-xxxx-bf578f9addc1

IP: xx.xx.xxx.xxx

Local Storage Pool: 1 pool

POOL IO_PRIORITY RAID_LEVEL USABLE USED STATUS ZONE REGION

0 HIGH raid0 25 GiB 33 MiB Online default default

Local Storage Devices: 1 device

Device Path Media Type Size Last-Scan

0:0 /dev/sda STORAGE_MEDIUM_SSD 32 GiB 10 Oct 22 23:45 UTC

total - 32 GiB

Cache Devices:

* No cache devices

Kvdb Device:

Device Path Size

/dev/sdc 1024 GiB

* Internal kvdb on this node is using this dedicated kvdb device to store its data.

Metadata Device:

1 /dev/sdd STORAGE_MEDIUM_SSD 64 GiB

Cluster Summary

Cluster ID: px-cluster-xxxxxxxx-xxxx-xxxx-xxxx-fab038f0bbe6

Cluster UUID: xxxxxxxx-xxxx-xxxx-xxxx-5d610fa334bd

Scheduler: kubernetes

Nodes: 3 node(s) with storage (3 online)

IP ID SchedulerNodeName Auth StorageNode Used Capacity Status StorageStatus Version Kernel OS

xx.xx.xxx.xxx xxxxxxxx-xxxx-xxxx-xxxx-bf578f9addc1 username-vms-silver-sight-3 Disabled Yes(PX-StoreV2) 33 MiB 25 GiB Online Up (This node) 2.12.0-28944c8 5.4.217-1.el7.elrepo.x86_64 CentOS Linux 7 (Core)

xx.xx.xxx.xxx xxxxxxxx-xxxx-xxxx-xxxx-4a1bafeff5bc username-vms-silver-sight-0 Disabled Yes(PX-StoreV2) 33 MiB 25 GiB Online Up 2.12.0-28944c8 5.4.217-1.el7.elrepo.x86_64 CentOS Linux 7 (Core)

xx.xx.xxx.xxx xxxxxxxx-xxxx-xxxx-xxxx-502e658bc307 username-vms-silver-sight-2 Disabled Yes(PX-StoreV2) 33 MiB 25 GiB Online Up 2.12.0-28944c8 5.4.217-1.el7.elrepo.x86_64 CentOS Linux 7 (Core)

Global Storage Pool

Total Used : 99 MiB

Total Capacity : 74 GiB

The status displays PX is operational when the cluster is running as expected. If the cluster uses the PX-StoreV2 datastore, the StorageNode column reads Yes(PX-StoreV2) for each node.
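To check the same signals from a script, you can extract them from the pxctl status text. This sketch reads three things: the first Status: line, the Nodes: summary line, and the number of node rows that contain Yes(PX-StoreV2). The regular expression assumes the Nodes: line is worded exactly as in the output above. summarize_pxctl_status is a hypothetical name.

```python
import re

# Matches, for example: "Nodes: 3 node(s) with storage (3 online)"
NODES_RE = re.compile(r"Nodes:\s+(\d+) node\(s\) with storage \((\d+) online\)")


def summarize_pxctl_status(text: str) -> dict:
    """Extract overall status, node counts, and PX-StoreV2 rows from pxctl status."""
    summary = {"status": None, "storage_nodes": None,
               "online_nodes": None, "px_storev2_nodes": 0}
    for line in text.splitlines():
        stripped = line.strip()
        if summary["status"] is None and stripped.startswith("Status:"):
            summary["status"] = stripped.split(":", 1)[1].strip()
        match = NODES_RE.search(stripped)
        if match:
            summary["storage_nodes"] = int(match.group(1))
            summary["online_nodes"] = int(match.group(2))
        if "Yes(PX-StoreV2)" in stripped:
            summary["px_storev2_nodes"] += 1
    return summary
```

For the output above, you would expect the status PX is operational, 3 storage nodes, 3 online, and 3 PX-StoreV2 rows.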

Verify Portworx pool status

Run the following command to view the Portworx drive configurations for your pod:

kubectl exec <px-pod> -n <px-namespace> -- /opt/pwx/bin/pxctl service pool show

Defaulted container "portworx" out of: portworx, csi-node-driver-registrar

PX drive configuration:

Pool ID: 0

Type: PX-StoreV2

UUID: xxxxxxxx-xxxx-xxxx-xxxx-db8abe01d4f0

IO Priority: HIGH

Labels: kubernetes.io/arch=amd64,kubernetes.io/hostname=username-vms-silver-sight-3,kubernetes.io/os=linux,medium=STORAGE_MEDIUM_SSD,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,iopriority=HIGH

Size: 25 GiB

Status: Online

Has metadata: No

Balanced: Yes

Drives:

0: /dev/sda, Total size 32 GiB, Online

Cache Drives:

No Cache drives found in this pool

Metadata Device:

1: /dev/sdd, STORAGE_MEDIUM_SSD

The output Type: PX-StoreV2 confirms that the pool uses the PX-StoreV2 datastore.
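When a node has several pools, you can map each Pool ID to its Type line instead of scanning the output by eye. This sketch assumes the Key: value layout shown above, where each pool's block starts with Pool ID: and is followed by Type:. pool_types is a hypothetical name.

```python
def pool_types(text: str) -> dict:
    """Map pool ID to datastore Type from `pxctl service pool show` output."""
    pools = {}
    current = None
    for line in text.splitlines():
        key, sep, value = line.strip().partition(":")
        if not sep:
            continue  # not a "Key: value" line
        key, value = key.strip(), value.strip()
        if key == "Pool ID":
            current = value
        elif key == "Type" and current is not None:
            pools[current] = value
    return pools
```

For the output above, pool_types returns {"0": "PX-StoreV2"}. If every value is PX-StoreV2, all pools on the node use that datastore.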

Verify pxctl cluster provision status

-

Access the Portworx CLI.

-

Run the following command to find the storage cluster:

kubectl -n <px-namespace> get storagecluster

NAME CLUSTER UUID STATUS VERSION AGE

px-cluster-xxxxxxxx-xxxx-xxxx-xxxx-fab038f0bbe6 xxxxxxxx-xxxx-xxxx-xxxx-5d610fa334bd Online 2.12.0-dev-rc1 5h6m

The status must display Online.

-

Run the following command to find the storage nodes:

kubectl -n <px-namespace> get storagenodes

NAME ID STATUS VERSION AGE

username-vms-silver-sight-0 xxxxxxxx-xxxx-xxxx-xxxx-4a1bafeff5bc Online 2.12.0-28944c8 3h25m

username-vms-silver-sight-2 xxxxxxxx-xxxx-xxxx-xxxx-502e658bc307 Online 2.12.0-28944c8 3h25m

username-vms-silver-sight-3 xxxxxxxx-xxxx-xxxx-xxxx-bf578f9addc1 Online 2.12.0-28944c8 3h25m

The status must display Online.

-

Verify the Portworx cluster provision status by running the following command, specifying the pod name you retrieved in Verify Portworx pod status:

kubectl exec <px-pod> -n <px-namespace> -- /opt/pwx/bin/pxctl cluster provision-status

NODE NODE STATUS POOL POOL STATUS IO_PRIORITY SIZE AVAILABLE USED PROVISIONED ZONE REGION RACK

xxxxxxxx-xxxx-xxxx-xxxx-502e658bc307 Up 0 ( xxxxxxxx-xxxx-xxxx-xxxx-f9131bf7ef9d ) Online HIGH 32 GiB 32 GiB 33 MiB 0 B default default default

xxxxxxxx-xxxx-xxxx-xxxx-4a1bafeff5bc Up 0 ( xxxxxxxx-xxxx-xxxx-xxxx-434152789beb ) Online HIGH 32 GiB 32 GiB 33 MiB 0 B default default default

xxxxxxxx-xxxx-xxxx-xxxx-bf578f9addc1 Up 0 ( xxxxxxxx-xxxx-xxxx-xxxx-db8abe01d4f0 ) Online HIGH 32 GiB 32 GiB 33 MiB 0 B default default default
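To see how full each pool is, you can convert the SIZE and USED columns of a provision-status row to bytes and divide. This sketch assumes the exact row layout shown above: node ID, node status, pool index, the pool UUID in parentheses, pool status, and IO priority, followed by sizes written as a number and a unit. The unit table is an assumption; an unlisted unit raises KeyError rather than producing a wrong number. to_bytes and pool_usage are hypothetical names.

```python
# Binary units assumed to appear in pxctl size columns.
UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def to_bytes(number: str, unit: str) -> float:
    """Convert a value such as ('33', 'MiB') to bytes."""
    return float(number) * UNITS[unit]


def pool_usage(row: str) -> tuple:
    """Return (node_id, pool_index, used_fraction) for one provision-status row.

    Token positions after split():
    0 node, 1 node status, 2 pool, 3 '(', 4 pool UUID, 5 ')', 6 pool status,
    7 IO priority, 8-9 SIZE, 10-11 AVAILABLE, 12-13 USED, 14-15 PROVISIONED.
    """
    cols = row.split()
    node_id, pool_index = cols[0], cols[2]
    size = to_bytes(cols[8], cols[9])
    used = to_bytes(cols[12], cols[13])
    return node_id, pool_index, used / size
```

For the rows above, each pool has 33 MiB used out of 32 GiB, a fraction of 33/32768 (about 0.1%).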

What to do next

Create a PVC. For more information, see Create your first PVC.