Install Portworx in OCP bare metal

This page provides instructions for installing Portworx on OpenShift running on bare metal.

Prerequisites

- You must have an OpenShift cluster deployed on infrastructure that meets the minimum requirements for Portworx.

- You must attach the backing storage disks to each worker node.

- You must have a dedicated disk for the internal KVDB.

- The KVDB device needs to be present on only three of your nodes, and it should have a unique device name across all the KVDB nodes.

- Network:

  - Ports 17001-17020 open for Portworx node-to-node communication

  - Ports 111, 2049, and 20048 open for sharedv4 volume support (NFSv3)

  - Port 2049 (NFS server) open only if using sharedv4 services (NFSv4)

Create a monitoring ConfigMap

Newer OpenShift versions do not support the Portworx Prometheus deployment. As a result, you must enable monitoring for user-defined projects before installing the Portworx Operator. Use the instructions in this section to configure the OpenShift Prometheus deployment to monitor Portworx metrics.

To integrate OpenShift’s monitoring and alerting system with Portworx, create a cluster-monitoring-config ConfigMap in the openshift-monitoring namespace:

apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    enableUserWorkload: true

The enableUserWorkload parameter enables monitoring for user-defined projects in the OpenShift cluster. This creates a prometheus-operated service in the openshift-user-workload-monitoring namespace.
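One possible way to apply this ConfigMap and check the result from the command line is sketched below. The function names are hypothetical, and the sketch assumes `oc` is already logged in with cluster-admin rights. If a cluster-monitoring-config ConfigMap already exists in your cluster, edit it instead, because applying this manifest would replace its config.yaml contents.

```shell
# Hypothetical helper: apply the ConfigMap shown above from an inline manifest.
enable_user_workload_monitoring() {
  oc apply -f - <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    enableUserWorkload: true
EOF
}

# Hypothetical helper: list the pods in the user-workload monitoring namespace.
check_user_workload_monitoring() {
  oc -n openshift-user-workload-monitoring get pods
}
```

Run `enable_user_workload_monitoring` first, then `check_user_workload_monitoring`. It can take a short while before the monitoring pods appear in the namespace.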

Deploy Portworx

Follow the instructions in this section to deploy Portworx.

Install Portworx Operator using the OpenShift UI

-

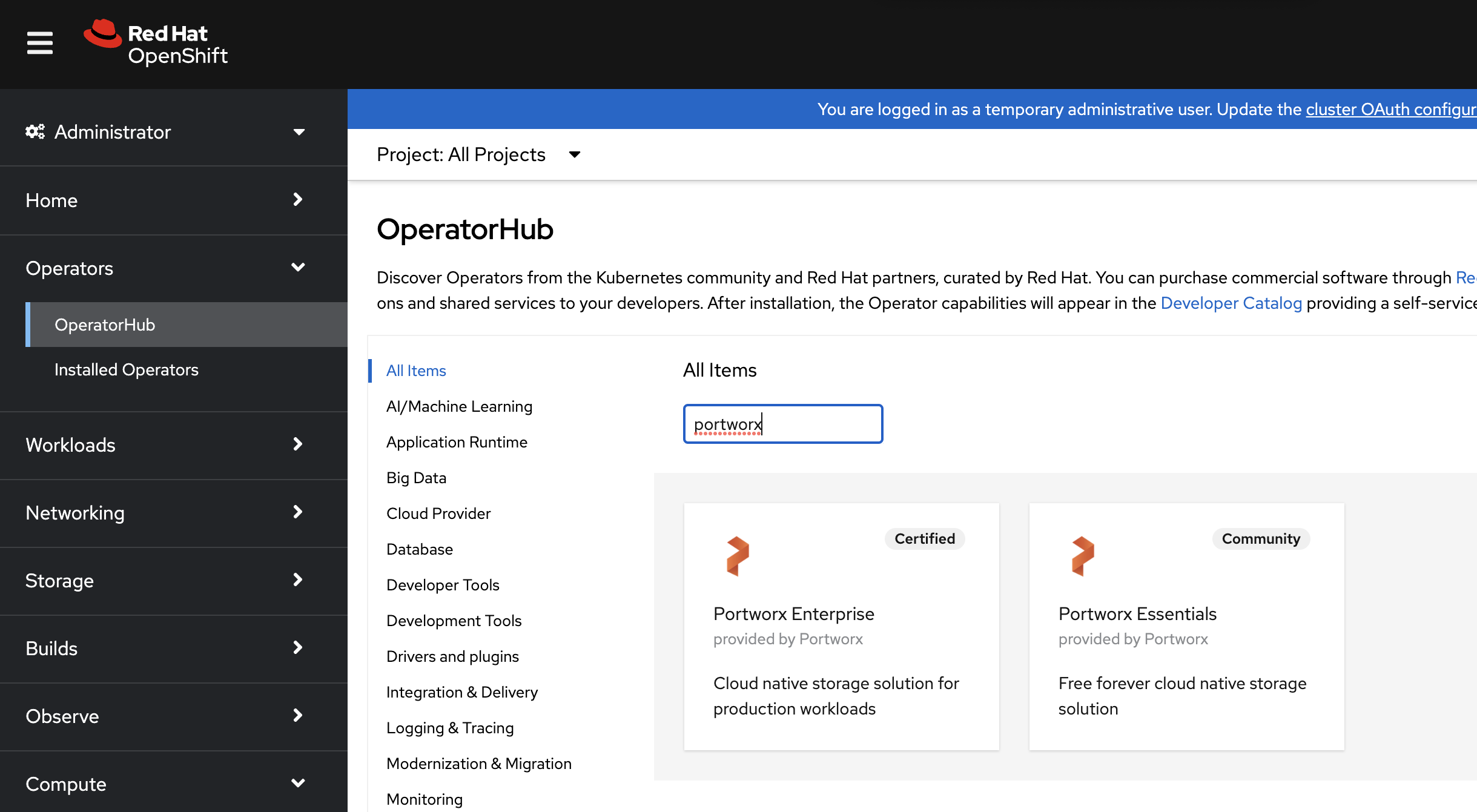

From your OpenShift UI, select OperatorHub in the left pane.

-

On the OperatorHub page, search for Portworx and select the Portworx Enterprise card:

-

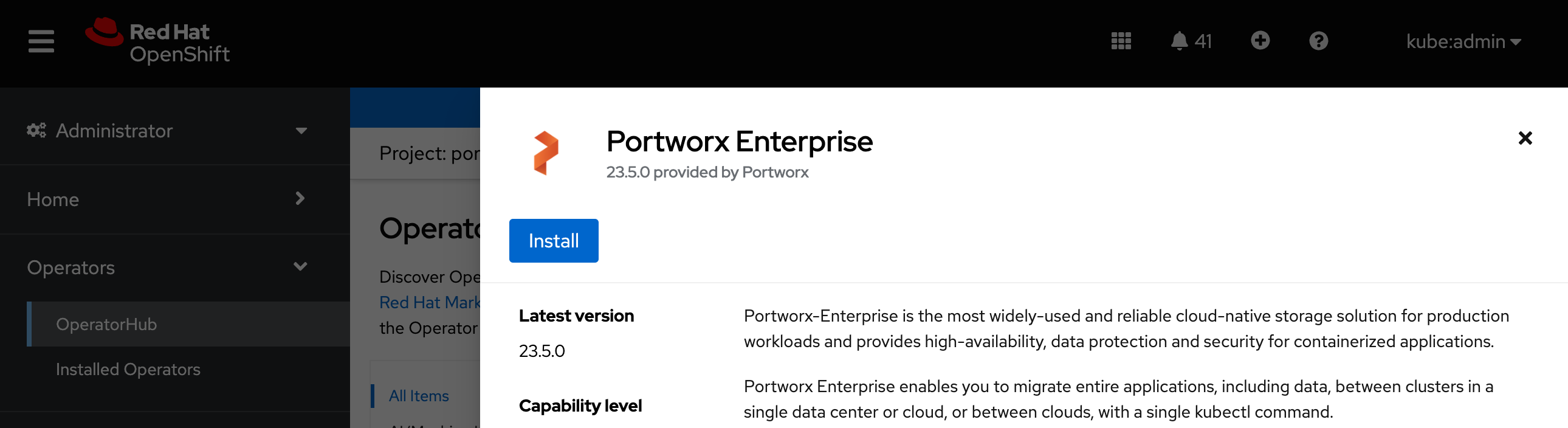

Click Install to install Portworx Operator:

-

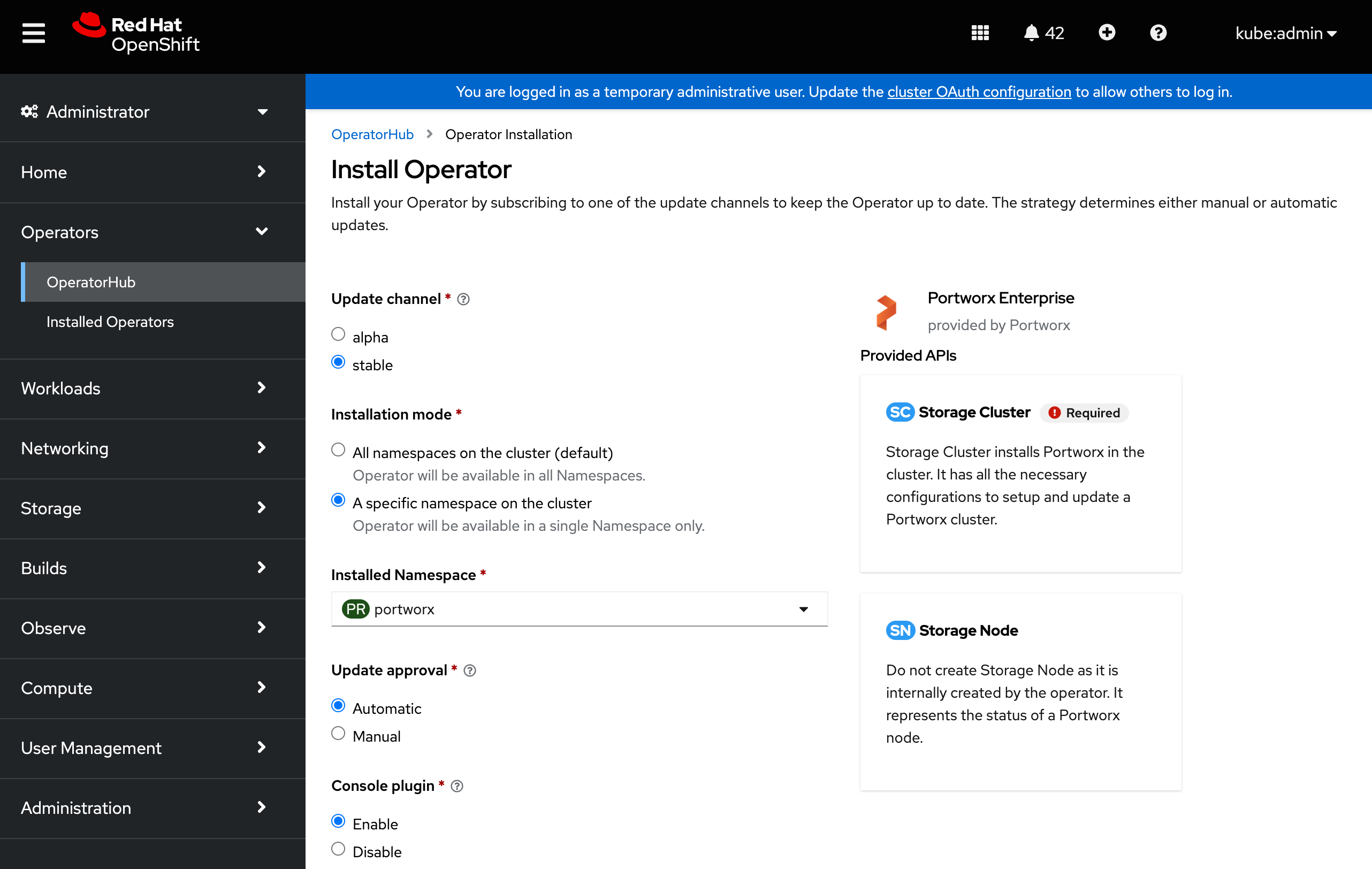

Portworx Operator begins to install and takes you to the Install Operator page. On this page:

- Select the A specific namespace on the cluster option for Installation mode.

- Choose the Create Project option from the Installed Namespace dropdown.

-

In the Create Project window, provide the name

portworxand click Create to create a namespace called portworx. -

To manage your Portworx cluster using the Portworx dashboard within the OpenShift UI, select Enable for the Console plugin option.

-

Click Install to deploy Portworx Operator in the

portworxnamespace.

Generate Portworx spec

- Sign in to the Portworx Central console.

  The system displays the Welcome to Portworx Central! page.

- In the Portworx Enterprise section, select Generate Cluster Spec.

  The system displays the Generate Spec page.

- From the Portworx Version dropdown menu, select the Portworx version to install.

- For Platform, choose DAS/. Select OpenShift4+ for Distribution Name, then click Save Spec to generate the specs.

Deploy Portworx using OpenShift UI

-

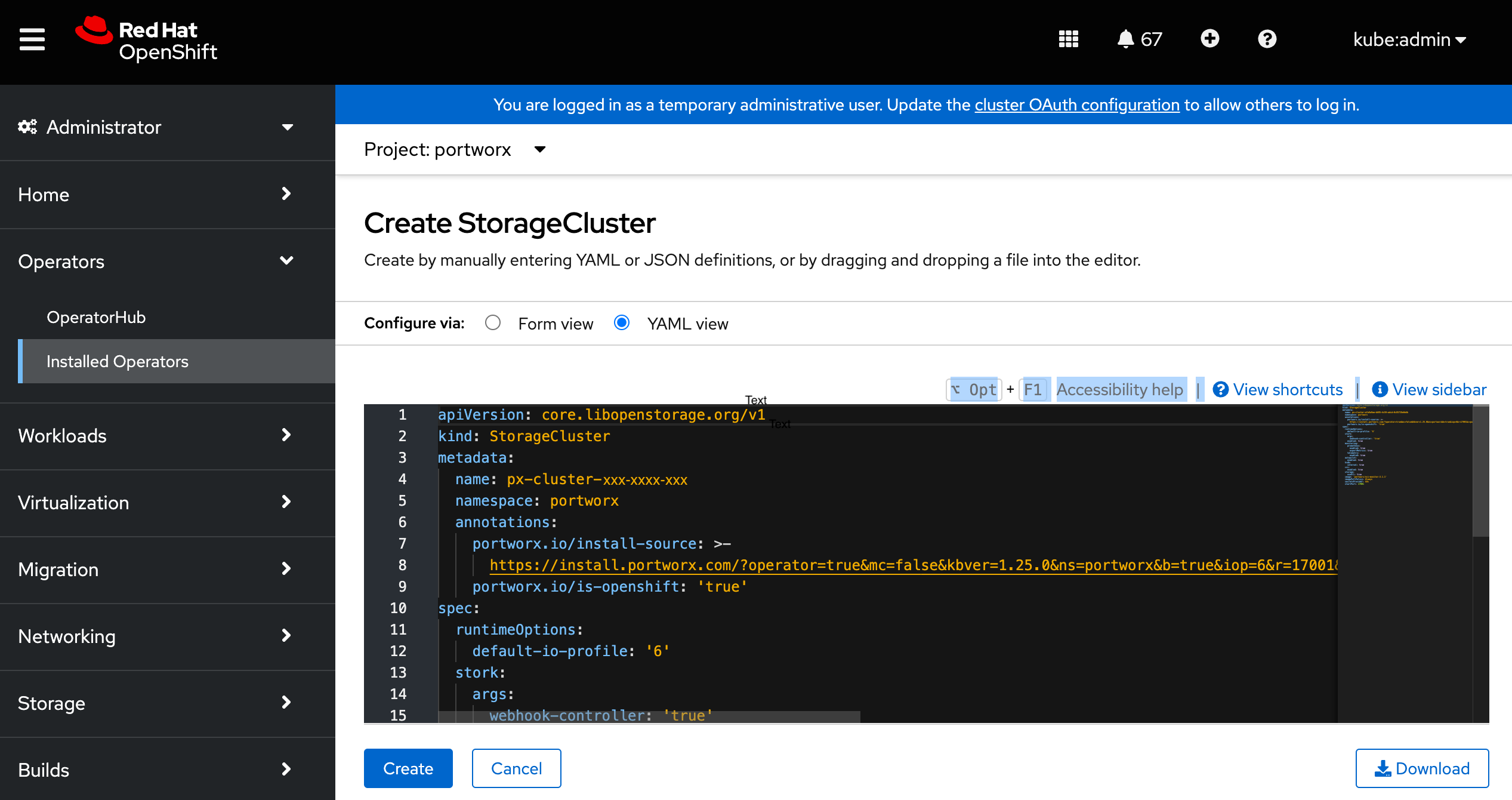

Once the Operator is successfully installed, a Create StorageCluster button appears. Click the button to create a StorageCluster object:

-

On the Create StorageCluster page, choose YAML view to configure the StorageCluster object.

-

Copy and paste the Portworx spec that you generated in the Generate Portworx spec section into the text editor and click Create to deploy Portworx:

-

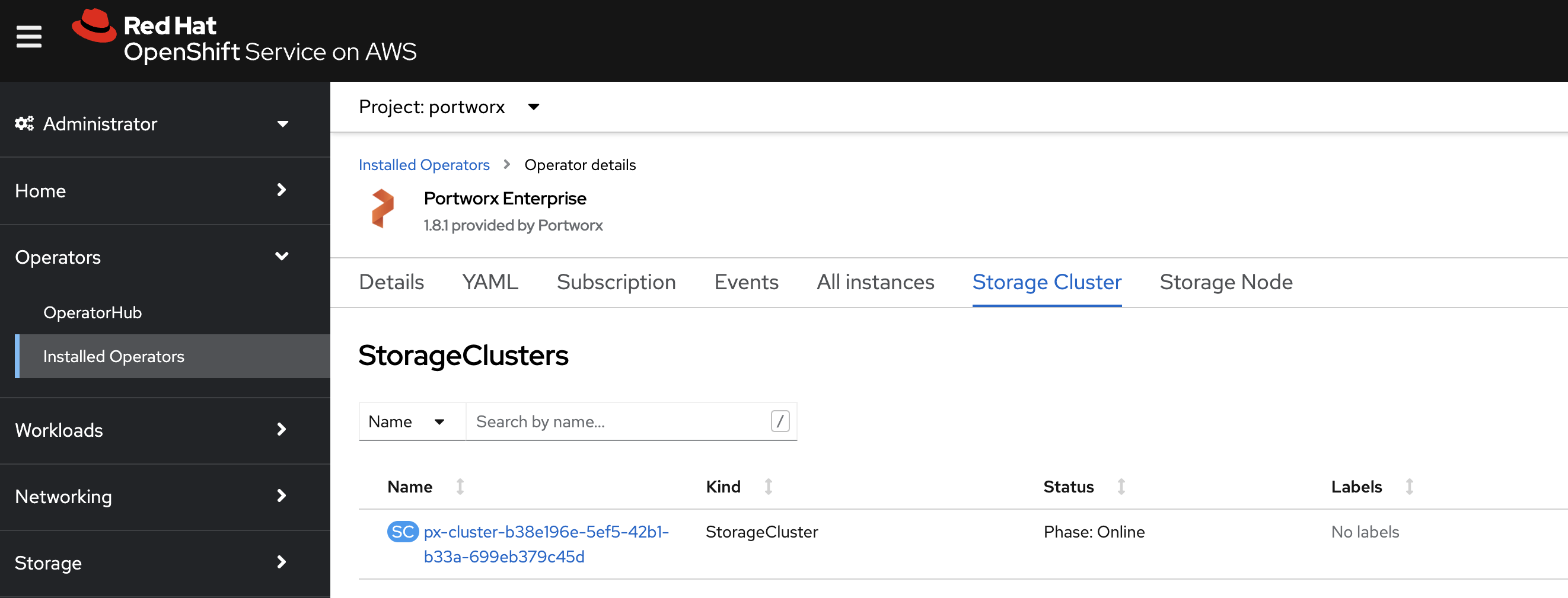

Verify that Portworx has deployed successfully by navigating to the Storage Cluster tab of the Installed Operators page. Once Portworx has fully deployed, the status will show as Online:
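The same Online check can also be polled from the command line. The following is a minimal sketch, not an official procedure. The function name and its retry parameters are hypothetical, and it assumes that the STATUS column of the StorageCluster object is backed by the `.status.phase` field.

```shell
# Hypothetical helper: poll the first StorageCluster in a namespace until it is Online.
# Arguments: namespace (default portworx), number of checks (default 60),
# seconds between checks (default 10).
wait_for_storagecluster_online() {
  ns=${1:-portworx}
  tries=${2:-60}
  delay=${3:-10}
  i=0
  while [ "$i" -lt "$tries" ]; do
    # Assumption: .status.phase holds the value shown in the STATUS column.
    phase=$(oc -n "$ns" get storagecluster -o jsonpath='{.items[0].status.phase}' 2>/dev/null)
    if [ "$phase" = "Online" ]; then
      echo "StorageCluster is Online"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "StorageCluster not Online after $tries checks (last phase: ${phase:-unknown})" >&2
  return 1
}
```

For example, `wait_for_storagecluster_online portworx 60 10` checks every 10 seconds for up to 10 minutes and returns a non-zero exit code if the cluster never reports Online.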

Verify your Portworx installation

Once you've installed Portworx, you can perform the following tasks to verify that Portworx has installed correctly.

Verify if all pods are running

Enter the following oc get pods command to list and filter the results for Portworx pods:

oc get pods -n portworx -o wide | grep -e portworx -e px

portworx-api-774c2 1/1 Running 0 2m55s 192.168.121.196 username-k8s1-node0 <none> <none>

portworx-api-t4lf9 1/1 Running 0 2m55s 192.168.121.99 username-k8s1-node1 <none> <none>

portworx-kvdb-94bpk 1/1 Running 0 4s 192.168.121.196 username-k8s1-node0 <none> <none>

portworx-operator-xxxx-xxxxxxxxxxxxx 1/1 Running 0 4m1s 10.244.1.99 username-k8s1-node0 <none> <none>

prometheus-px-prometheus-0 2/2 Running 0 2m41s 10.244.1.105 username-k8s1-node0 <none> <none>

px-cluster-1c3edc42-4541-48fc-b173-xxxx-xxxxxxxxxxxxx 2/2 Running 0 2m55s 192.168.121.196 username-k8s1-node0 <none> <none>

px-cluster-1c3edc42-4541-48fc-b173-xxxx-xxxxxxxxxxxxx 1/2 Running 0 2m55s 192.168.121.99 username-k8s1-node1 <none> <none>

px-csi-ext-868fcb9fc6-xxxxx 4/4 Running 0 3m5s 10.244.1.103 username-k8s1-node0 <none> <none>

px-csi-ext-868fcb9fc6-xxxxx 4/4 Running 0 3m5s 10.244.1.102 username-k8s1-node0 <none> <none>

px-csi-ext-868fcb9fc6-xxxxx 4/4 Running 0 3m5s 10.244.3.107 username-k8s1-node1 <none> <none>

px-prometheus-operator-59b98b5897-xxxxx 1/1 Running 0 3m3s 10.244.1.104 username-k8s1-node0 <none> <none>

Note the name of one of your px-cluster pods. You'll run pxctl commands from this pod in the following steps.
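If you prefer to script this step, the sketch below shows one way to capture a pod name and to flag pods that are not fully ready. Both function names are hypothetical, and the `name=portworx` label selector is an assumption about how the Portworx pods are labeled in your cluster.

```shell
# Hypothetical helper: print the name of one Portworx pod.
# Assumption: the Portworx pods carry the label name=portworx.
px_pod_name() {
  oc get pods -n portworx -l name=portworx -o jsonpath='{.items[0].metadata.name}'
}

# Hypothetical helper: read `oc get pods --no-headers` output on stdin and print
# the name of every pod whose READY count is incomplete or whose STATUS is not Running.
not_ready_pods() {
  awk '{ split($2, ready, "/"); if (ready[1] != ready[2] || $3 != "Running") print $1 }'
}

# Example usage:
#   PX_POD=$(px_pod_name)
#   oc get pods -n portworx --no-headers | not_ready_pods
```

Against the sample listing above, `not_ready_pods` would report the px-cluster pod shown as 1/2, which is still starting. Pods that finished normally, such as completed jobs, are also reported because their status is not Running.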

Verify Portworx cluster status

You can find the status of the Portworx cluster by running pxctl status commands from a pod. Enter the following oc exec command, specifying the pod name you retrieved in the previous section:

oc exec px-cluster-1c3edc42-4541-48fc-b173-xxxx-xxxxxxxxxxxxx -n portworx -- /opt/pwx/bin/pxctl status

Defaulted container "portworx" out of: portworx, csi-node-driver-registrar

Status: PX is operational

Telemetry: Disabled or Unhealthy

Metering: Disabled or Unhealthy

License: Trial (expires in 31 days)

Node ID: 788bf810-57c4-4df1-xxxx-xxxxxxxxxxxxx

IP: 192.168.121.99

Local Storage Pool: 1 pool

POOL IO_PRIORITY RAID_LEVEL USABLE USED STATUS ZONE REGION

0 HIGH raid0 3.0 TiB 10 GiB Online default default

Local Storage Devices: 3 devices

Device Path Media Type Size Last-Scan

0:1 /dev/vdb STORAGE_MEDIUM_MAGNETIC 1.0 TiB 14 Jul 22 22:03 UTC

0:2 /dev/vdc STORAGE_MEDIUM_MAGNETIC 1.0 TiB 14 Jul 22 22:03 UTC

0:3 /dev/vdd STORAGE_MEDIUM_MAGNETIC 1.0 TiB 14 Jul 22 22:03 UTC

* Internal kvdb on this node is sharing this storage device /dev/vdc to store its data.

total - 3.0 TiB

Cache Devices:

* No cache devices

Cluster Summary

Cluster ID: px-cluster-1c3edc42-xxxx-xxxxxxxxxxxxx

Cluster UUID: 33a82fe9-d93b-435b-xxxx-xxxxxxxxxxxxx

Scheduler: kubernetes

Nodes: 2 node(s) with storage (2 online)

IP ID SchedulerNodeName Auth StorageNode Used Capacity Status StorageStatus Version Kernel OS

192.168.121.196 f6d87392-81f4-459a-xxxx-xxxxxxxxxxxxx username-k8s1-node0 Disabled Yes 10 GiB 3.0 TiB Online Up 2.11.0-81faacc 3.10.0-1127.el7.x86_64 CentOS Linux 7 (Core)

192.168.121.99 788bf810-57c4-4df1-xxxx-xxxxxxxxxxxxx username-k8s1-node1 Disabled Yes 10 GiB 3.0 TiB Online Up (This node) 2.11.0-81faacc 3.10.0-1127.el7.x86_64 CentOS Linux 7 (Core)

Global Storage Pool

Total Used : 20 GiB

Total Capacity : 6.0 TiB

The Portworx status will display PX is operational if your cluster is running as intended.
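For scripted checks, the status line can be tested directly. This is a small sketch under the assumption that the line reads exactly as in the sample output above. The function name and the `PX_POD` variable are hypothetical.

```shell
# Hypothetical helper: read `pxctl status` output on stdin and succeed only if
# the operational status line is present.
px_is_operational() {
  grep -q 'Status: PX is operational'
}

# Example usage (PX_POD holds the pod name noted earlier):
#   oc exec "$PX_POD" -n portworx -- /opt/pwx/bin/pxctl status | px_is_operational \
#     && echo "Portworx is operational"
```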

Verify pxctl cluster provision status

- Find the storage cluster. The status should show as Online:

  oc -n portworx get storagecluster

  NAME CLUSTER UUID STATUS VERSION AGE

  px-cluster-1c3edc42-4541-48fc-xxxx-xxxxxxxxxxxxx 33a82fe9-d93b-435b-xxxx-xxxxxxxxxxxx Online 2.11.0 10m

- Find the storage nodes. The statuses should show as Online:

  oc -n portworx get storagenodes

  NAME ID STATUS VERSION AGE

  username-k8s1-node0 f6d87392-81f4-459a-xxxx-xxxxxxxxxxxxx Online 2.11.0-81faacc 11m

  username-k8s1-node1 788bf810-57c4-4df1-xxxx-xxxxxxxxxxxxx Online 2.11.0-81faacc 11m

- Verify the Portworx cluster provision status. Enter the following oc exec command, specifying the pod name you retrieved in the previous section:

  oc exec px-cluster-1c3edc42-4541-48fc-b173-xxxx-xxxxxxxxxxxxx -n portworx -- /opt/pwx/bin/pxctl cluster provision-status

  Defaulted container "portworx" out of: portworx, csi-node-driver-registrar

  NODE NODE STATUS POOL POOL STATUS IO_PRIORITY SIZE AVAILABLE USED PROVISIONED ZONE REGION RACK

  788bf810-57c4-4df1-xxxx-xxxxxxxxxxxx Up 0 ( 96e7ff01-fcff-4715-xxxx-xxxxxxxxxxxx ) Online HIGH 3.0 TiB 3.0 TiB 10 GiB 0 B default default default

  f6d87392-81f4-459a-xxxx-xxxxxxxxx Up 0 ( e06386e7-b769-xxxx-xxxxxxxxxxxxx ) Online HIGH 3.0 TiB 3.0 TiB 10 GiB 0 B default default default

Create your first PVC

For your apps to use persistent volumes powered by Portworx, you must use a StorageClass that references Portworx as the provisioner. Portworx includes a number of default StorageClasses, which you can reference with PersistentVolumeClaims (PVCs) you create. For a more general overview of how storage works within Kubernetes, refer to the Persistent Volumes section of the Kubernetes documentation.

Perform the following steps to create a PVC:

- Create a PVC referencing the px-csi-db default StorageClass and save the file:

  kind: PersistentVolumeClaim
  apiVersion: v1
  metadata:
    name: px-check-pvc
  spec:
    storageClassName: px-csi-db
    accessModes:
      - ReadWriteOnce
    resources:
      requests:
        storage: 2Gi

- Run the oc apply command to create a PVC:

  oc apply -f <your-pvc-name>.yaml

  persistentvolumeclaim/px-check-pvc created

Verify your StorageClass and PVC

- Enter the following oc get storageclass command, specifying the name of the StorageClass you referenced in the steps above:

  oc get storageclass <your-storageclass-name>

  NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

  px-csi-db pxd.portworx.com Delete Immediate false 24m

  oc returns details about your StorageClass if it exists. Verify that the configuration details appear as you intended.

- Enter the oc get pvc command. If this is the only PVC you've created, you should see only one entry in the output:

  oc get pvc <your-pvc-name>

  NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

  px-check-pvc Bound pvc-dce346e8-ff02-4dfb-xxxx-xxxxxxxxxxxxx 2Gi RWO example-storageclass 3m7s

  oc returns details about your PVC if it was created correctly. Verify that the configuration details appear as you intended.
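The Bound status can also be checked in a script by reading the PVC's `.status.phase` field. The sketch below is one way to do that. The function name is hypothetical, and it looks up the PVC in the current project.

```shell
# Hypothetical helper: print the phase of the named PVC in the current project
# and succeed only if the phase is Bound.
pvc_is_bound() {
  phase=$(oc get pvc "$1" -o jsonpath='{.status.phase}')
  echo "PVC $1 phase: ${phase:-unknown}"
  [ "$phase" = "Bound" ]
}

# Example usage:
#   pvc_is_bound px-check-pvc
```

A phase of Pending instead of Bound usually means the volume has not been provisioned yet, in which case `oc describe pvc <your-pvc-name>` shows the related events.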